AI in the classroom? A marvel. A menace. A mystery. Teachers, professors, educators in general, you know the drill. Students turning in papers that seem too good to be true. Sentences too polished. Ideas too refined. The nagging suspicion that it’s not human brilliance but artificial intelligence at play. The problem? AI-generated content. The solution? AI detection tools. But not just any tools. You need accuracy, reliability, transparency. You need Passed AI, one of the best AI content detection tools.

I’ve tested the tool thoroughly, and I’m here to showcase all the features. No jargon, no fluff, just the facts. Passed AI is not just another name in the market; it’s a game-changer. It’s the answer to the question you didn’t know you were asking. It’s about academic integrity in the age of AI. Intrigued? Let’s dive in.

Oh, didn’t I tell you? Passed AI is one of the top three AI detection tools on the web.

Quick Summary: Is Passed AI Right for You?

In a nutshell, Passed AI brings unprecedented power to IDing AI content. The Chrome extension gives it an unrivaled edge, arming teachers with superior tools.

The key features transform cheating detection:

- Chrome extension: slick, with seamless Google Docs integration

- AI Content Detection: surgically precise AI text ID that will catch your sneakiest ChatGPT creations

- Plagiarism detection: on par with top competitors

- Audit Report: reveals all, from edits to duration to everything else

- Document Timeline: visually maps changes, so AI use is obvious

- Replay Tool: forensically reconstructs how the document was created

Beyond the core, Passed AI delivers:

Passed AI drawbacks (it has some):

Starts at $9.99/month

But the cons pale next to Passed AI’s immense capabilities. For teachers serious about combating AI content, this is a must-have. The Chrome extension alone merits the price tag.

Next up? Testing. Real-world scenarios. Passed AI in action.

Round 1: Testing Passed AI for sneakiness

Let’s cut to the chase with the most intriguing part: how Passed AI works inside Google Docs. It’s a handy, cool feature for educators.

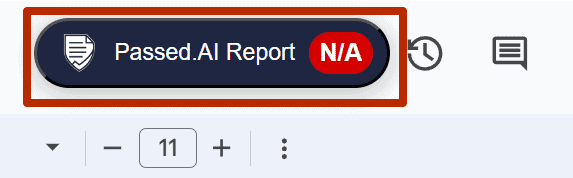

Here’s what the plugin looks like once installed:

So let’s test this bad boy immediately.

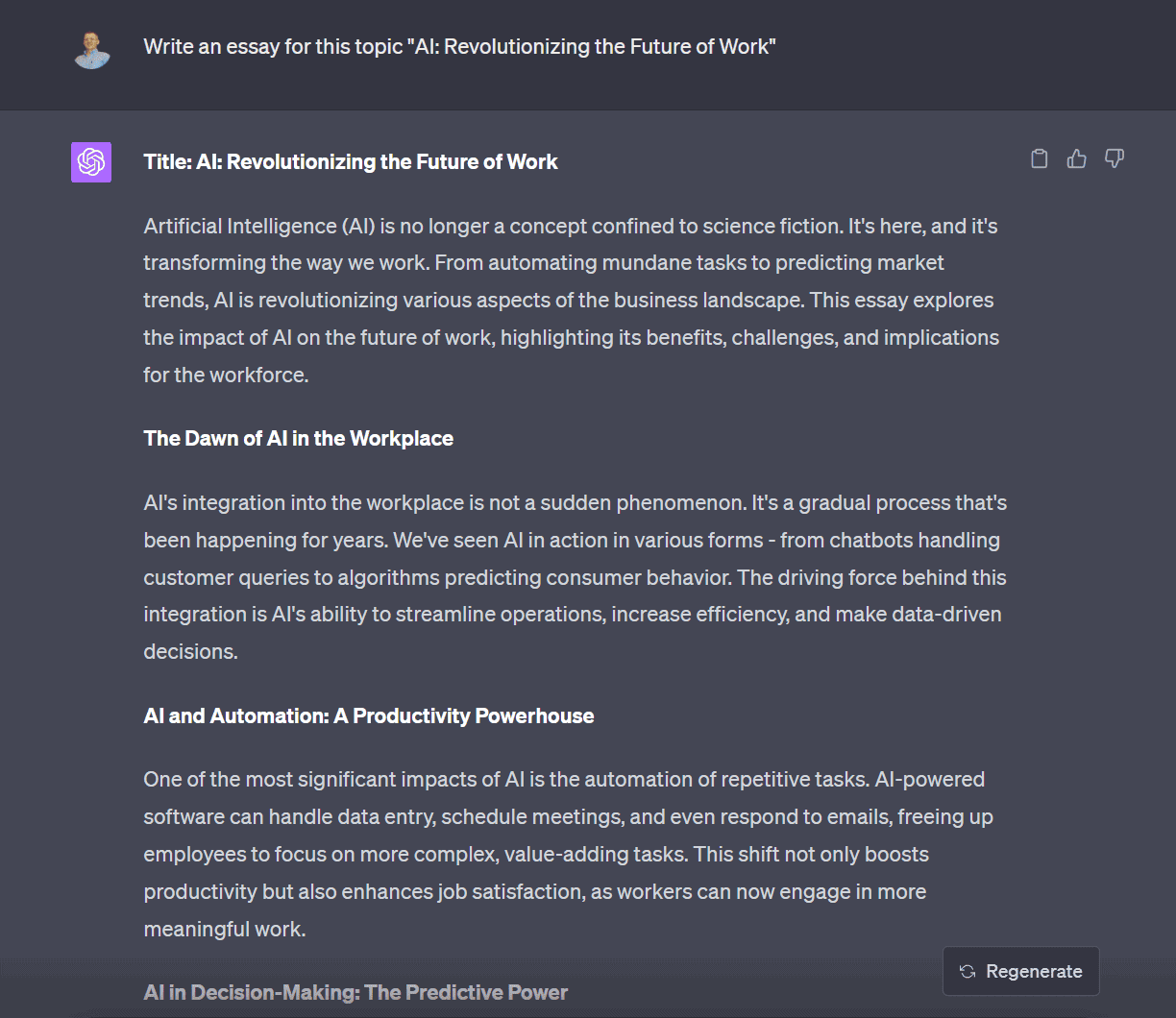

I’ll whip up an essay using ChatGPT (GPT-4).

Let me just grab that, copy it, and we’ll see what info the plugin spits out.

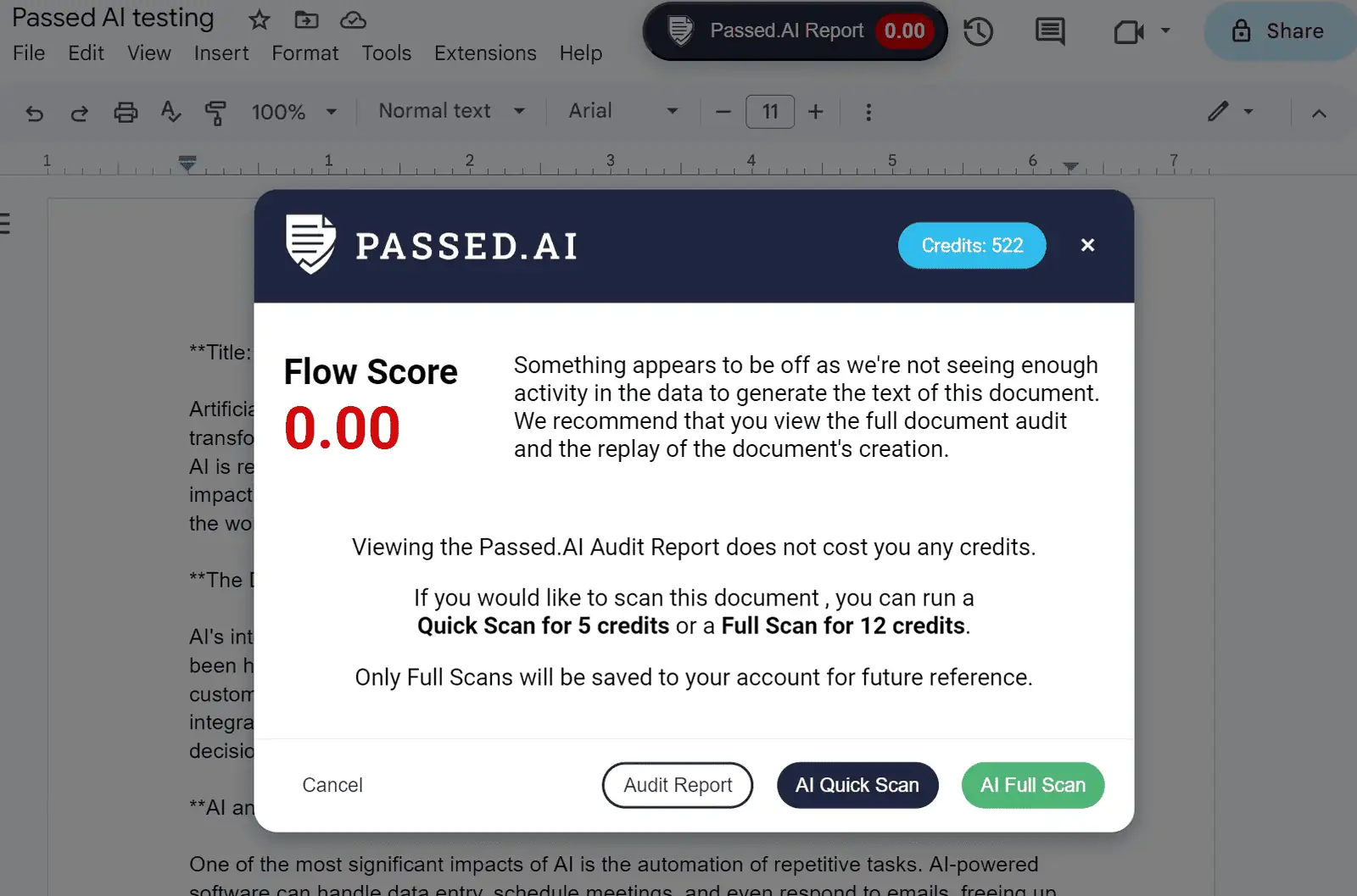

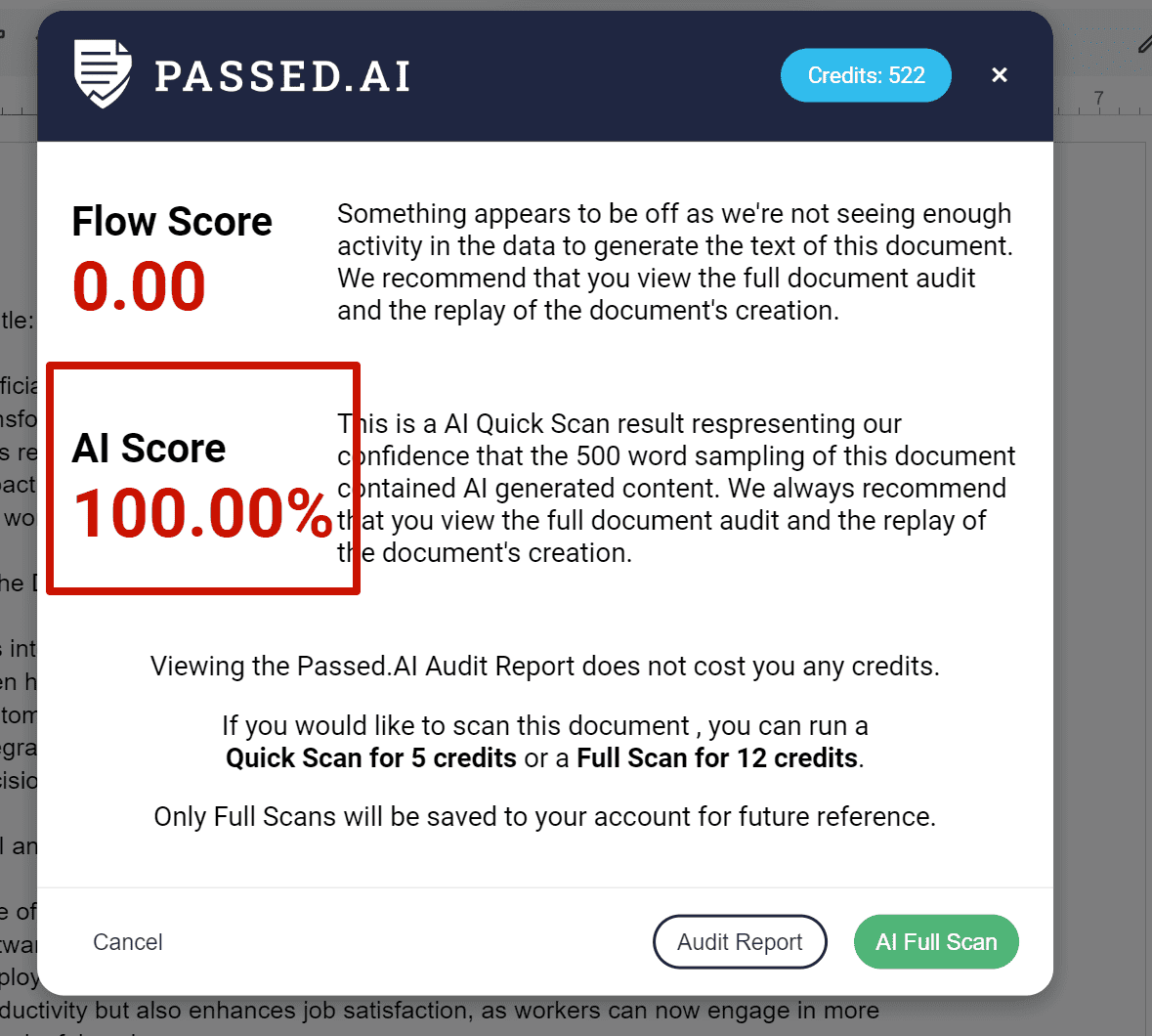

Here’s what we’ve got after clicking the plugin:

Let’s analyze this together:

Flow Score: 0.00. Not good. It screams “copy/pasted content”. We know where it came from.

Next up: three buttons. I’ll walk through each since we’re examining this tool together. First, “Audit Report”.

A new tab opens up, presenting data on the document. Convenient.

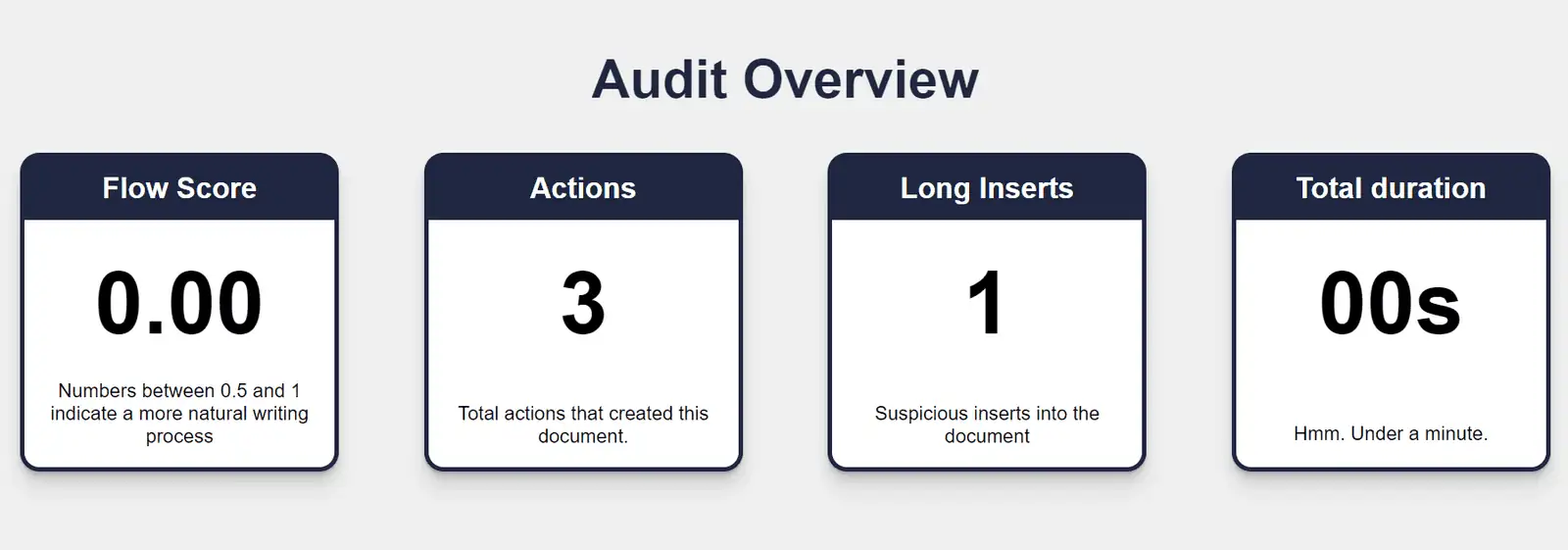

There’s the Audit Overview, Document Changes Timeline, Long Inserts, Contributors, Contributors Timeline (for multiple authors), and Sessions. In order:

Here’s what we’ve got:

- Flow Score: 0 (shady)

- Actions: 3 (for a whole essay?)

- Long Inserts: 1 (I copied the essay from ChatGPT, and the plugin caught that)

- Total duration: the time it took to “write” the essay.

Those metrics alone show the essay wasn’t written by a human. More to come.

Before we move on, here’s a quick guide on interpreting Passed AI results:

Interpreting Passed AI Results

Flow Score

- Combines metrics to evaluate how the document was written and arrive at a score from 0 to 1.

- 0 = least human-like writing process

- 1 = most human-like writing process

- Most human-written documents score 0.5 to 0.9.
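If it helps to see the reading guide as logic, here’s a minimal Python sketch. It encodes only the ranges stated above (0 to 1, with most human-written documents landing between 0.5 and 0.9); it does not compute a Flow Score, since Passed AI doesn’t publish how it combines its metrics. The function name `describe_flow_score` and its labels are my own, hypothetical choices.

```python
def describe_flow_score(score: float) -> str:
    """Map a Flow Score (0 to 1) onto the ranges in the reading guide.

    Hypothetical helper: it only interprets a score you already have.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("Flow Score must be between 0 and 1")
    if score < 0.5:
        # Below the range most human-written documents fall into.
        return "below typical human range: review the full audit"
    if score <= 0.9:
        # 0.5 to 0.9 is where most human-written documents score.
        return "typical human range"
    # Closer to 1 means an even more human-like writing process.
    return "above typical human range: very human-like process"


# The two Flow Scores from this review: the pasted ChatGPT essay and my own text.
for s in (0.00, 0.67):
    print(s, "->", describe_flow_score(s))
```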

AI Score

- Shows the likelihood content was AI-generated as a percentage (0 to 100).

- Low score = less likely AI-written.

- High score = more likely AI-written.

- But a high score doesn’t necessarily mean AI wrote the whole thing.

- If the AI Score is high, review the full audit carefully to avoid false positives.

- There’s a small chance human-written content mimics AI “fingerprints”.

- Passed AI’s approach minimizes this risk.

Audit Page

- Shows AI Confidence, Humanity, Long Inserts, Actions.

- AI Confidence expands on AI Score above.

- Long Inserts flags sections copied/pasted.

- Actions = total document changes.

- Low Actions relative to length = concern.

- Document Changes graph shows inserts, deletes over time.

History Overview

- Shows Total Duration, Contributors, Changes/Length, Deletes Ratio.

- Unrealistically short duration = concern.

- Unexpected Contributors = discuss with student.

- Changes/Length 0.5-1 = more natural writing.

- Deletes Ratio measures inserts vs deletes.

- Contributors shows each person’s changes.
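Passed AI doesn’t spell out the exact formulas behind Changes/Length and Deletes Ratio, so the Python sketch below is a guess, and I’m labeling it as one: I assume Changes/Length means edit actions per word of final text, and Deletes Ratio means deleted characters over inserted characters. `EditHistory` and the helper functions are hypothetical. The action counts (3 and 794) come from my own tests in this review; the word and character counts are made-up illustration values.

```python
from dataclasses import dataclass


@dataclass
class EditHistory:
    """Hypothetical edit-history summary (not Passed AI's data model)."""
    actions: int         # recorded edit events
    words: int           # words in the final document
    chars_inserted: int  # characters ever inserted
    chars_deleted: int   # characters ever deleted


def changes_per_length(h: EditHistory) -> float:
    # ASSUMPTION: Changes/Length = edit actions per word of final text.
    return h.actions / h.words if h.words else 0.0


def deletes_ratio(h: EditHistory) -> float:
    # ASSUMPTION: deletes measured against inserts, by character count.
    return h.chars_deleted / h.chars_inserted if h.chars_inserted else 0.0


def looks_natural(h: EditHistory) -> bool:
    # The guide treats a Changes/Length of 0.5 to 1 as more natural writing.
    return 0.5 <= changes_per_length(h) <= 1.0


# Action counts are from my tests; word/character counts are made up.
pasted = EditHistory(actions=3, words=600, chars_inserted=3600, chars_deleted=0)
typed = EditHistory(actions=794, words=1000, chars_inserted=6500, chars_deleted=900)
for name, h in (("pasted", pasted), ("typed", typed)):
    print(name, round(changes_per_length(h), 3), round(deletes_ratio(h), 3), looks_natural(h))
```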

Replay

- Watch document creation sped up or slowed down.

- Click Play/Pause, Back, Speed buttons.

Now, let’s move on.

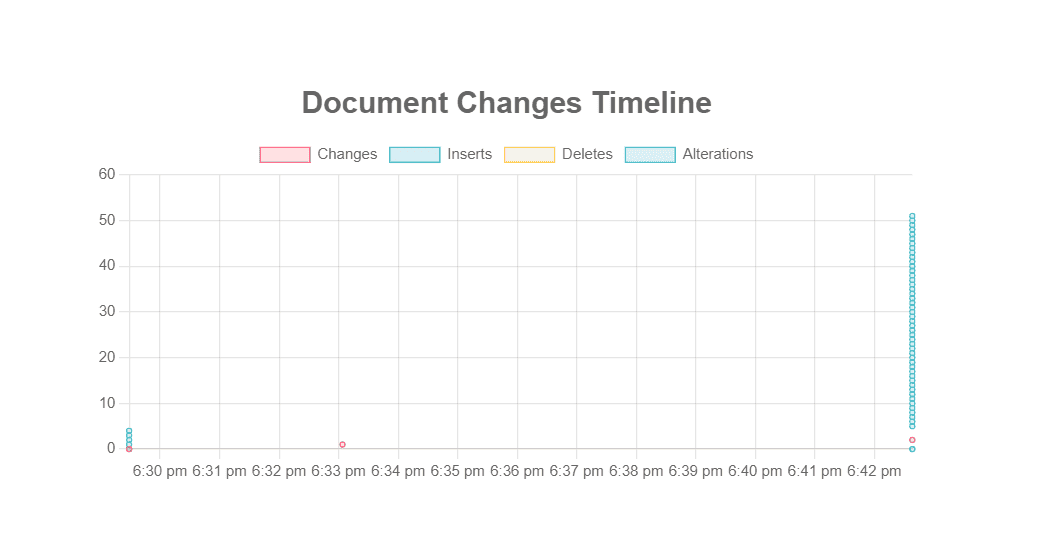

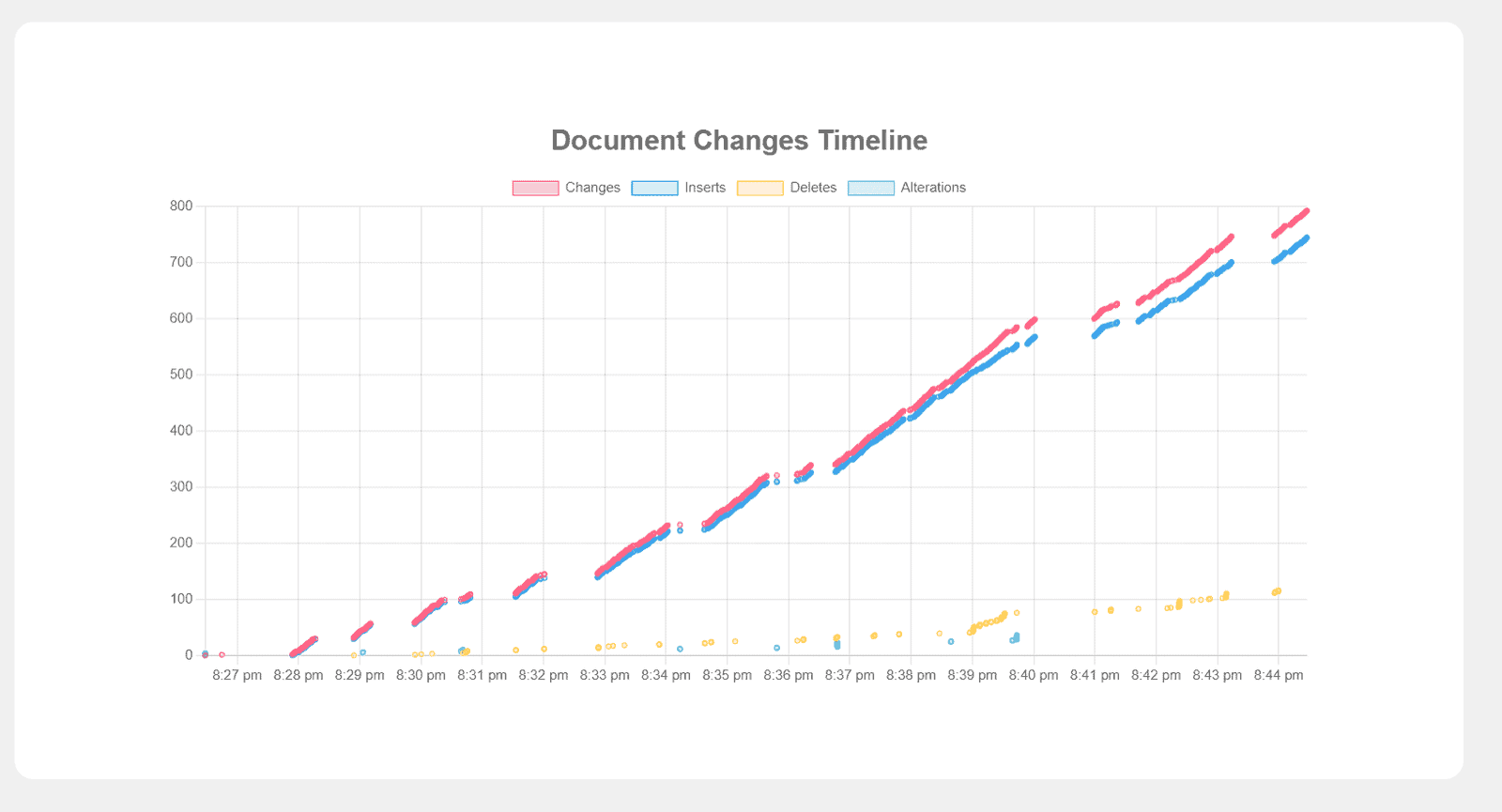

Document Change Timeline: Visual timeline of changes. Crystal clear.

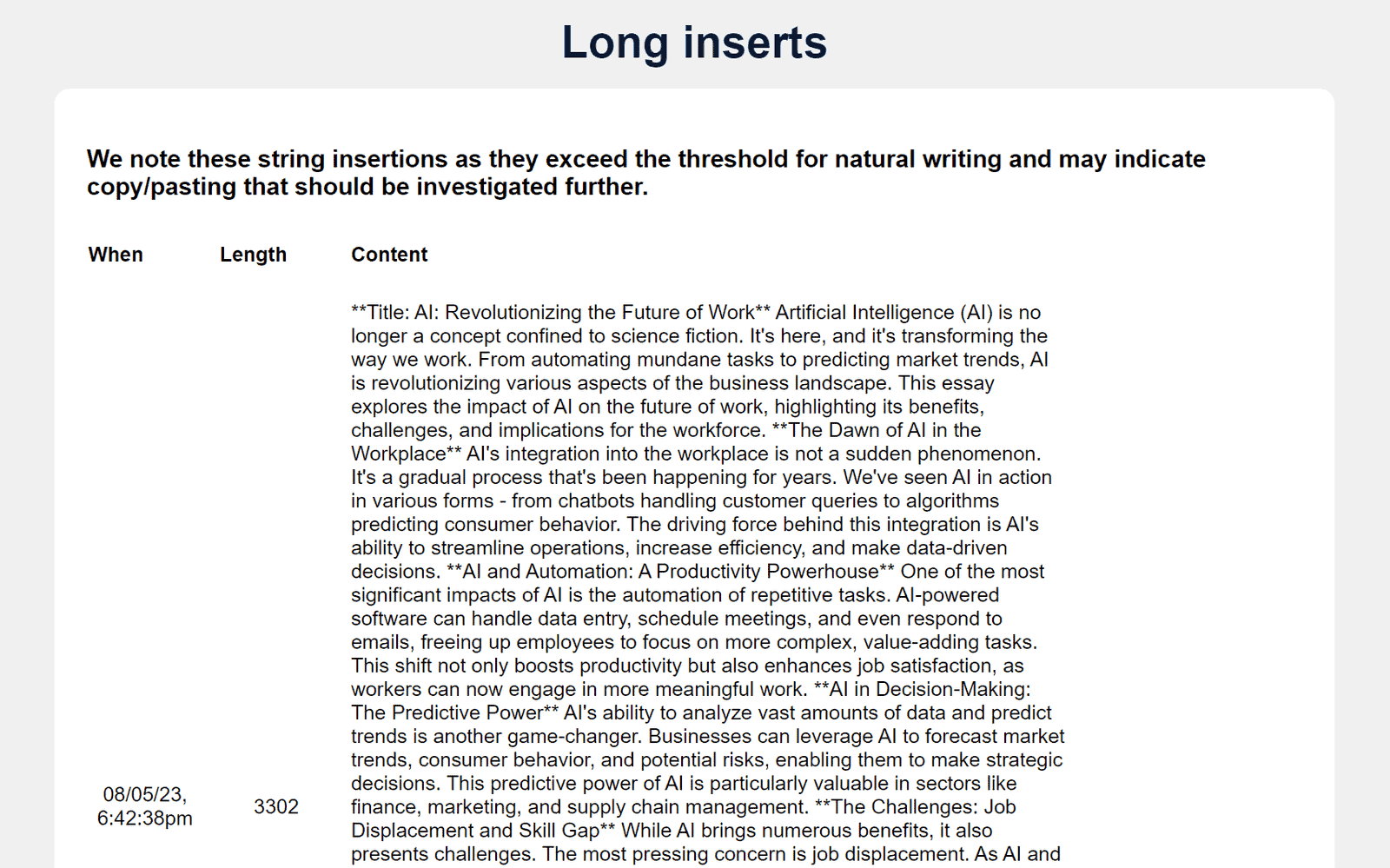

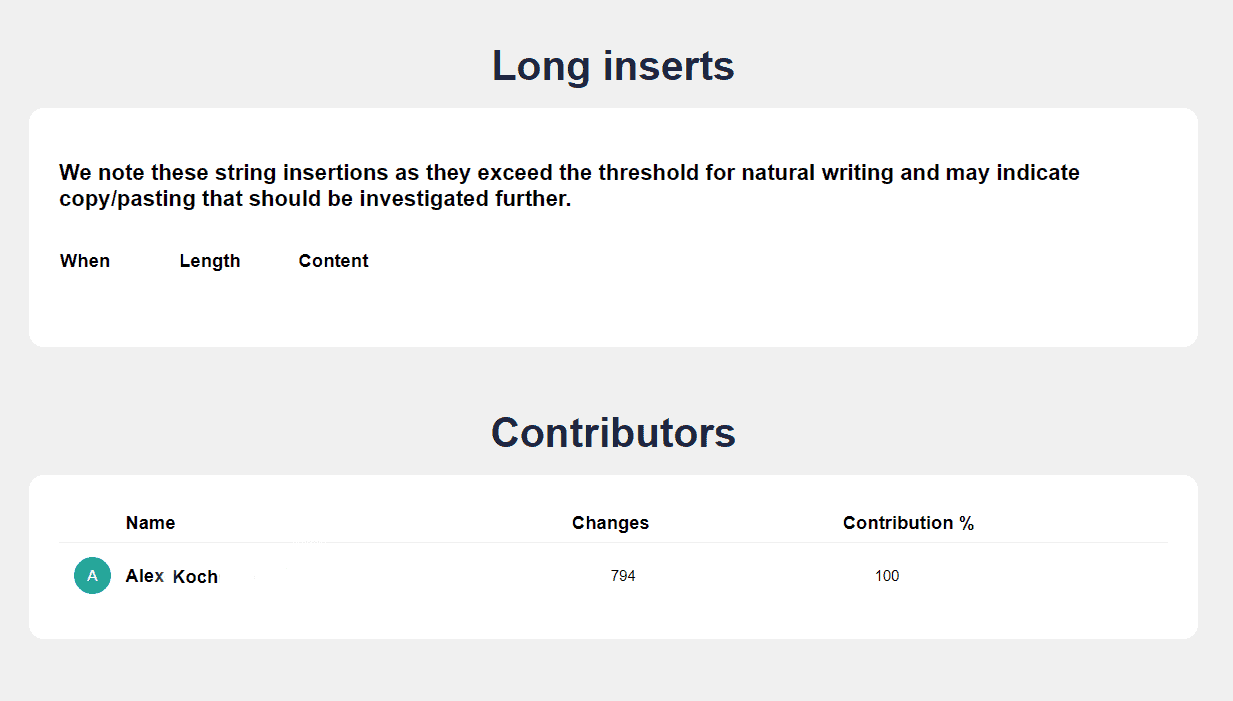

Long Inserts: Passed AI flags inserts that exceed natural writing thresholds, which points to potential copying/pasting.

We can see exactly what text was copied and the amount.
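Here’s the idea behind Long Inserts as a tiny Python sketch: typing produces many small insert events, pasting produces one big one, so you flag any single insert above a size threshold. The 200-character `LONG_INSERT_CHARS` cutoff and the `find_long_inserts` helper are hypothetical; Passed AI’s real threshold isn’t published here.

```python
LONG_INSERT_CHARS = 200  # HYPOTHETICAL threshold, not Passed AI's actual cutoff


def find_long_inserts(inserts: list[str], threshold: int = LONG_INSERT_CHARS) -> list[tuple[int, int]]:
    """Return (event index, length) for every insert longer than the threshold."""
    return [(i, len(text)) for i, text in enumerate(inserts) if len(text) > threshold]


# Typing produces many small inserts; pasting an essay produces one huge insert.
typed = ["The ", "replay ", "tool ", "is ", "handy."]
pasted = ["Title\n", "x" * 2500]  # stand-in for a pasted ChatGPT essay
print(find_long_inserts(typed))   # []
print(find_long_inserts(pasted))  # [(1, 2500)]
```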

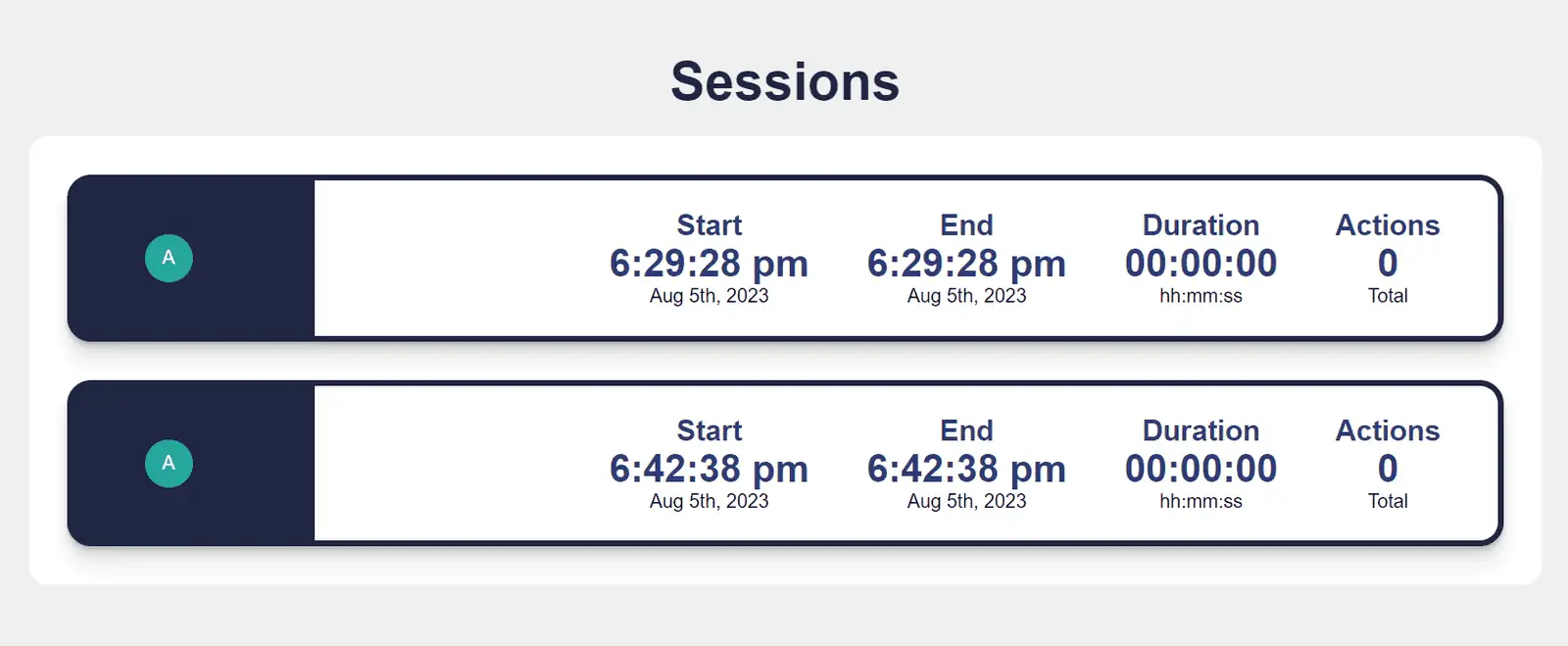

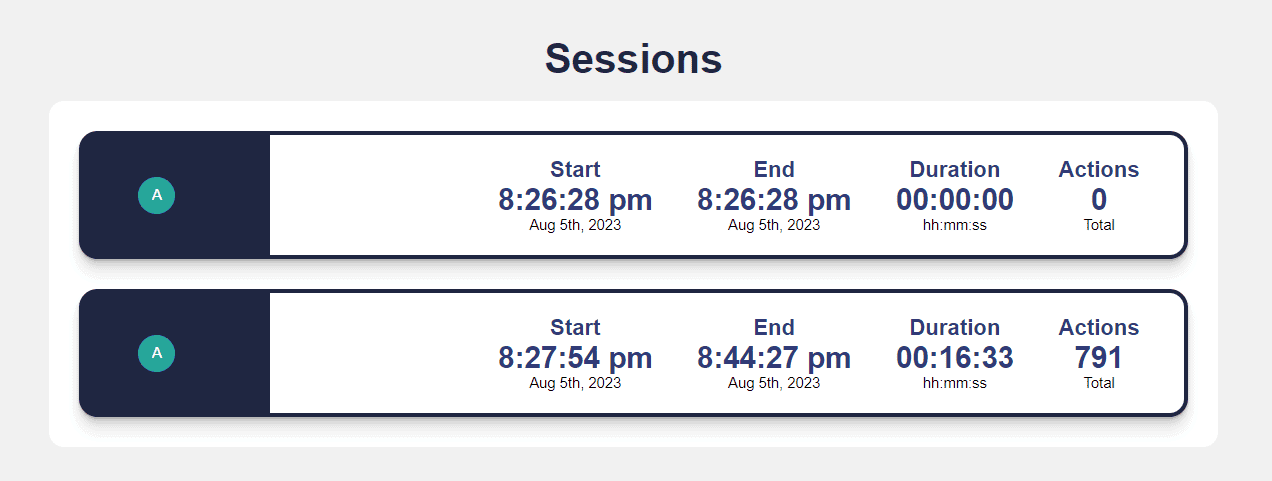

Sessions help understand who worked on the document and how much.

Everything’s done flawlessly here. Perfecto.

But let’s rewind back to the top of the page:

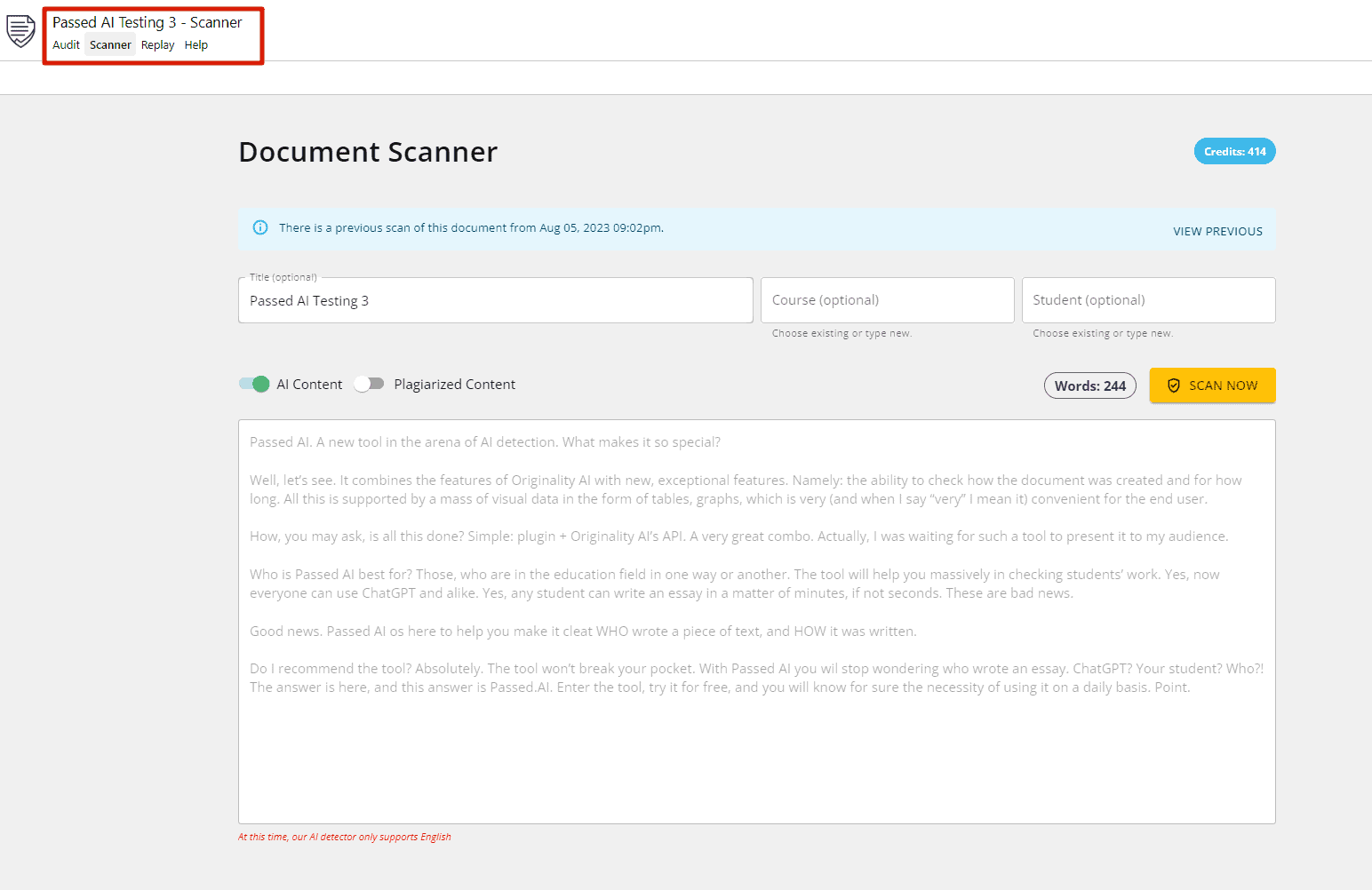

We’ve got 4 tabs up here. We’ve already gone through the first one together. Now on to “Scanner”.

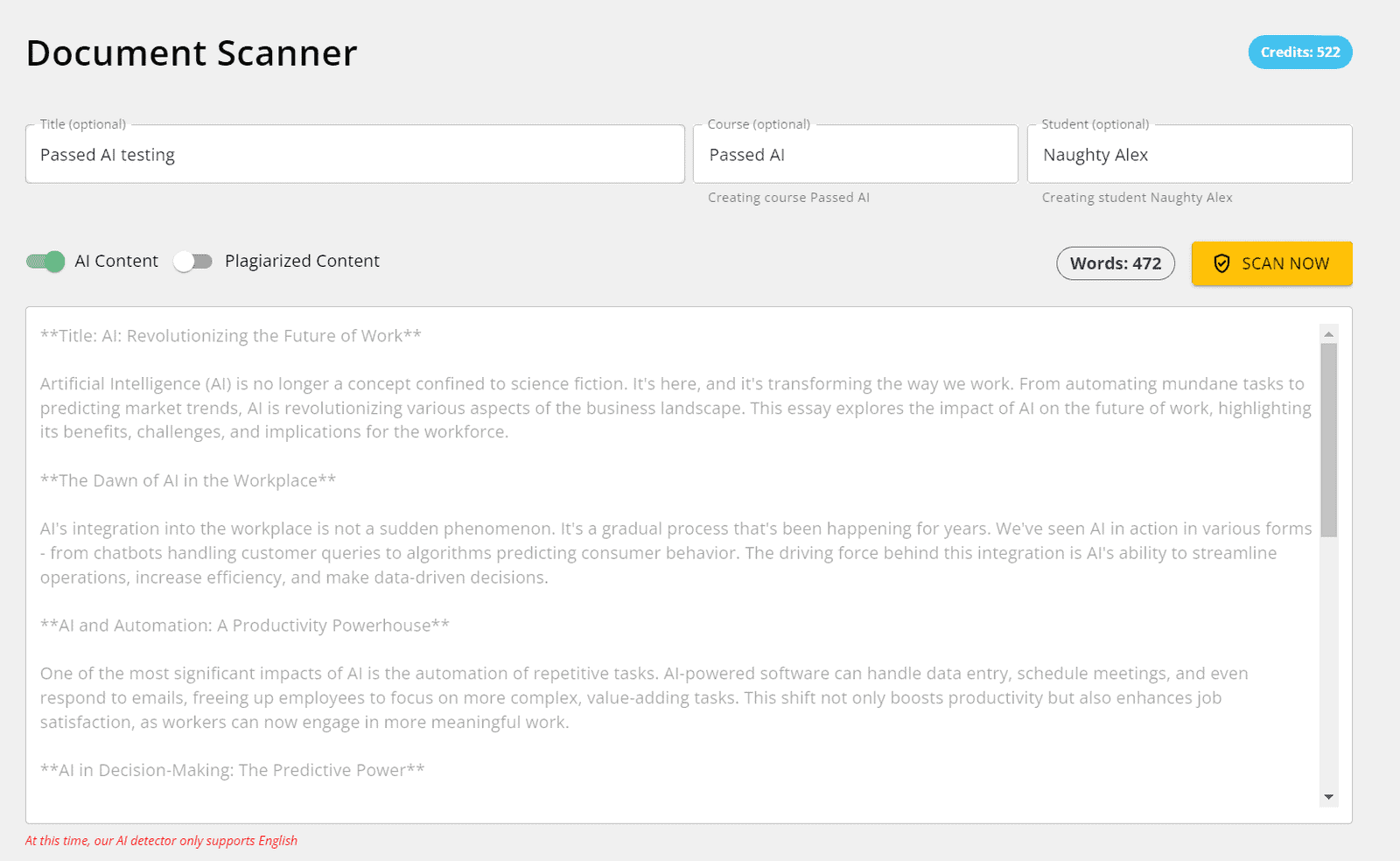

This lets us scan a document to ID the creator: human or AI? Let’s do it:

Press “Scan Now” (and check plagiarism too):

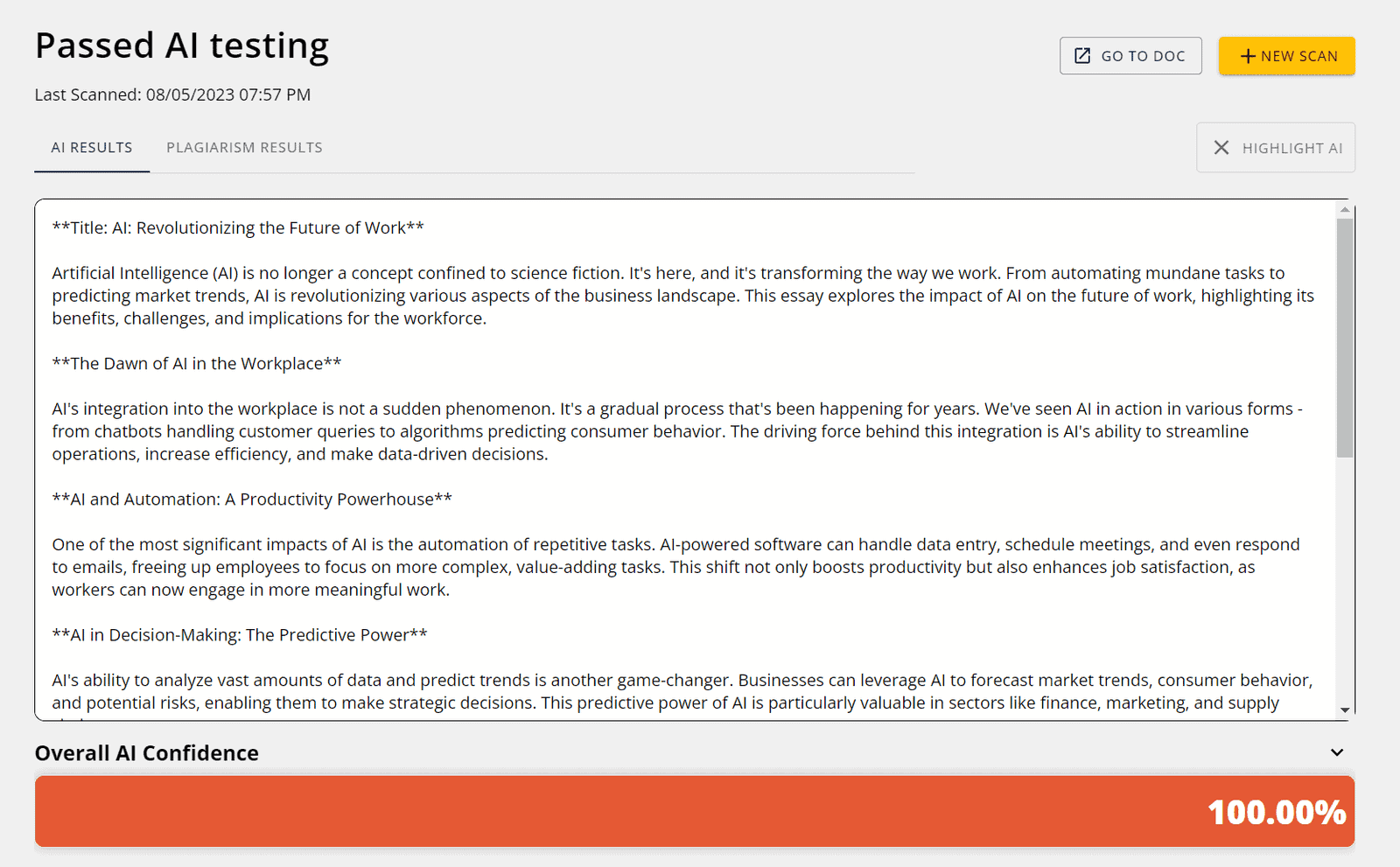

The “AI Results” tab shows: 100% AI. Accurate.

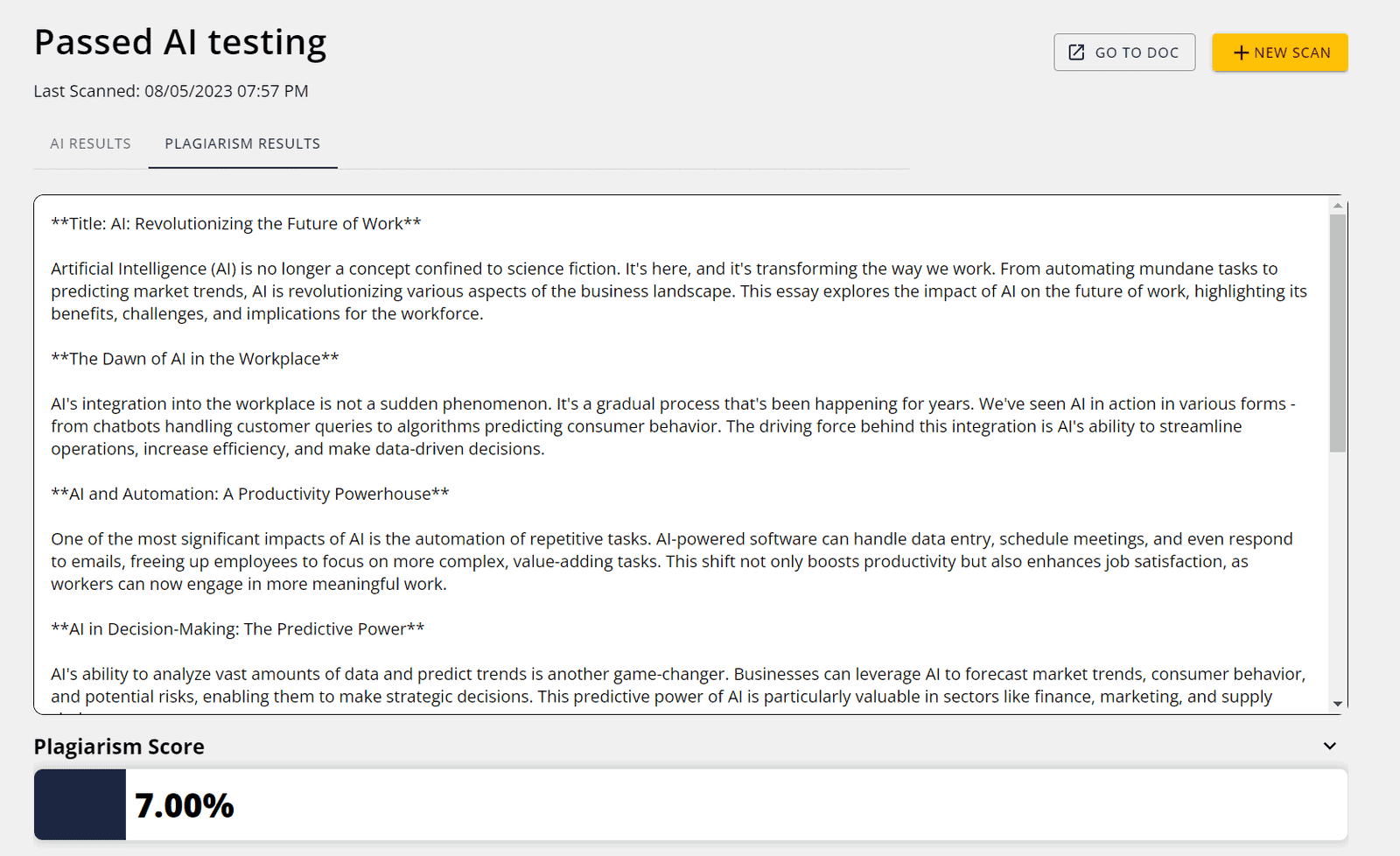

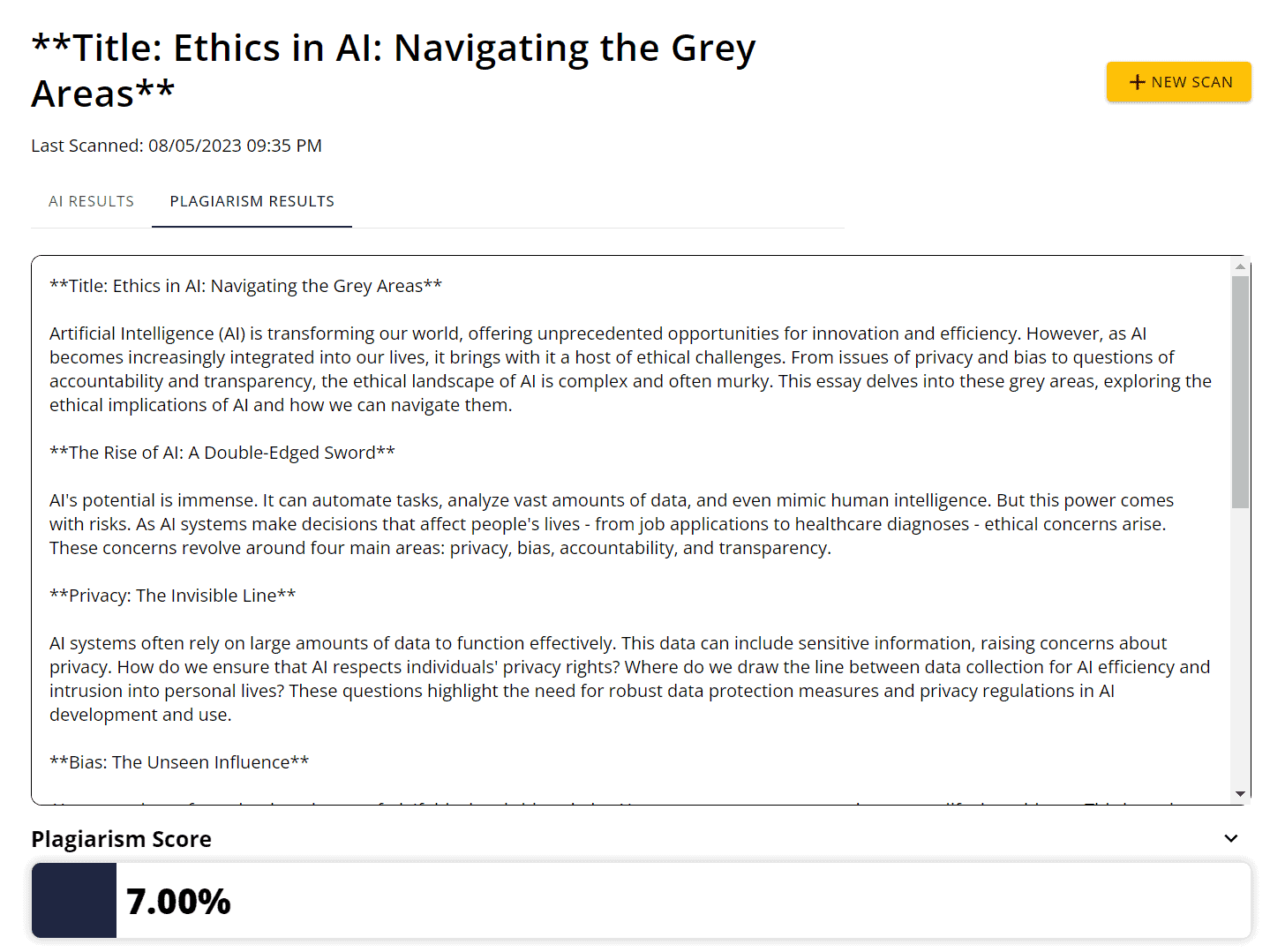

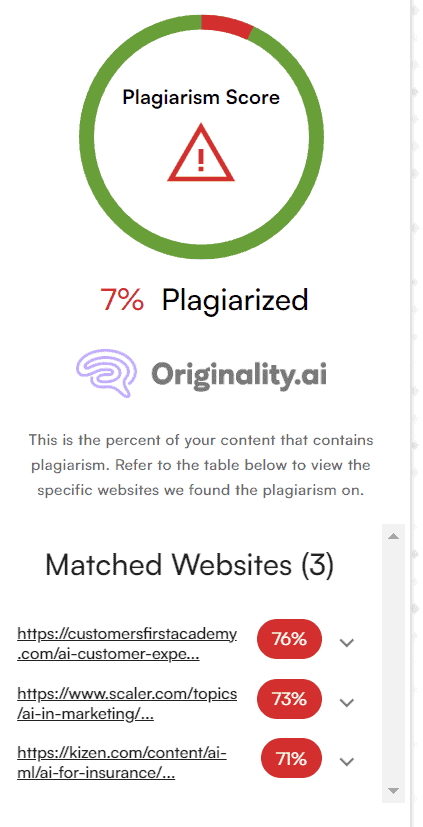

Now the “Plagiarism Results” tab:

7% plagiarism — standard for ChatGPT.

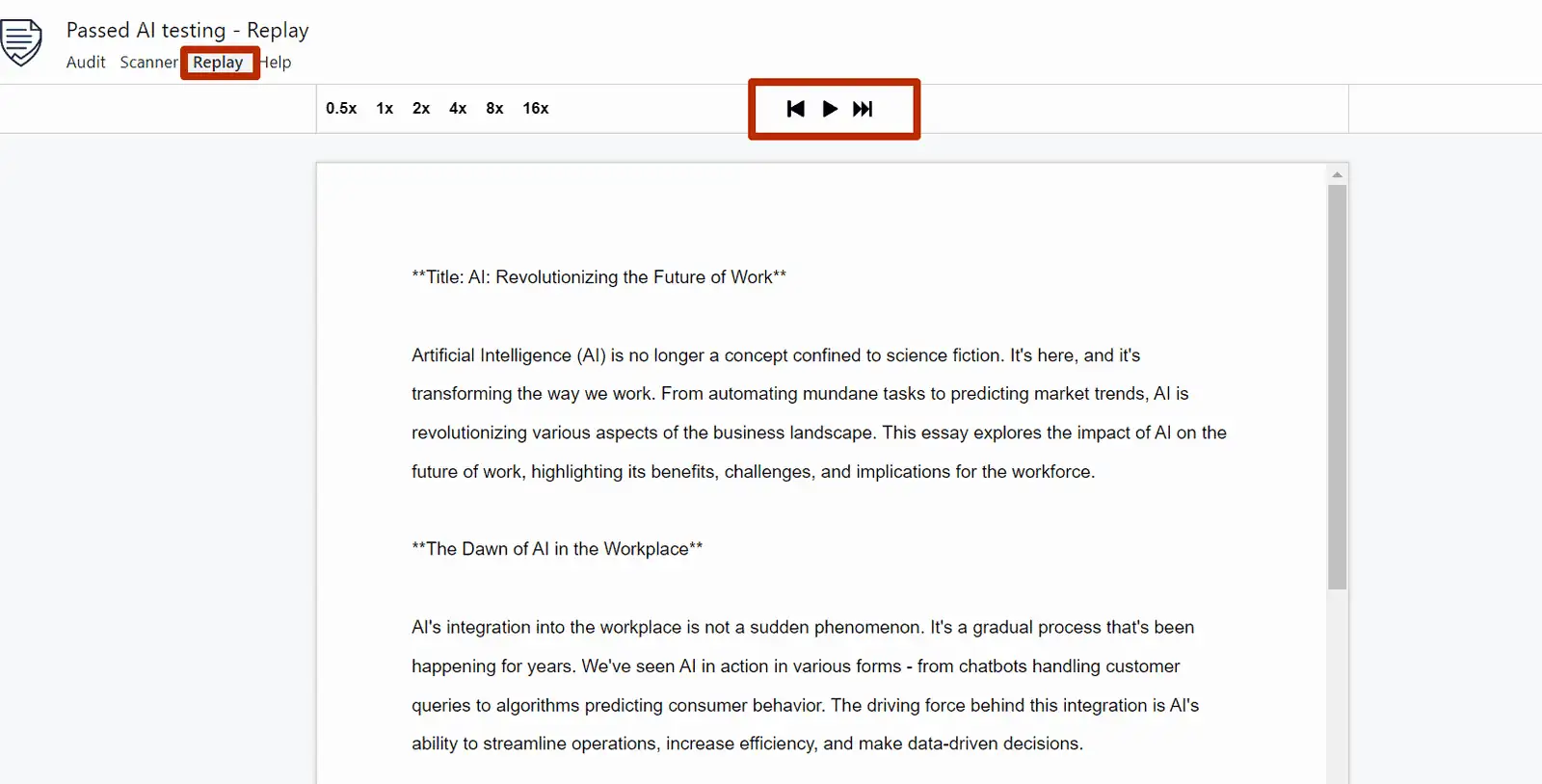

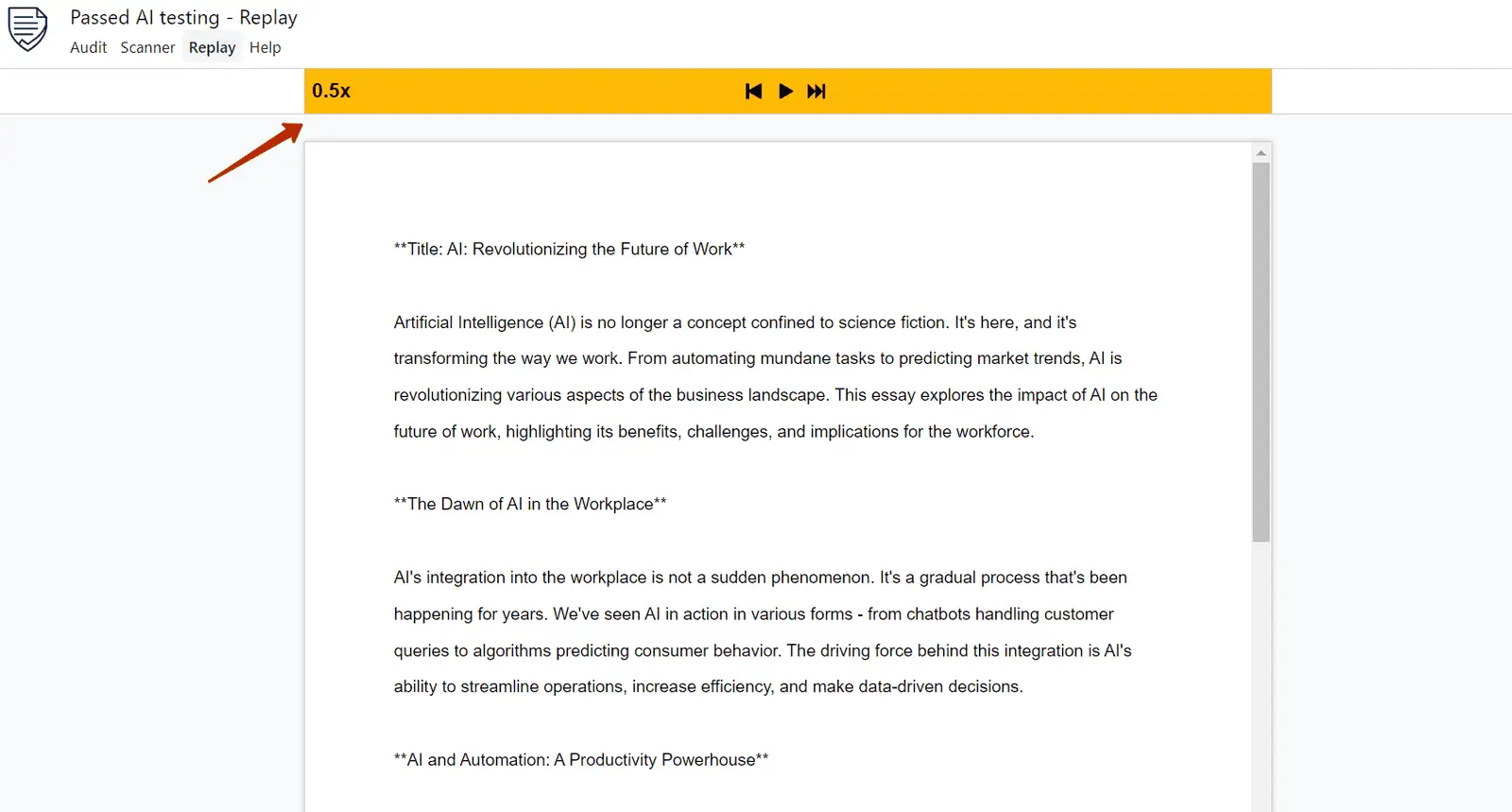

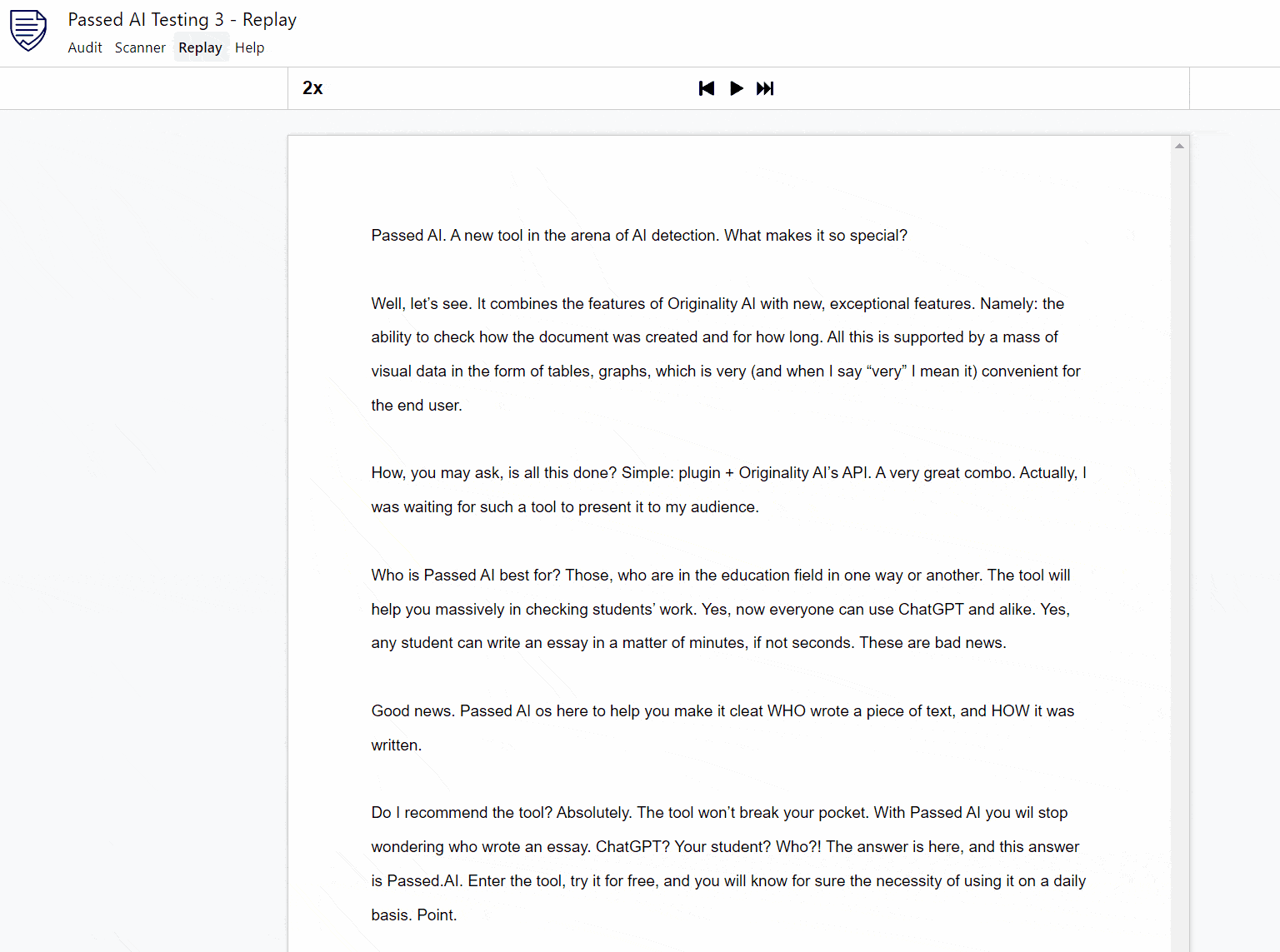

Peep the clutch Replay Tool (everything in one spot, so convenient):

Hit Play and watch the doc changes unfold. For me it’s obvious: done in one sec. Up top you can tweak playback speed:

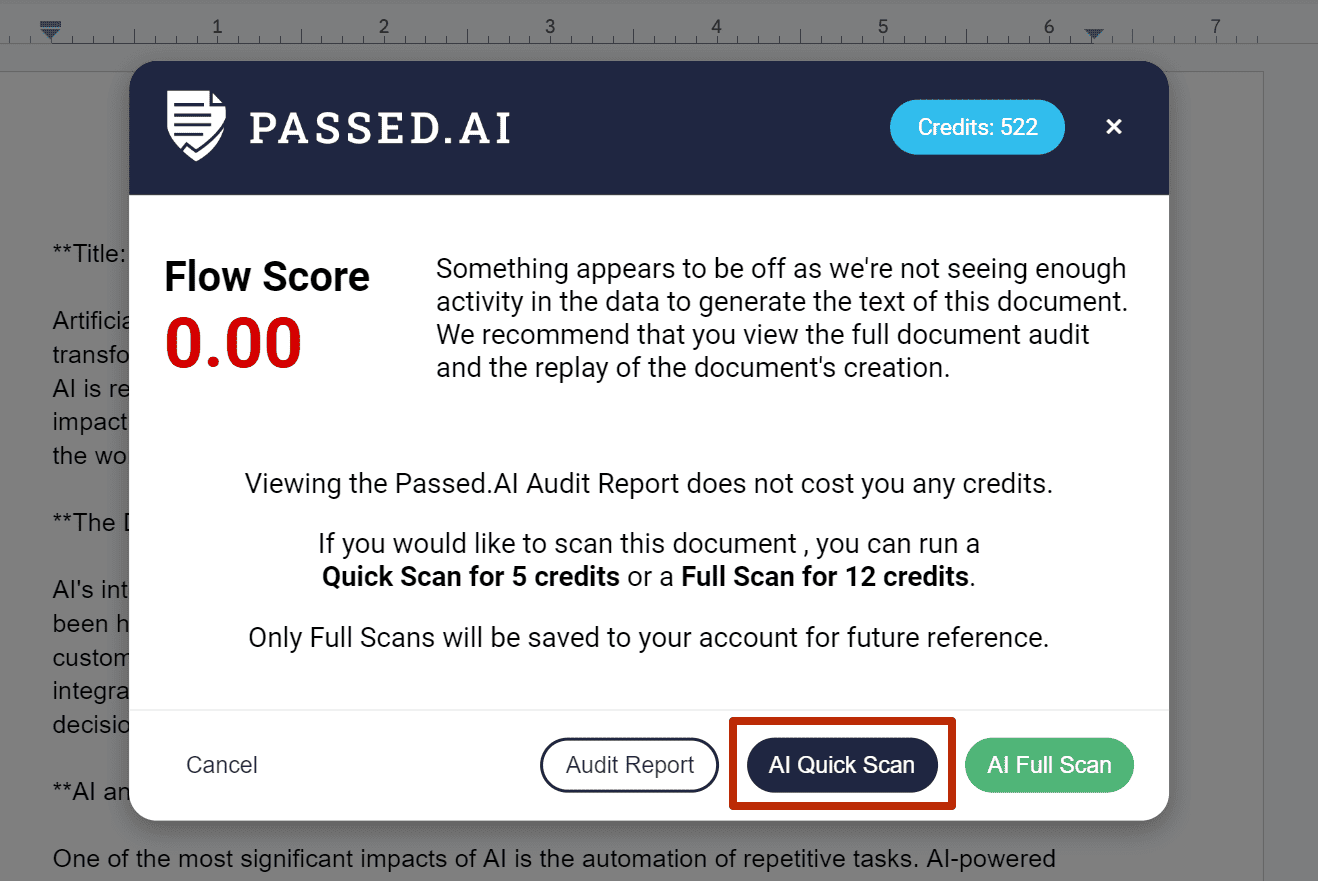

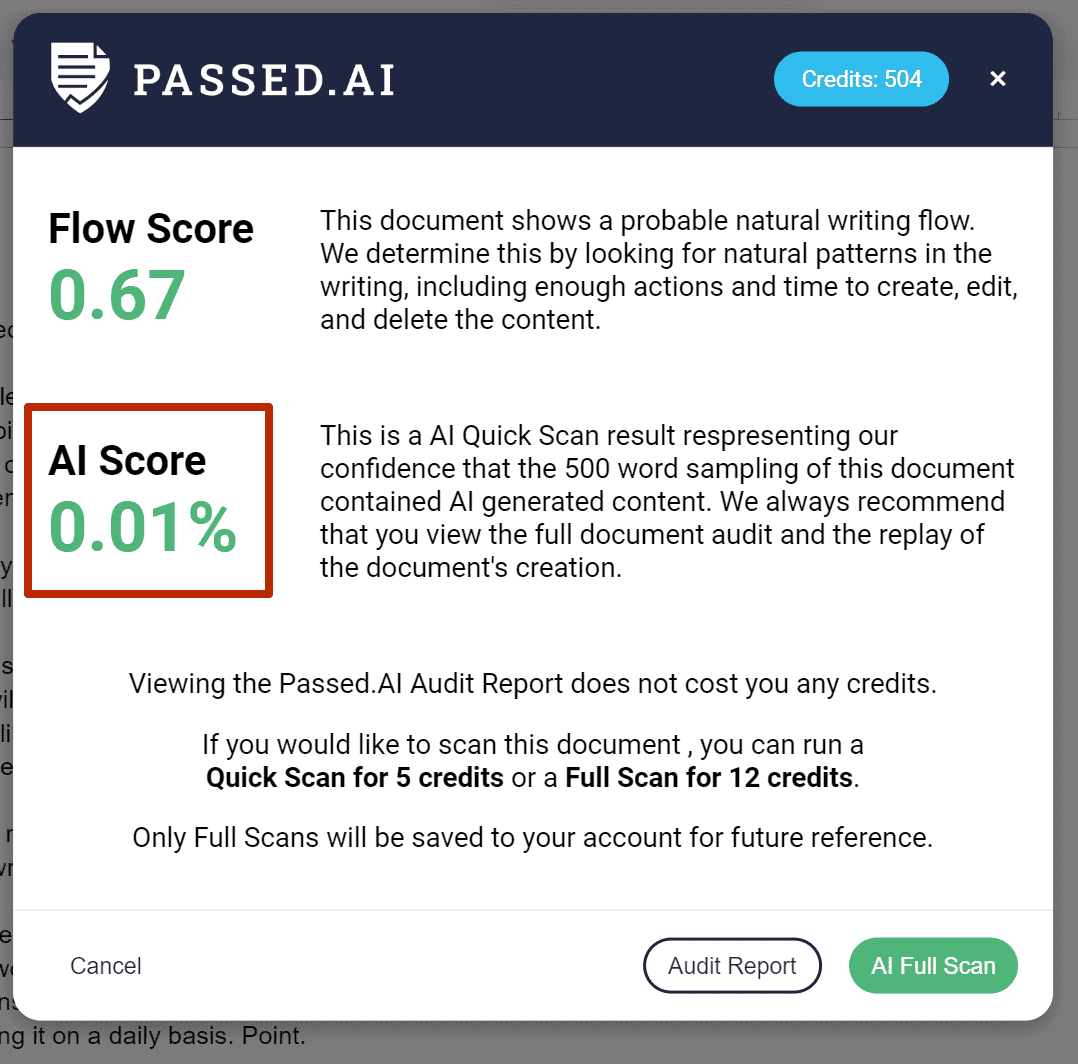

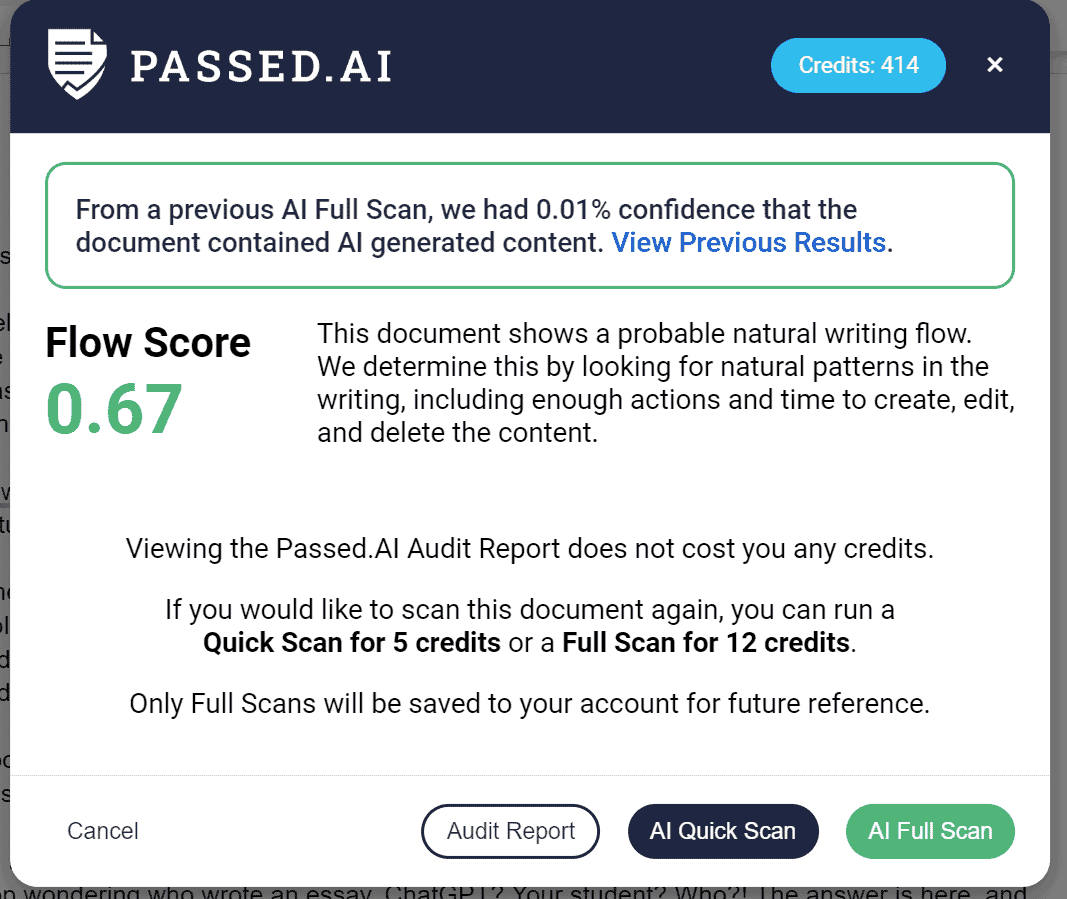

Now back to the main doc. Remember we clicked “Audit Report”. Now let’s try “AI Quick Scan”:

A few seconds and the plugin spits out data:

AI Score: 100%. Quick Scan is clutch for rapid checks. It analyzes Flow Score, Actions, Long Inserts, and Total Duration, then gives a verdict. It uses fewer credits since it’s not a full scan. Sweet.

If we press AI Full Scan, we’re back on the tab to thoroughly audit for AI.

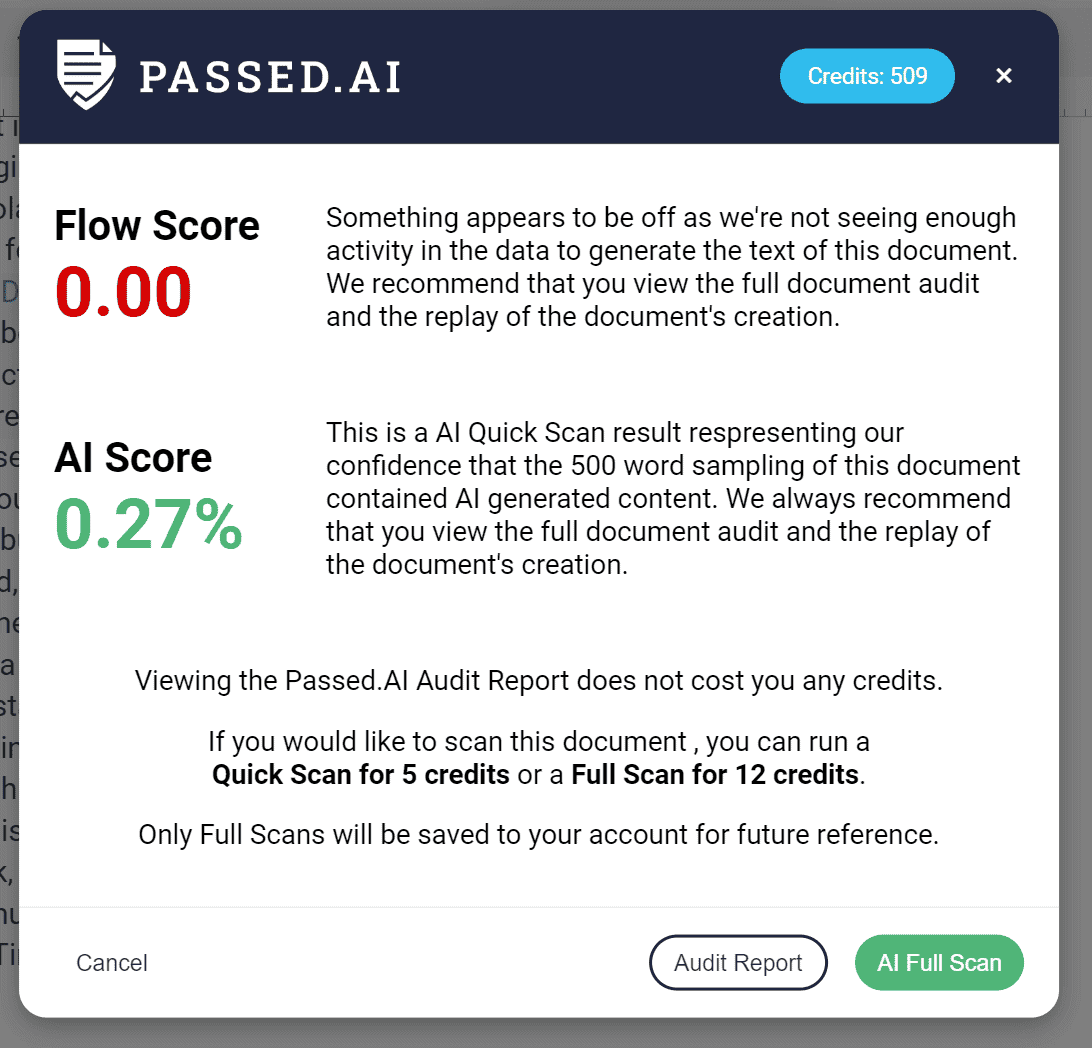

Let’s try another scenario: I’ll insert my own text, click Quick Scan and here’s what I get:

Despite the copy/paste, it detects my human writing — not AI.

The Audit Report, on the other hand, shows all the signs of a copy/paste (just like the examples above).

Don’t rely on the Audit Report alone. Before accusing a student of AI writing, run at least a Quick Scan. Here it clearly shows the text isn’t AI-generated. But for total certainty, fully scan the text.

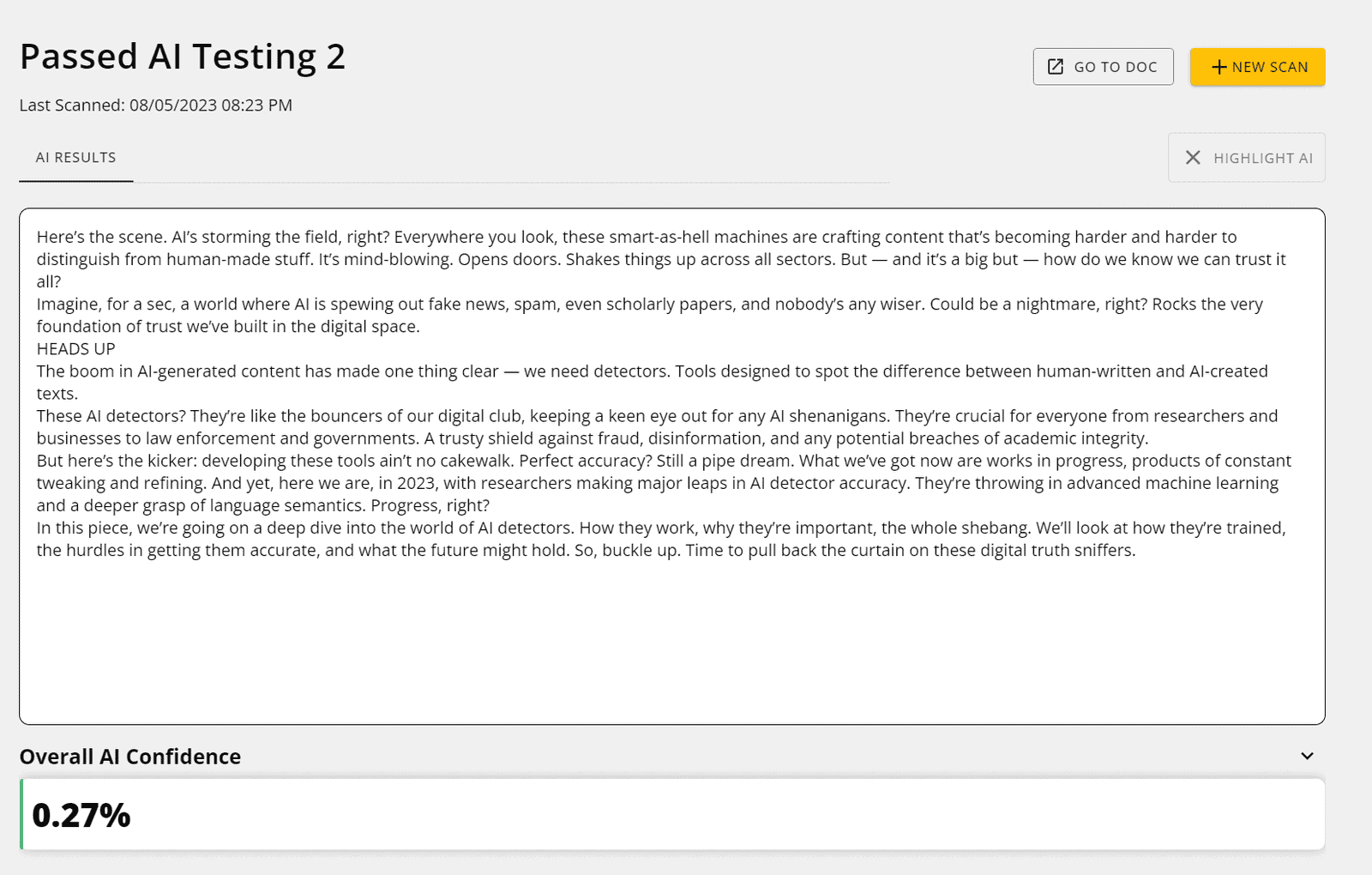

And the outcome:

Unchanged result. I think this pro tip will help you down the road.

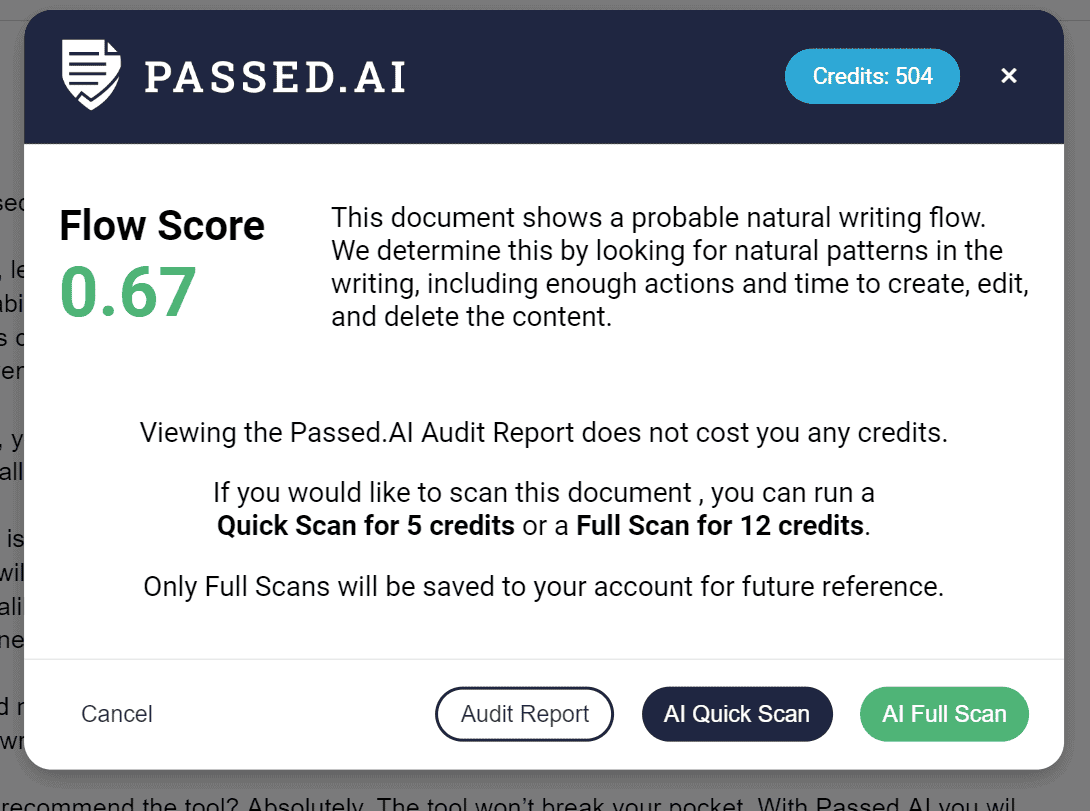

One more experiment: let’s test the Passed AI plugin live. I’ll write a piece of content myself in Google Docs with the plugin on and see what happens.

Pause.

Okay, I just penned the text solo. No special techniques. Just checking how Passed AI rolls. Let’s go.

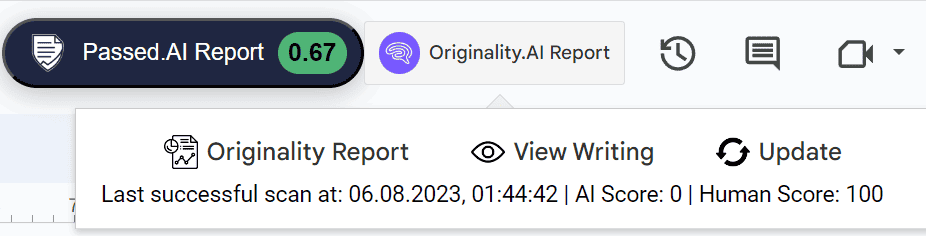

First check, the Flow Score, shows I wrote at my own HUMAN pace. Dope. Before the full audit, let’s do an AI quick scan.

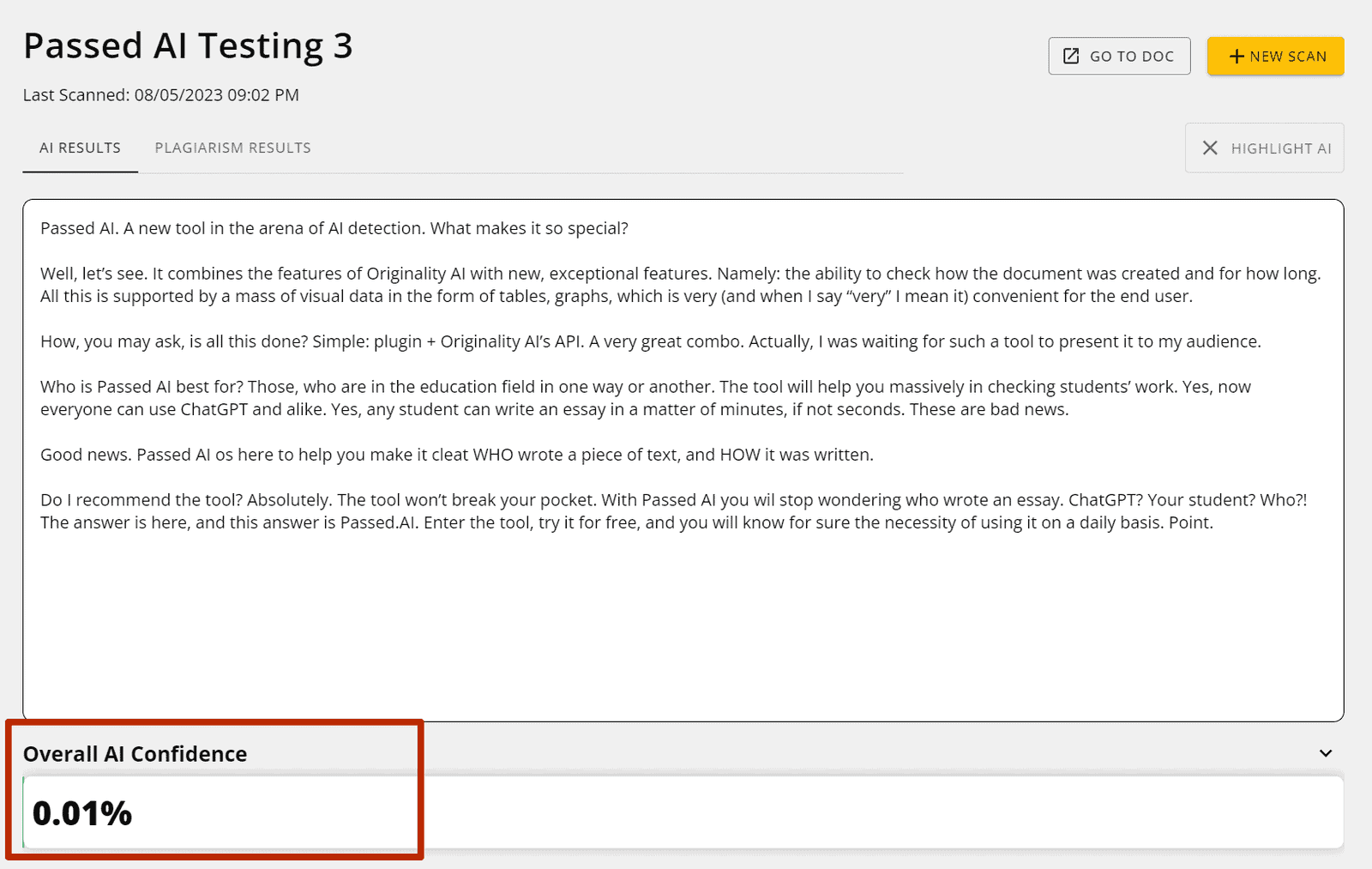

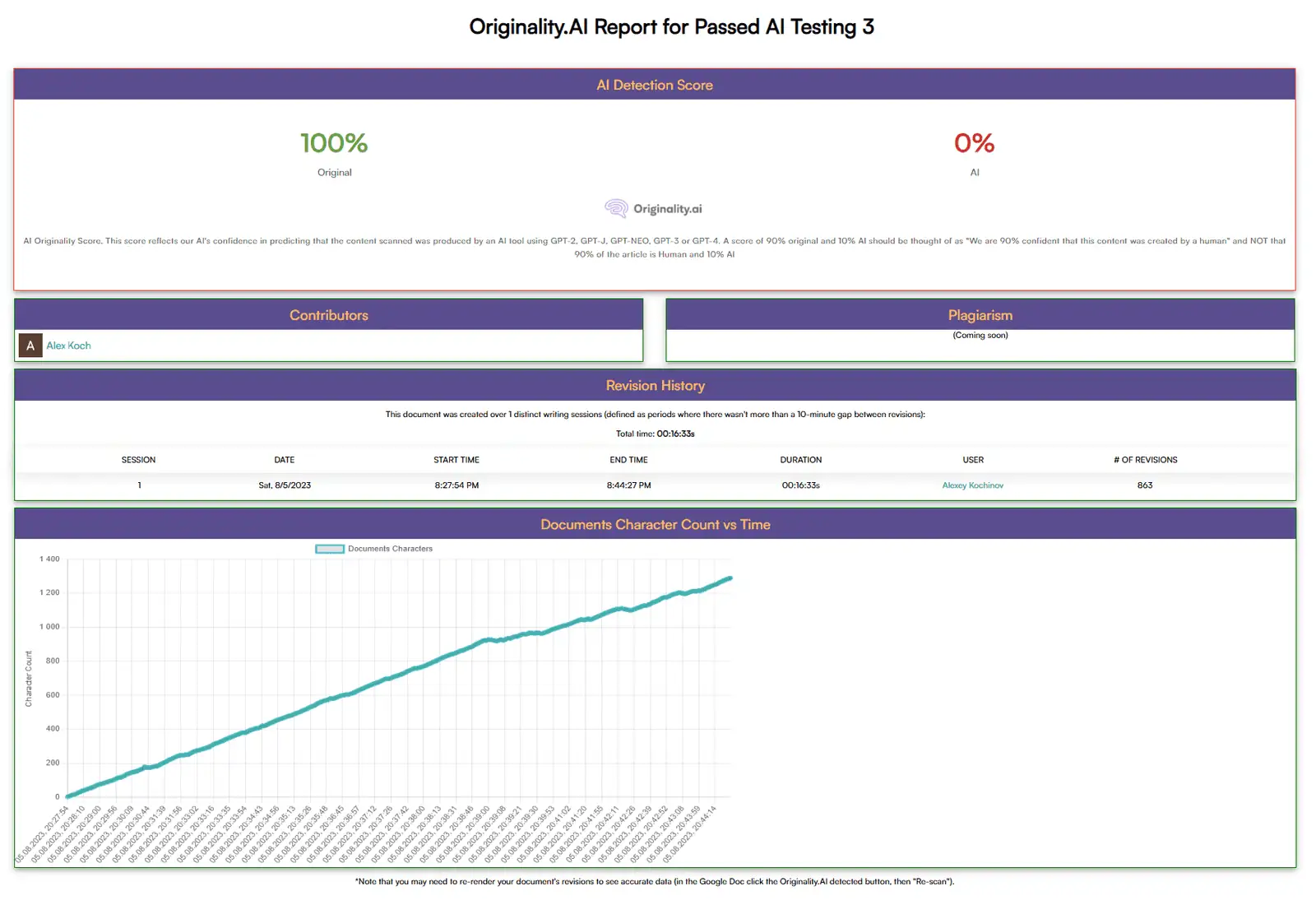

For the quick check, AI Score: 0.01%. Bangin’. Because that’s facts. Now, a more holistic audit (audit report):

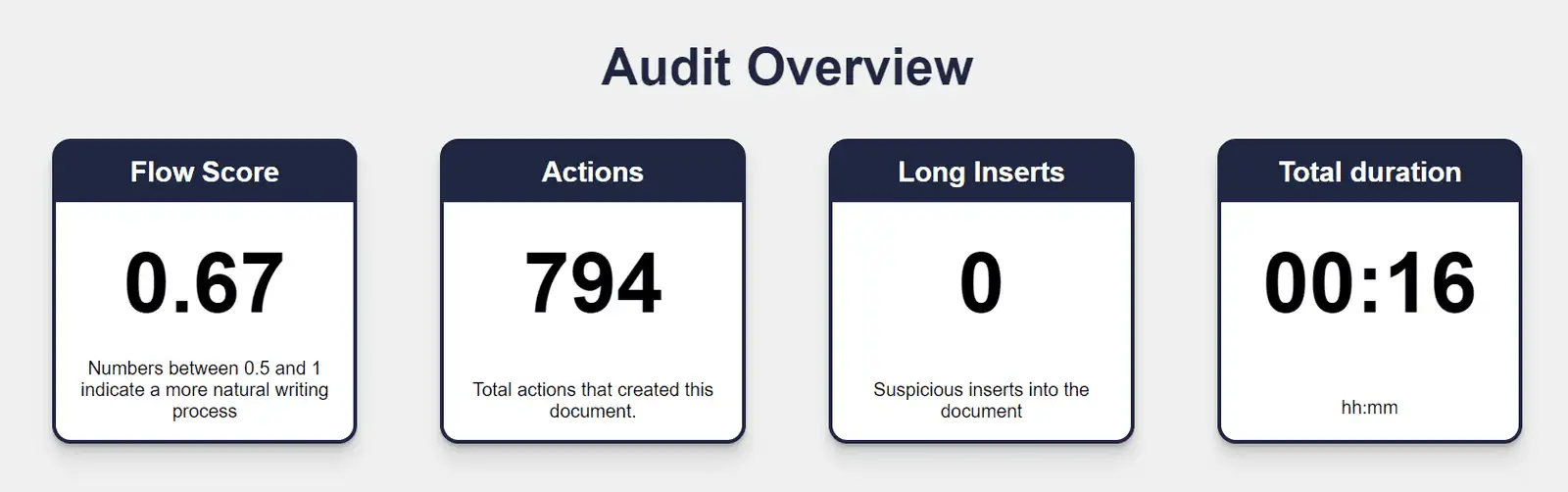

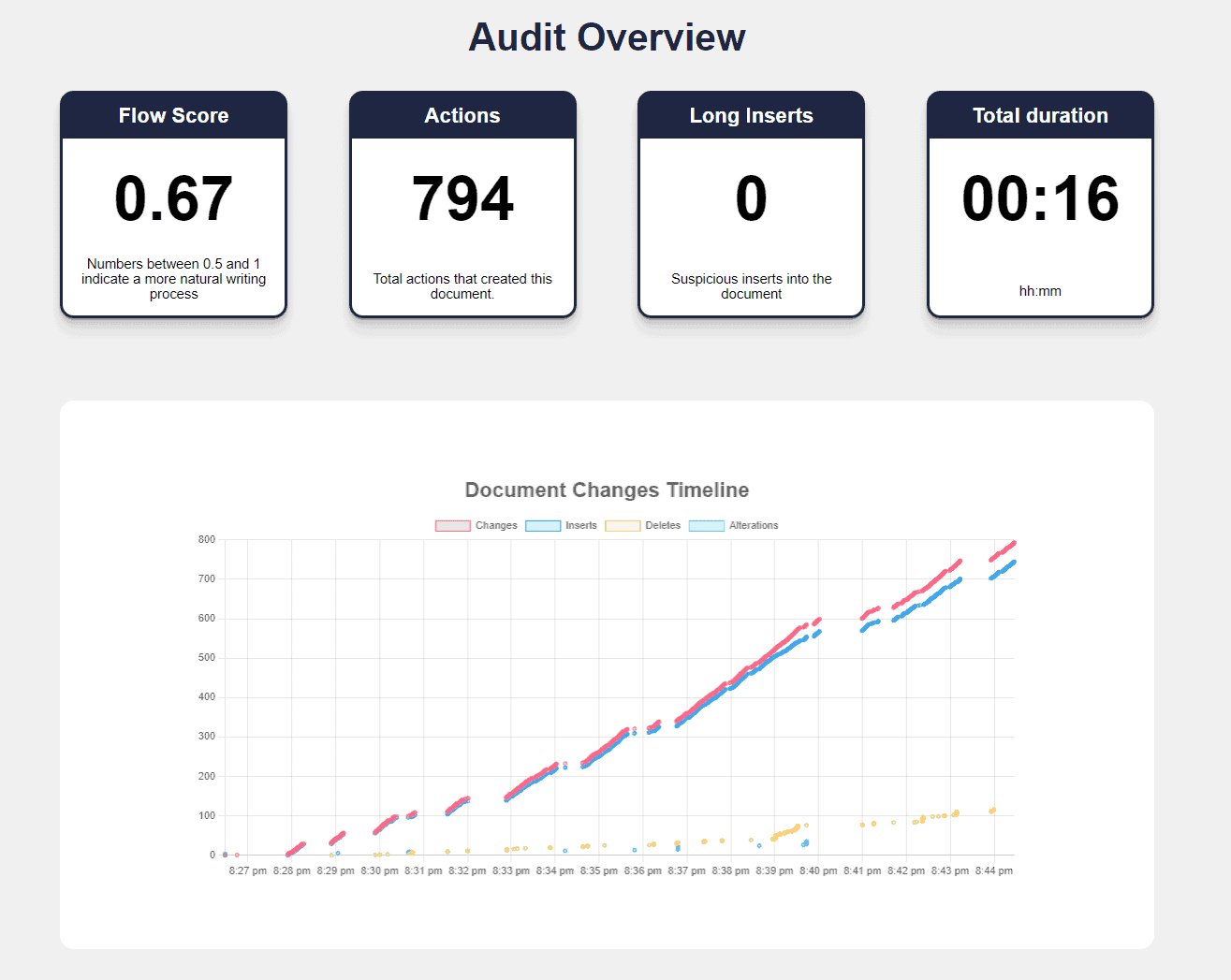

Let’s break this down:

- Flow Score: 0.67. Written organically, legit.

- Actions: 794. Remember the first test? Just 3. Here, a massive 794, proving human authorship — not AI.

- Long Inserts: 0. Accurate, didn’t copy/paste anything.

- Total Duration: 16 minutes. Yup, exactly how long it took to create the document.
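To put the two audits side by side, here’s a minimal Python sketch that checks each one against the warning signs from the reading guide above: a Flow Score below the typical human range, very few Actions, and any Long Inserts. `AuditSummary`, `red_flags`, and the 50-action cutoff are hypothetical; only the metric values come from my two tests.

```python
from dataclasses import dataclass

MIN_ACTIONS = 50  # HYPOTHETICAL cutoff for "suspiciously few edits" in a full essay


@dataclass
class AuditSummary:
    """Hypothetical container for a few Audit Report metrics."""
    flow_score: float
    actions: int
    long_inserts: int


def red_flags(audit: AuditSummary) -> list[str]:
    """Return the warning signs from the reading guide that this audit triggers."""
    flags = []
    if audit.flow_score < 0.5:  # below where most human-written docs land
        flags.append("flow score below typical human range")
    if audit.actions < MIN_ACTIONS:  # few edits for a whole document
        flags.append("very few actions")
    if audit.long_inserts > 0:  # at least one likely copy/paste
        flags.append("long inserts present")
    return flags


pasted_essay = AuditSummary(flow_score=0.0, actions=3, long_inserts=1)  # Round 1
my_text = AuditSummary(flow_score=0.67, actions=794, long_inserts=0)    # this test
print(red_flags(pasted_essay))  # all three flags
print(red_flags(my_text))       # []
```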

As you can see, with human writing, there’s zilch to call out. But a couple more metrics on this page:

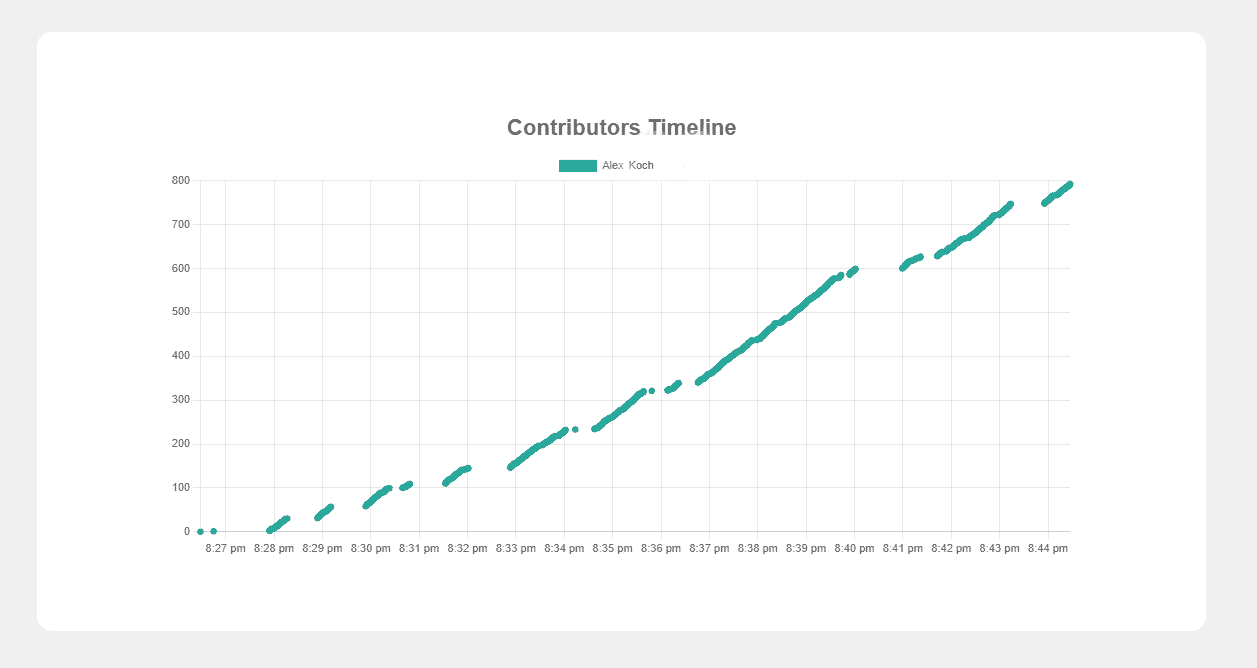

The Document Changes Timeline displays the clear trajectory of how the document was created. More proof of human authorship.

Long Inserts: empty column. Logical, didn’t copy/paste anything.

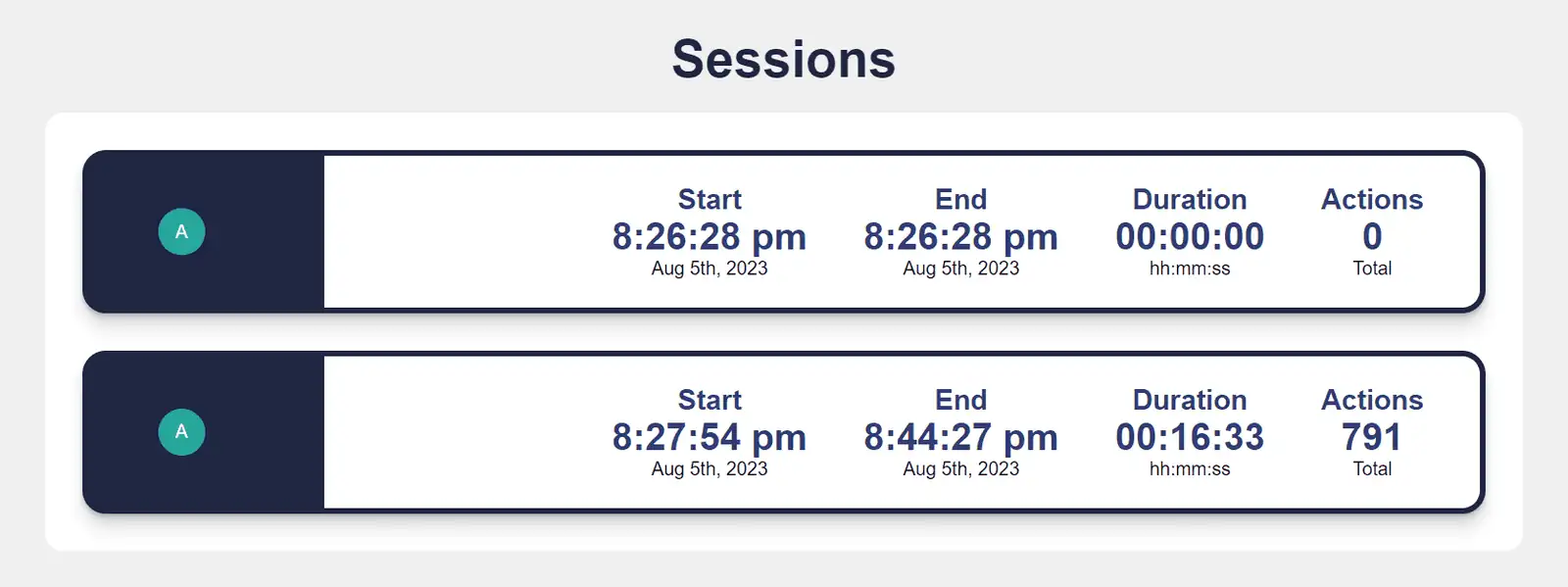

Peep the sessions yourself. For some reason, the session count is slightly different in the second doc. But minor details don’t really matter. Essentially everything is reflected accurately.

For certainty, let’s check AI detection and plagiarism:

AI Confidence: 0.01%, meaning 99.99% human-written. Now plagiarism:

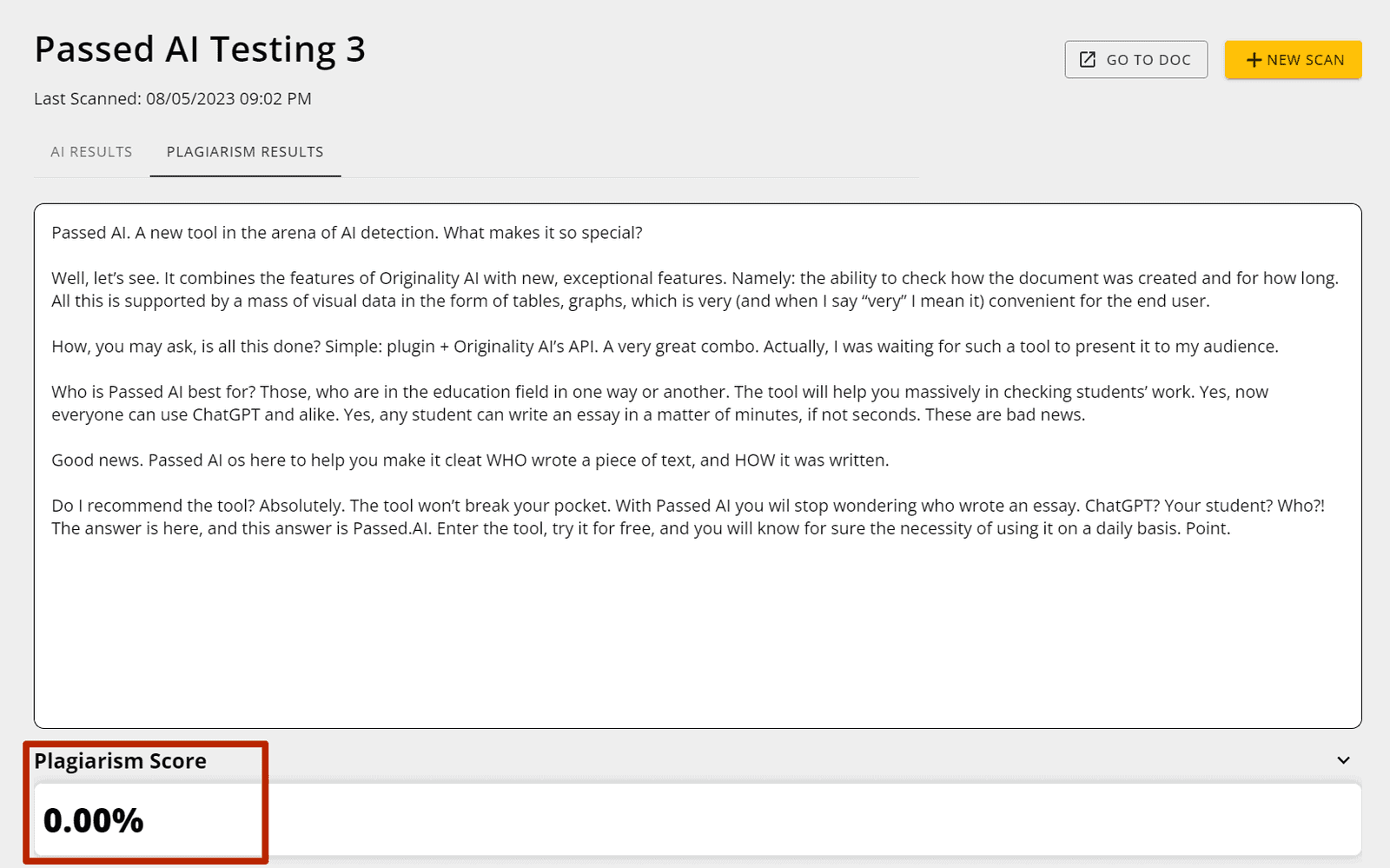

0% plagiarism. Anything else that needs proving here? Don’t think so.

And check out the money Replay Tool. Made this GIF just for you:

So that’s how to harness the plugin’s power. I led with this because it’s the most crucial element. Passed AI targets educators, and their plugin nails it. I’ve proven that here.

Next, we’ll test the Document Scanner on the main site. You never know, it might be more convenient. Given my faith in Originality AI, whose API Passed AI uses through a partnership, I have no doubts. Passed AI plans to integrate other AI detectors, which is interesting but potentially confusing.

My advice? Stick with Passed AI + Originality AI.

Now, the tests. 10 total. We’ll check texts for plagiarism and AI, using my credits without restraint. 5 texts from ChatGPT, 5 personal tests. We’ll also thoroughly compare plagiarism checks to other popular tools: Originality AI and Grammarly.

Round 2: Testing 5 ChatGPT essays (no prompt engineering)

Test 1:

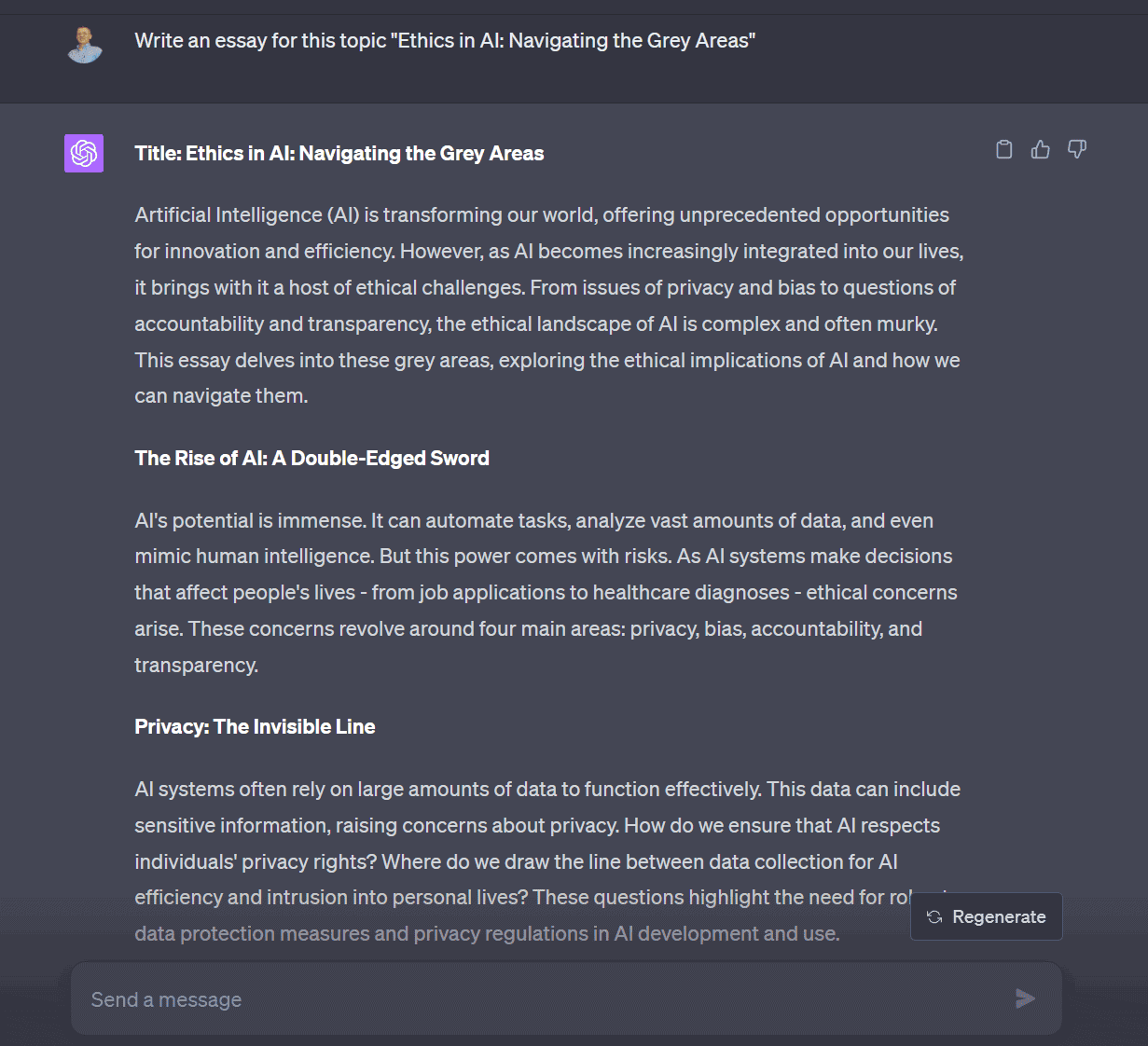

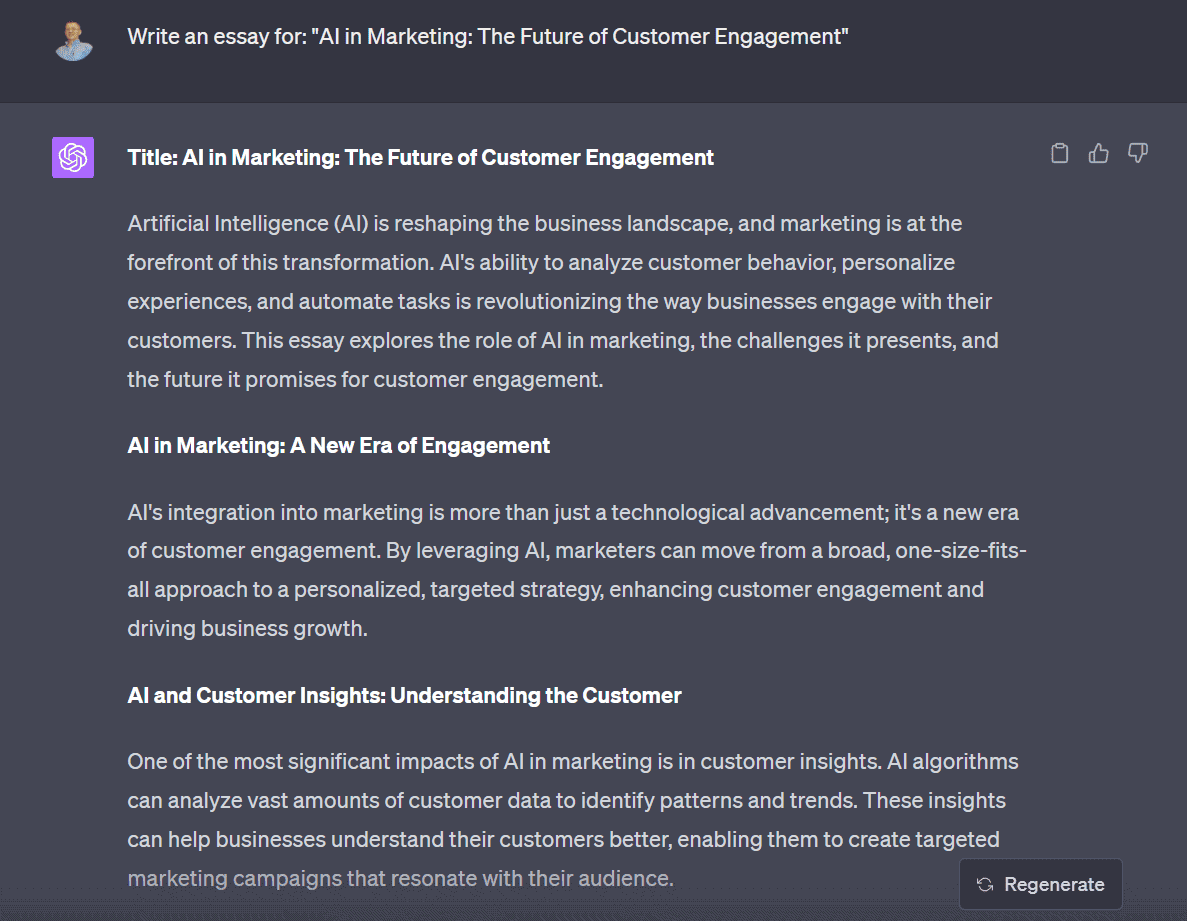

Here’s ChatGPT’s creation:

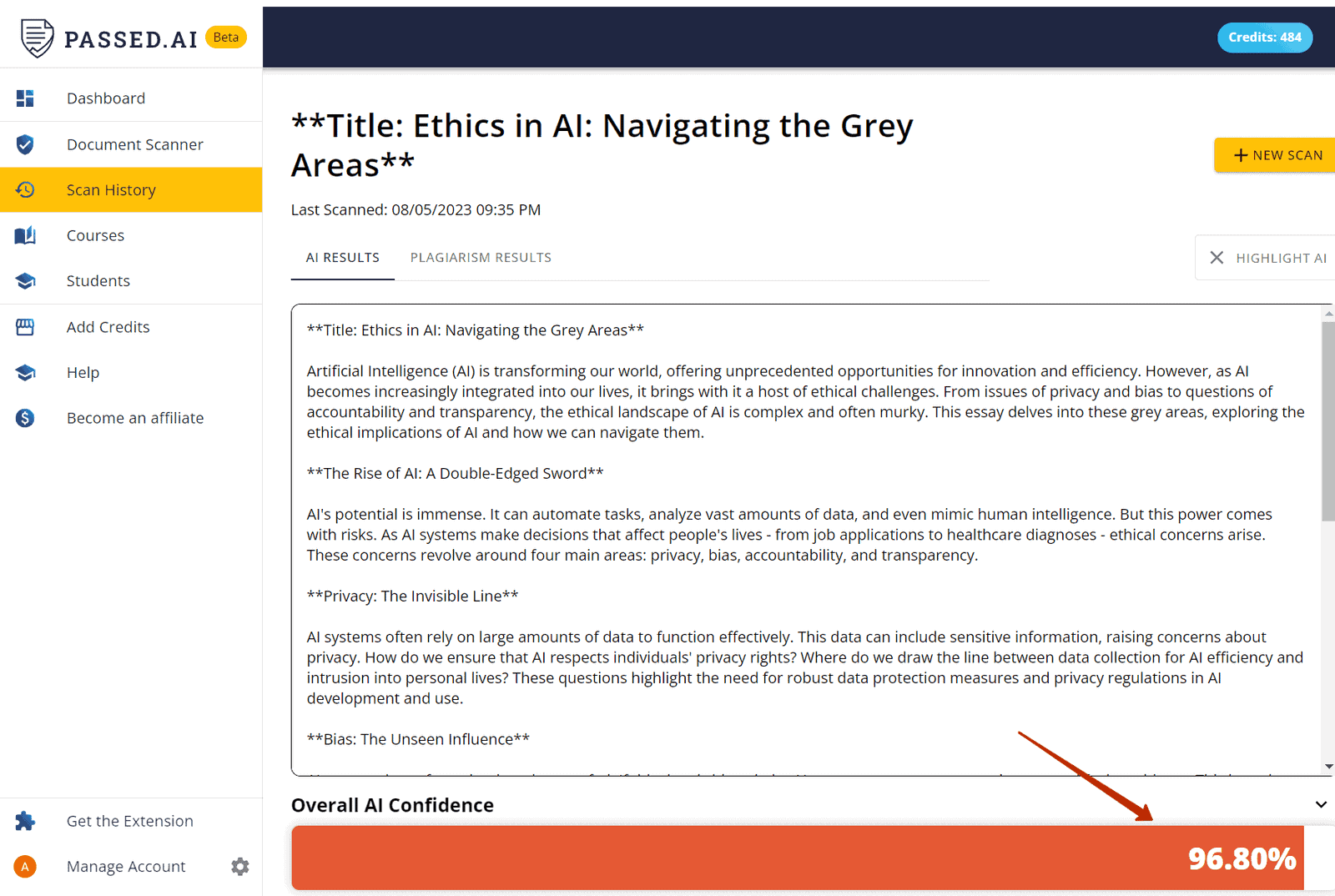

Let’s test:

AI Score: 96.8%.

Plagiarism: 7%.

Checks out logically.

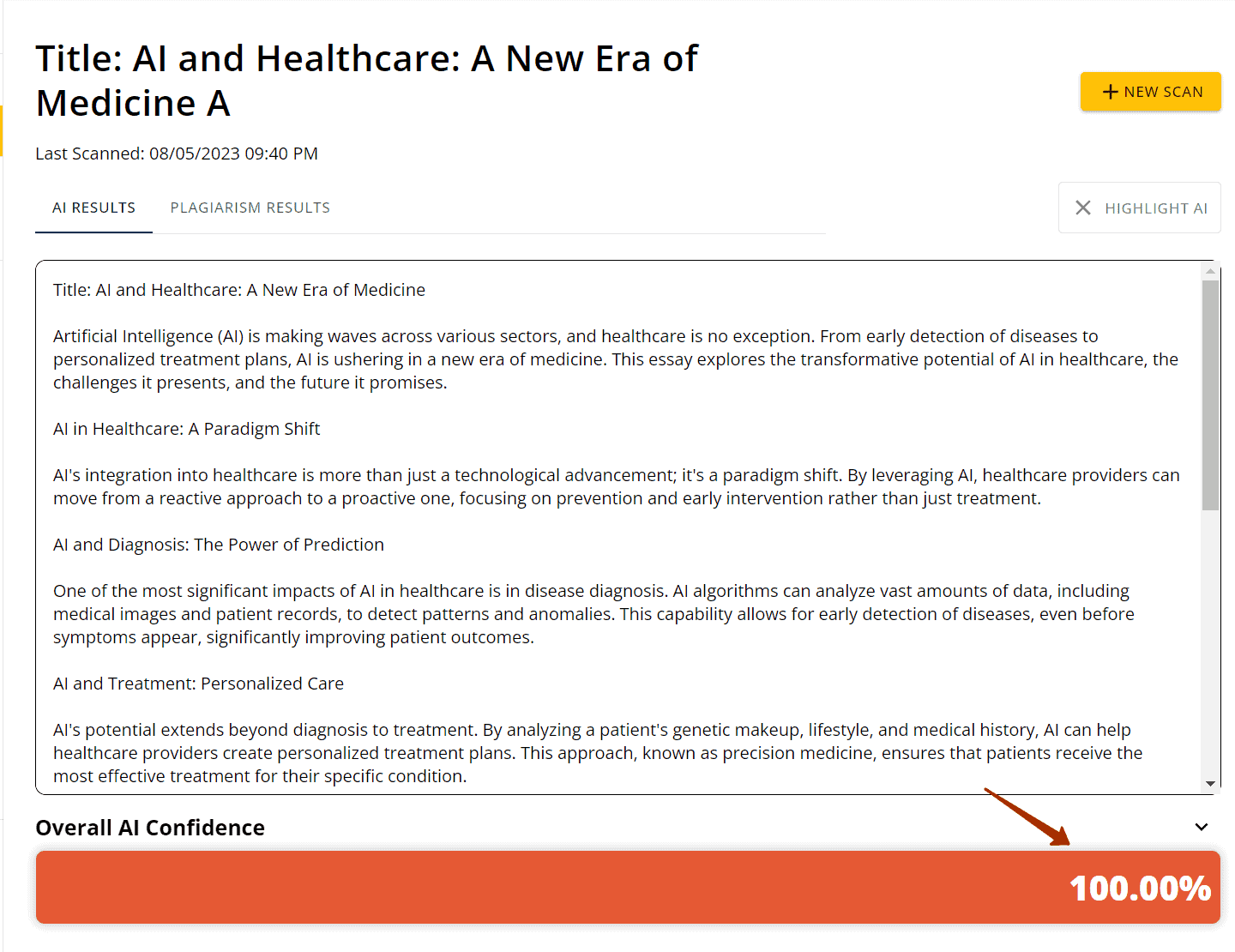

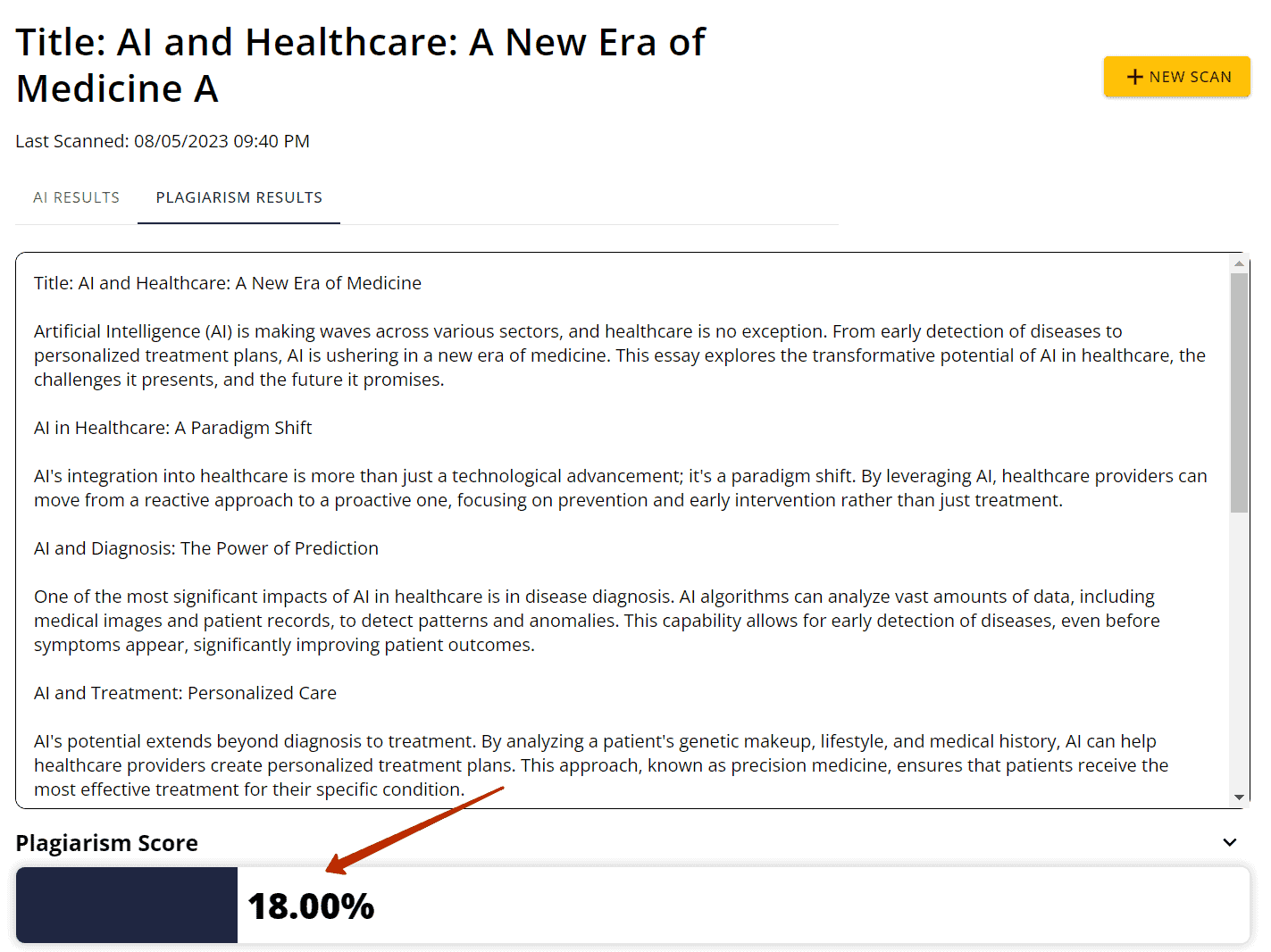

Test 2:

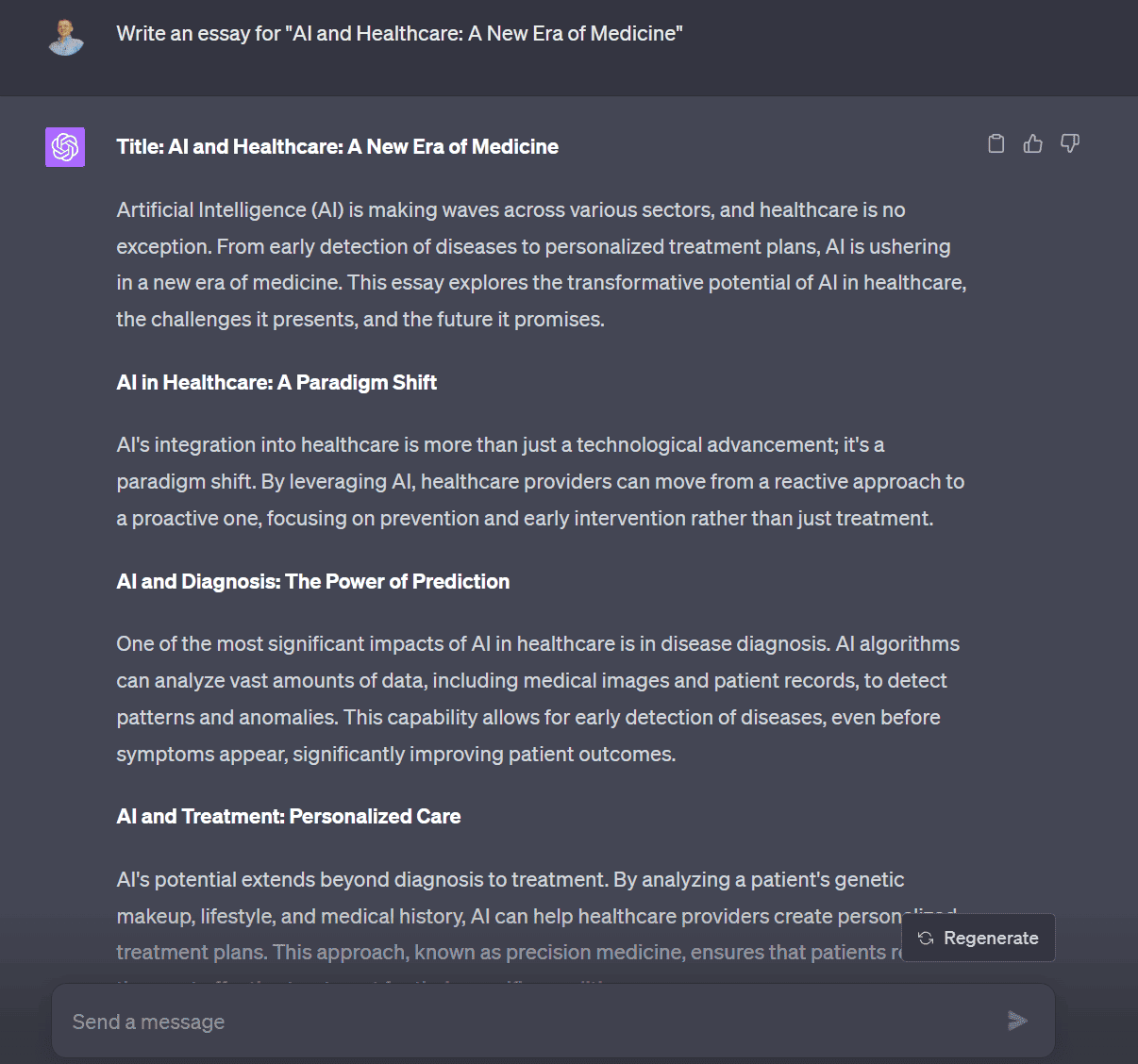

ChatGPT’s masterpiece:

Passed AI results:

100% AI text. Accurate.

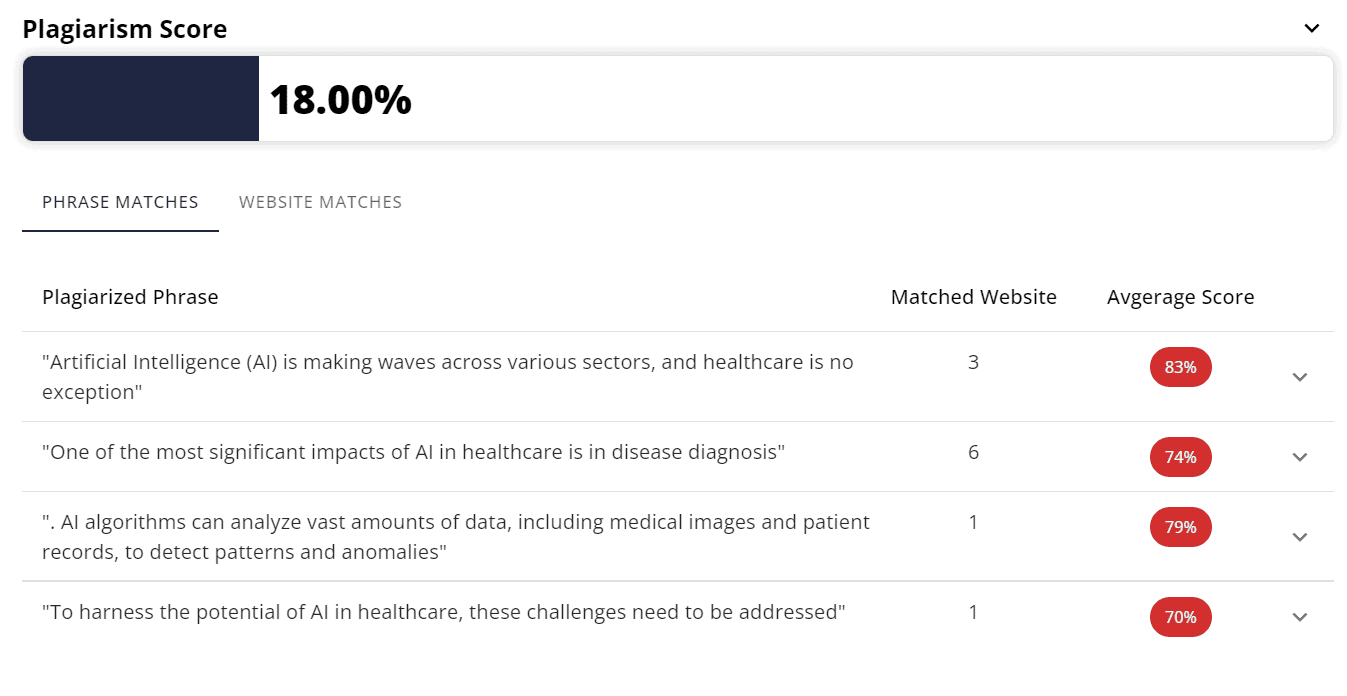

18% plagiarism this time. ChatGPT’s outdone itself.

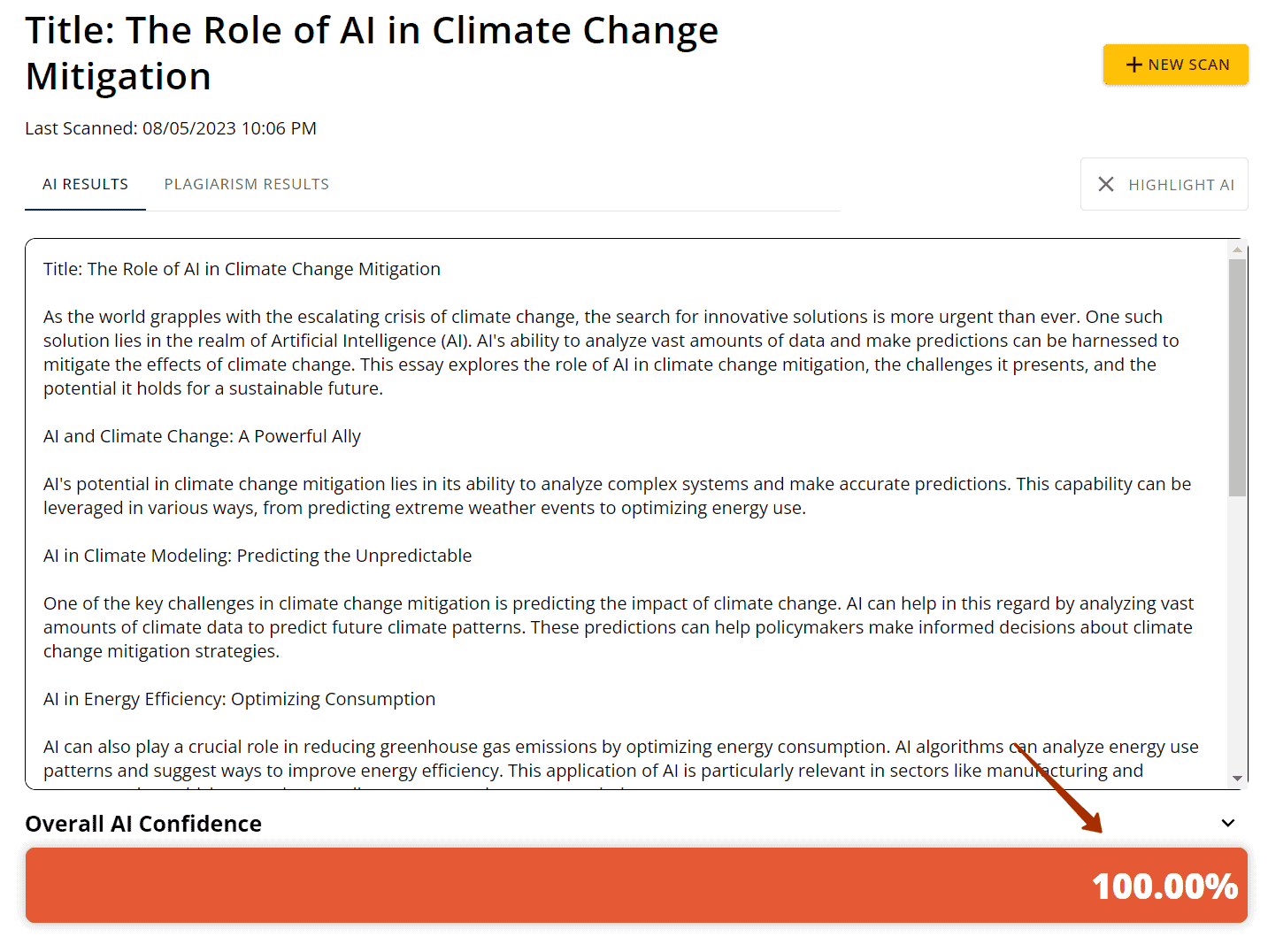

Test 3:

ChatGPT’s brainchild:

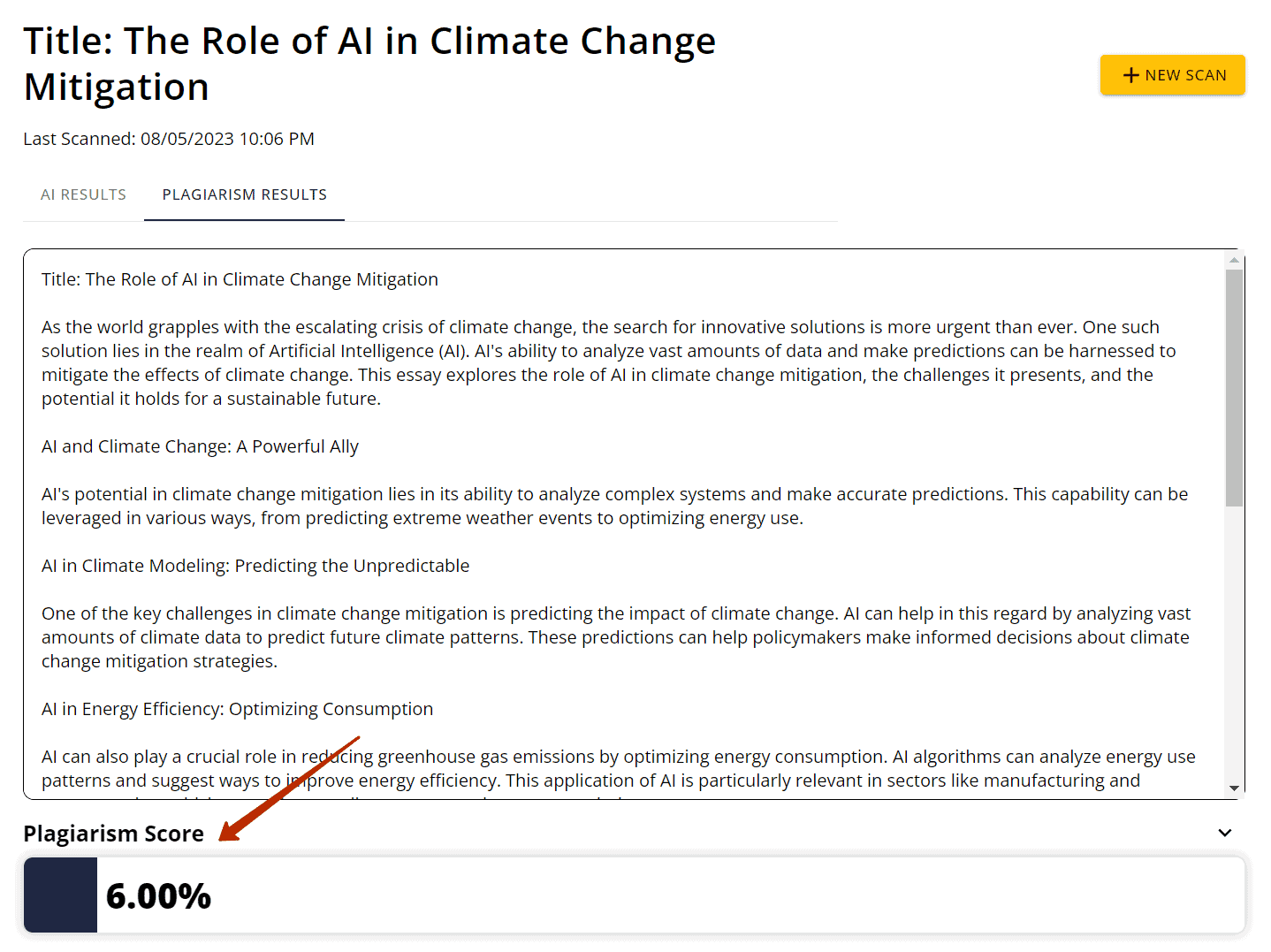

Passed AI test results:

100% AI score. Spot on.

Plagiarism: 6%. Sounds right.

Test 4:

Another ChatGPT gem:

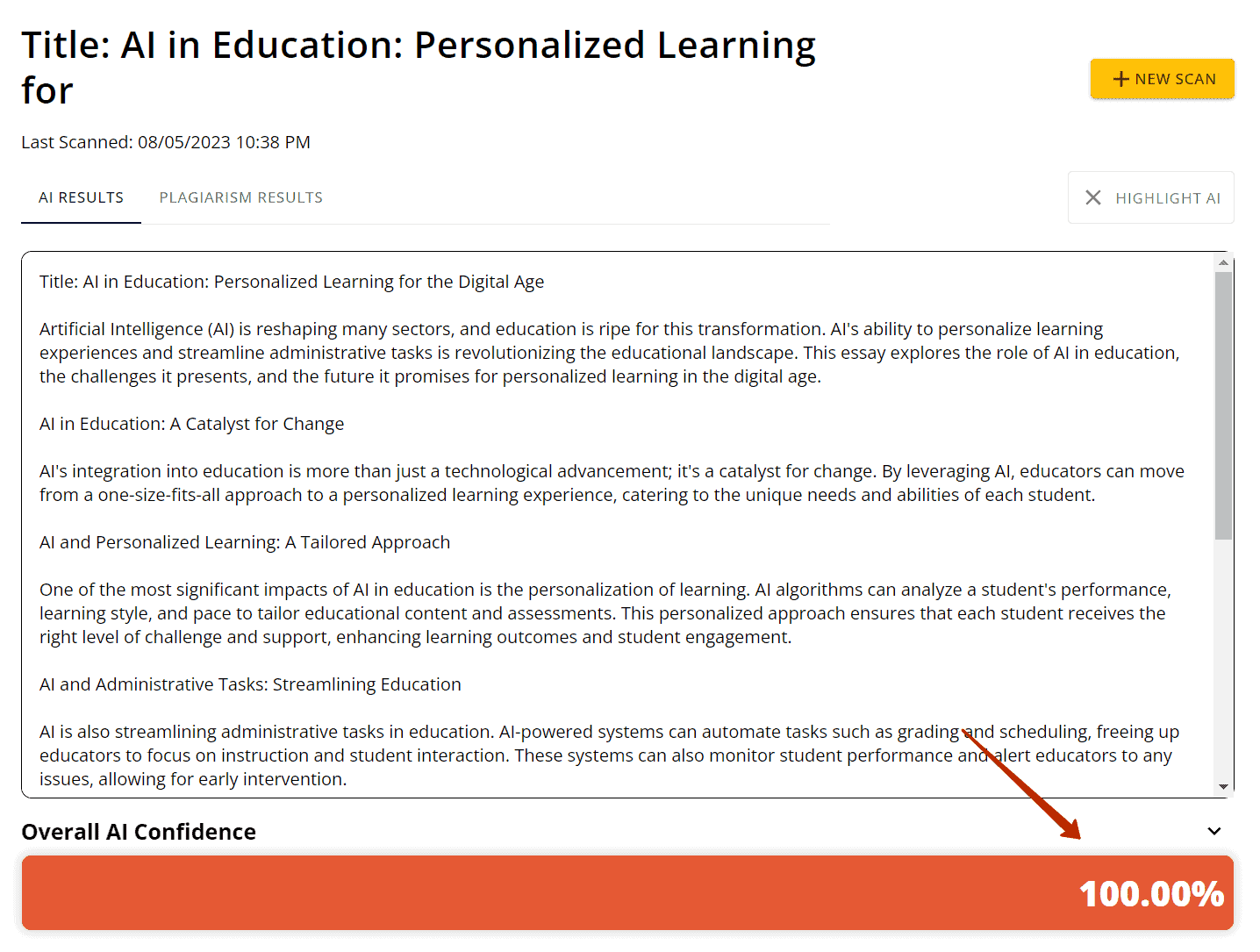

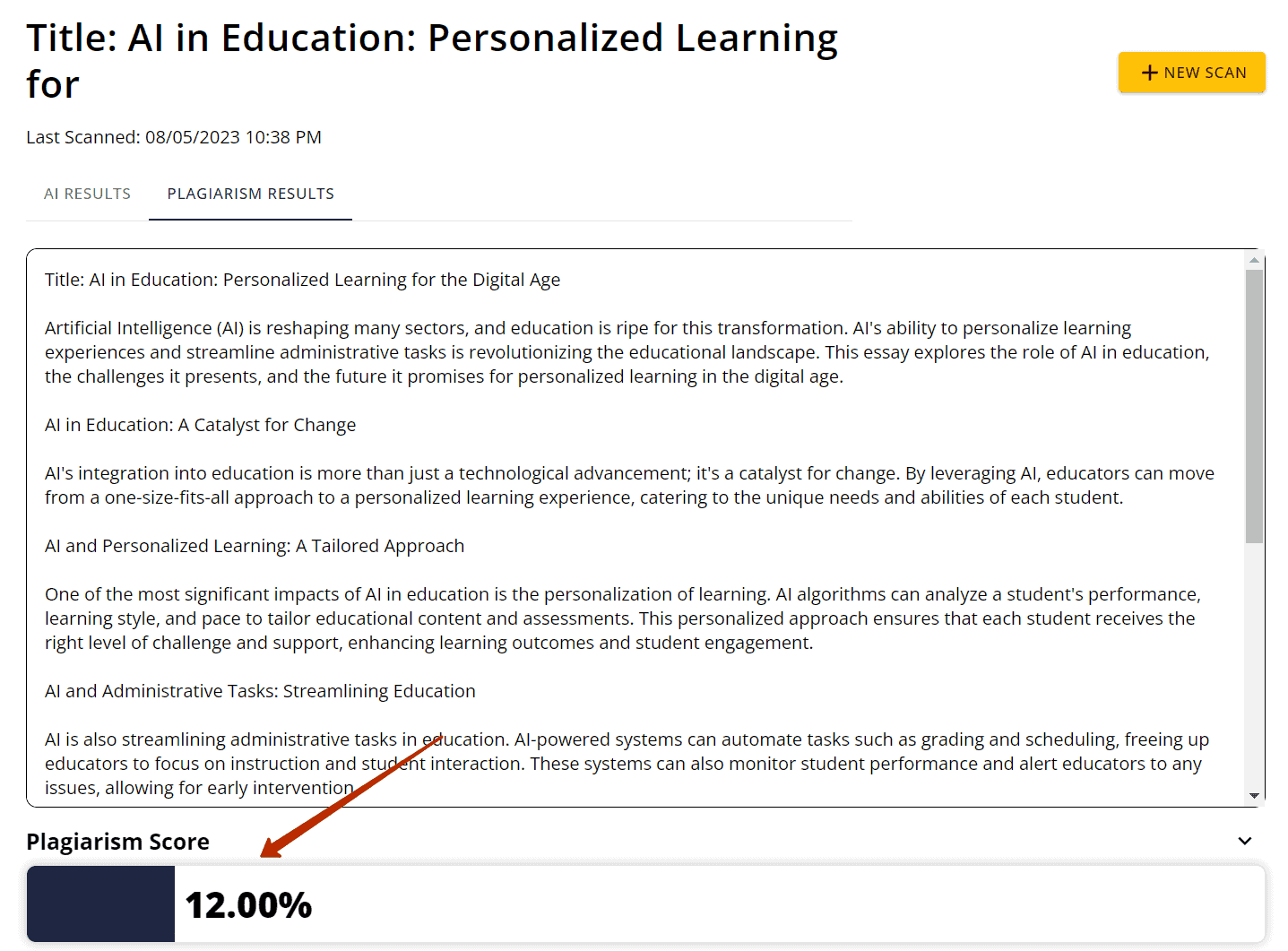

Now the Passed AI verification:

100% AI. Correct.

12% plagiarism, makes sense. More on this later.

Test 5:

ChatGPT’s creation:

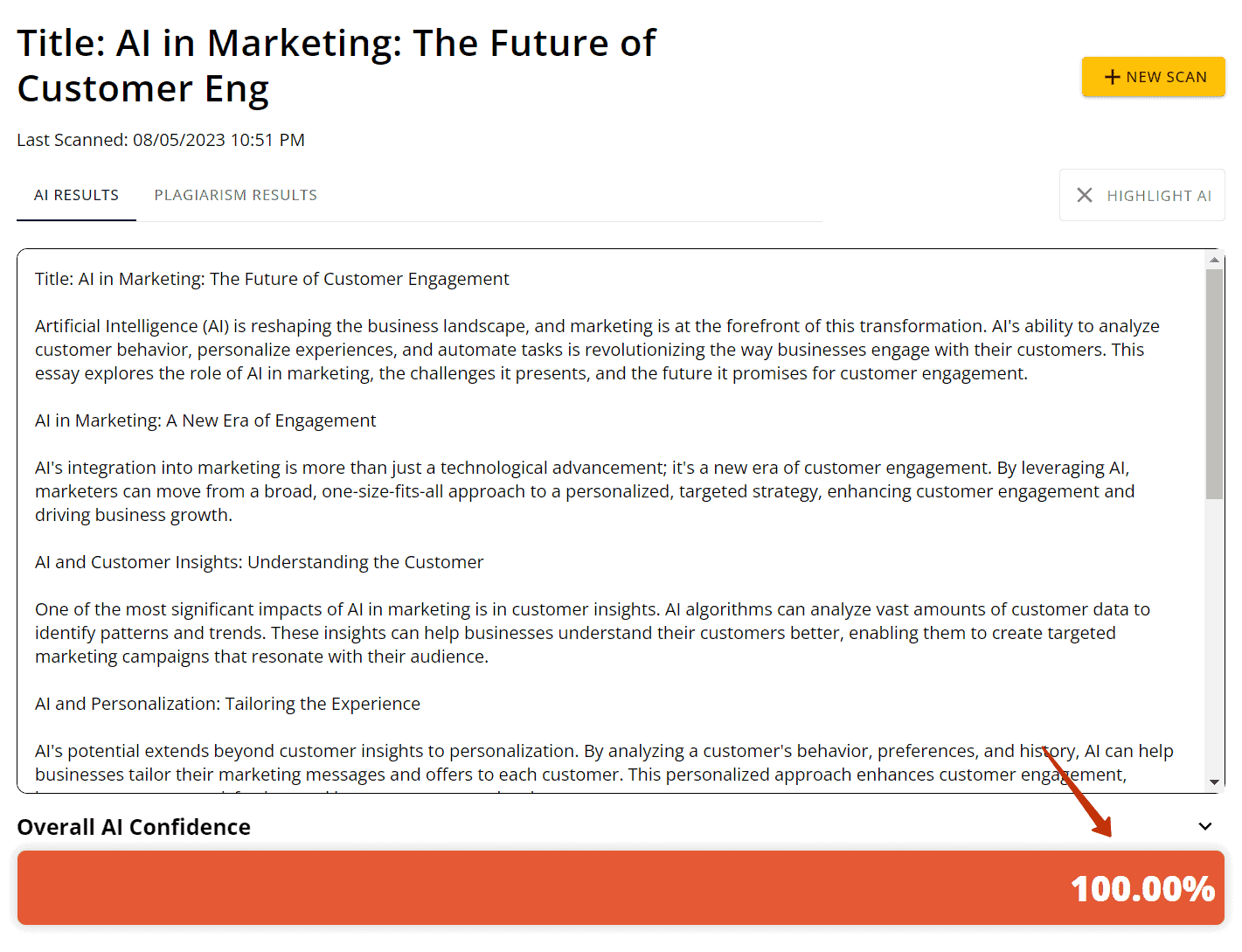

Passed AI scanning:

AI score: 100%.

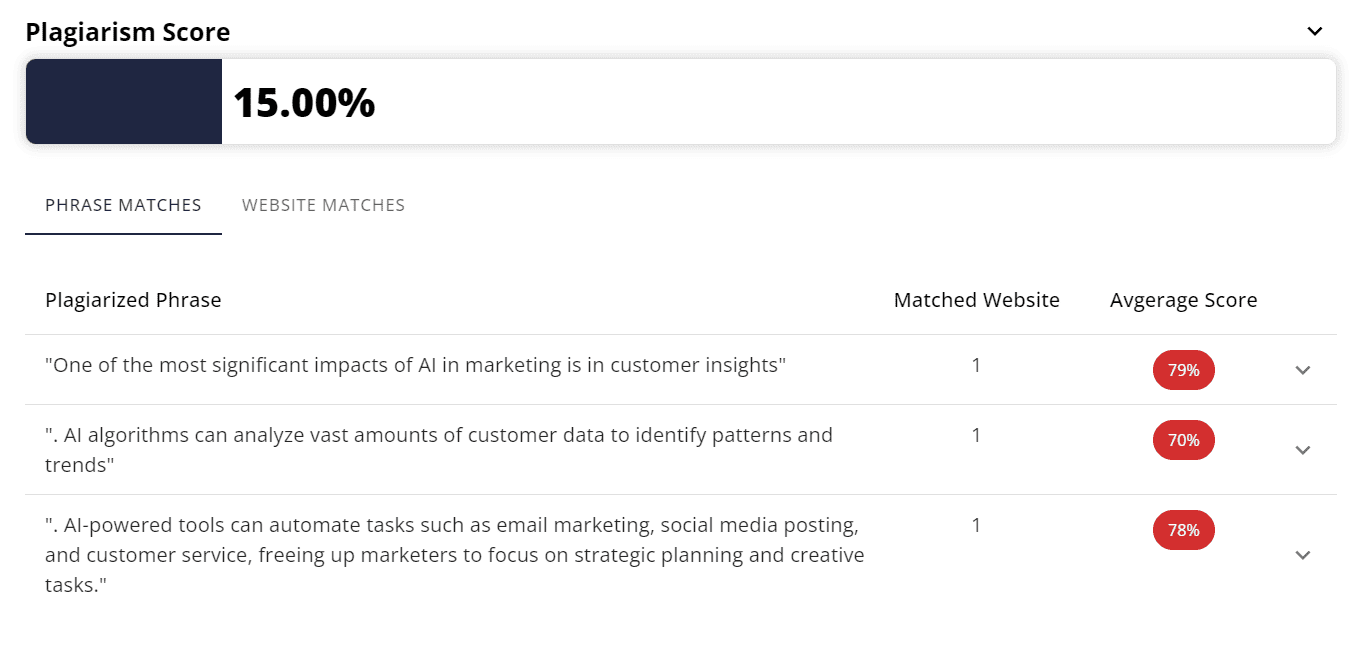

Plagiarism — a whopping 15%.

The plagiarism percentages worry me slightly. But I’m not really concerned, since I write original content. Yes, I use AI sometimes, but I dictate what and how it writes, so plagiarism is a non-issue for me. Still, the numbers deserve a closer look.

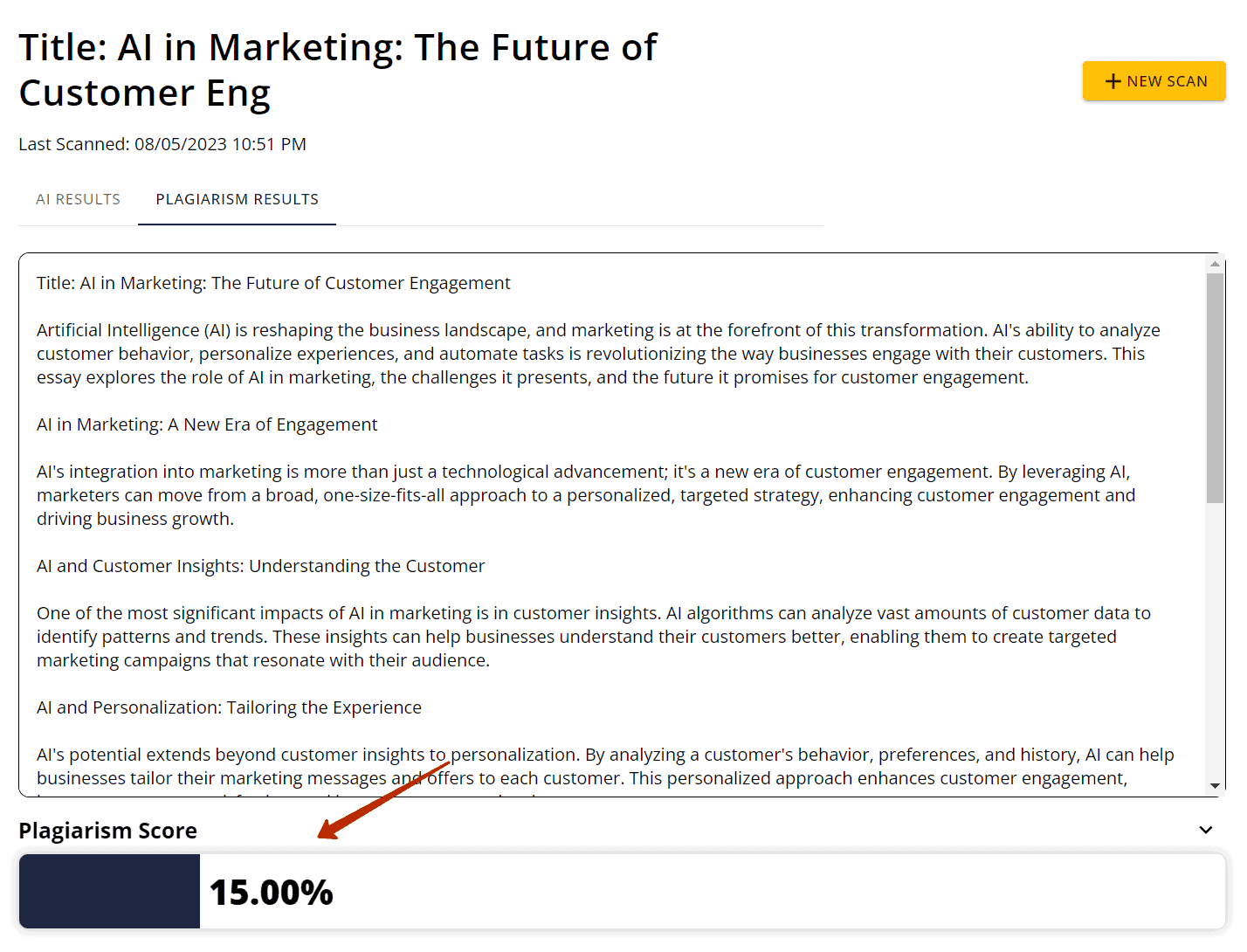

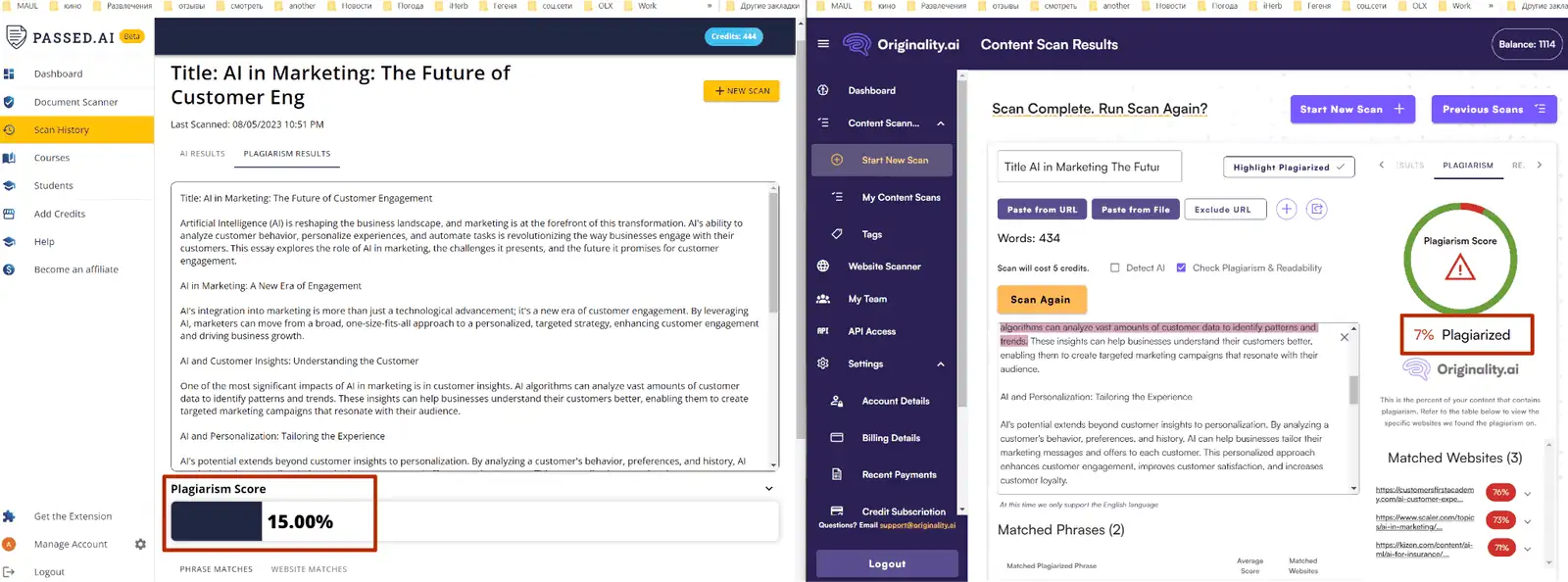

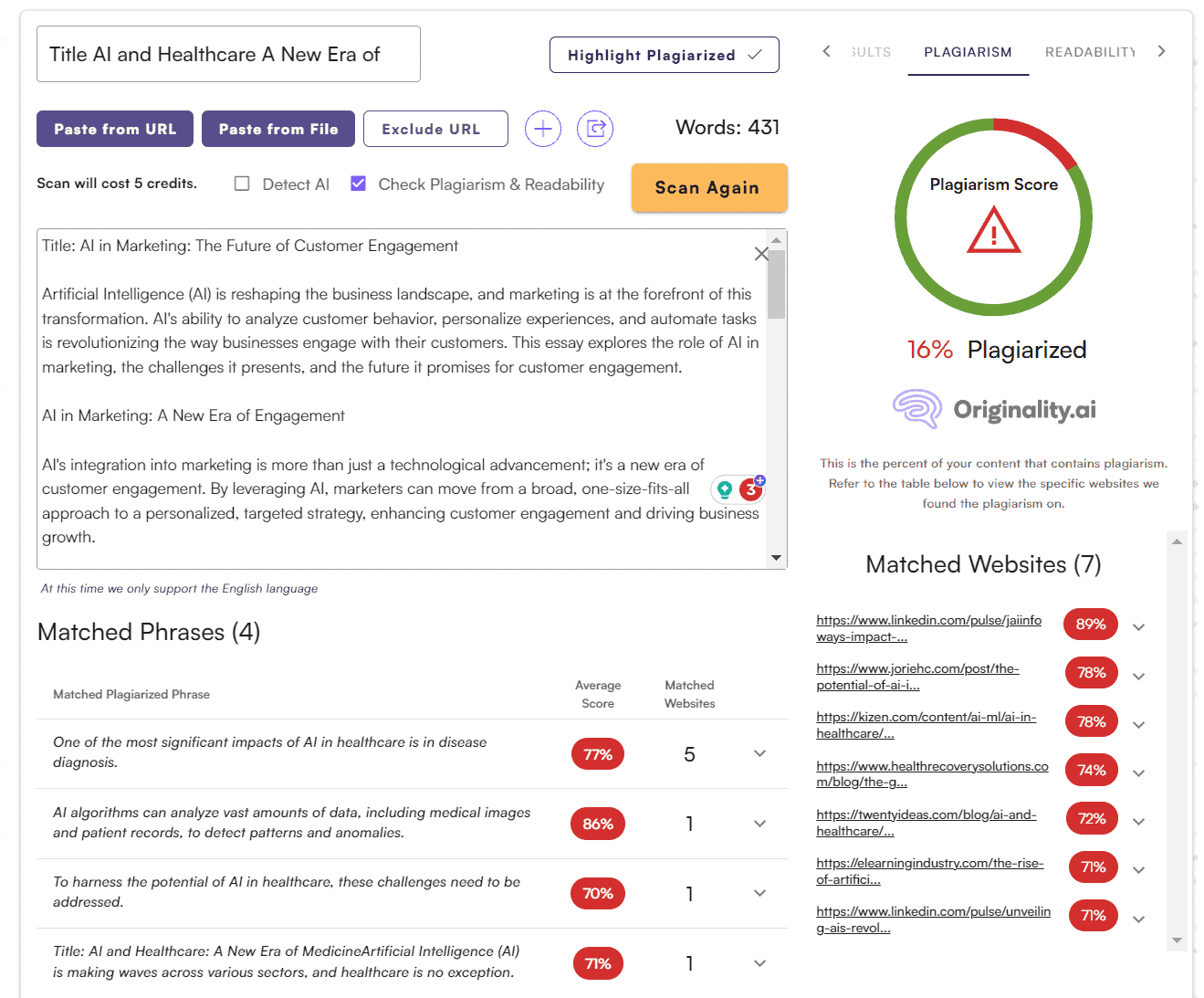

I’ll check the same text in Passed AI, Originality AI, and Grammarly to compare. Here we go:

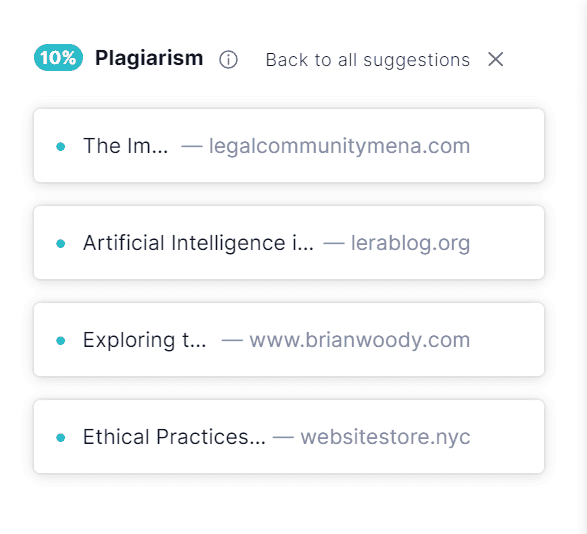

As expected, the results differ somewhat from Originality. Passed AI shows 15%, Originality AI shows 7%.

But the percentages and phrase matches are roughly similar.

Originality AI results:

Passed AI:

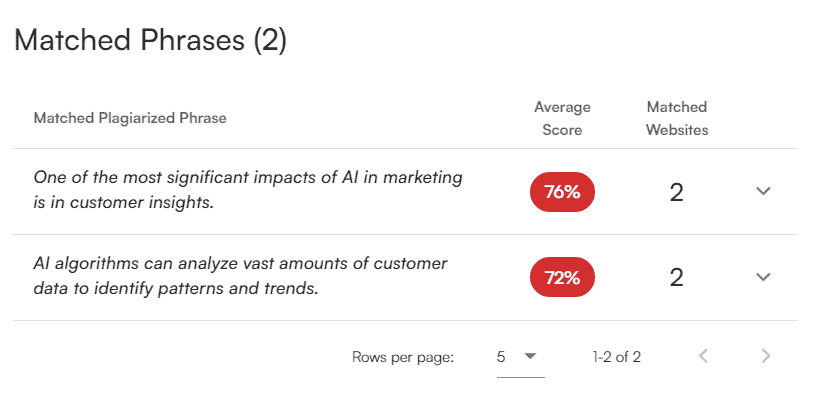

Both tools compare Phrase Match and Website Match. I showed Website Match above. Now Phrase Match:

Passed AI:

Originality AI:

Largely similar results. Passed AI may inflate plagiarism percentages slightly, but any two tools that calculate their metrics differently will show minor discrepancies.

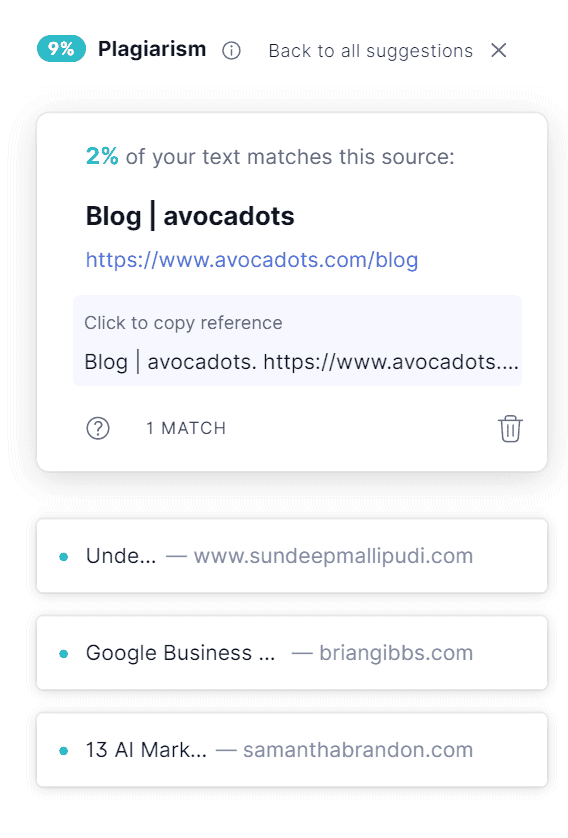

Now Grammarly:

Different phrase matches, 9% plagiarism here.

Let’s do one more, again checking Originality AI and Grammarly.

I’ll use the text with the highest Passed AI plagiarism percentage.

Phrase Match:

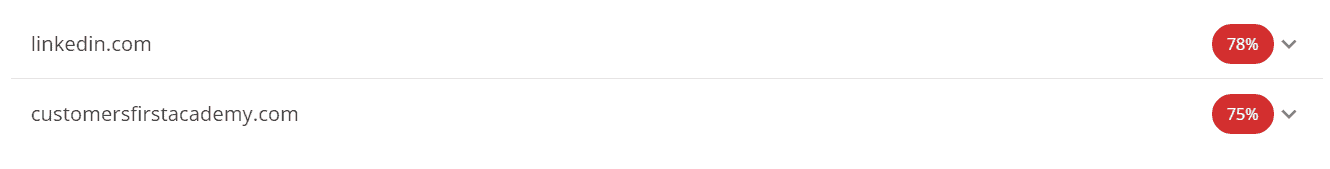

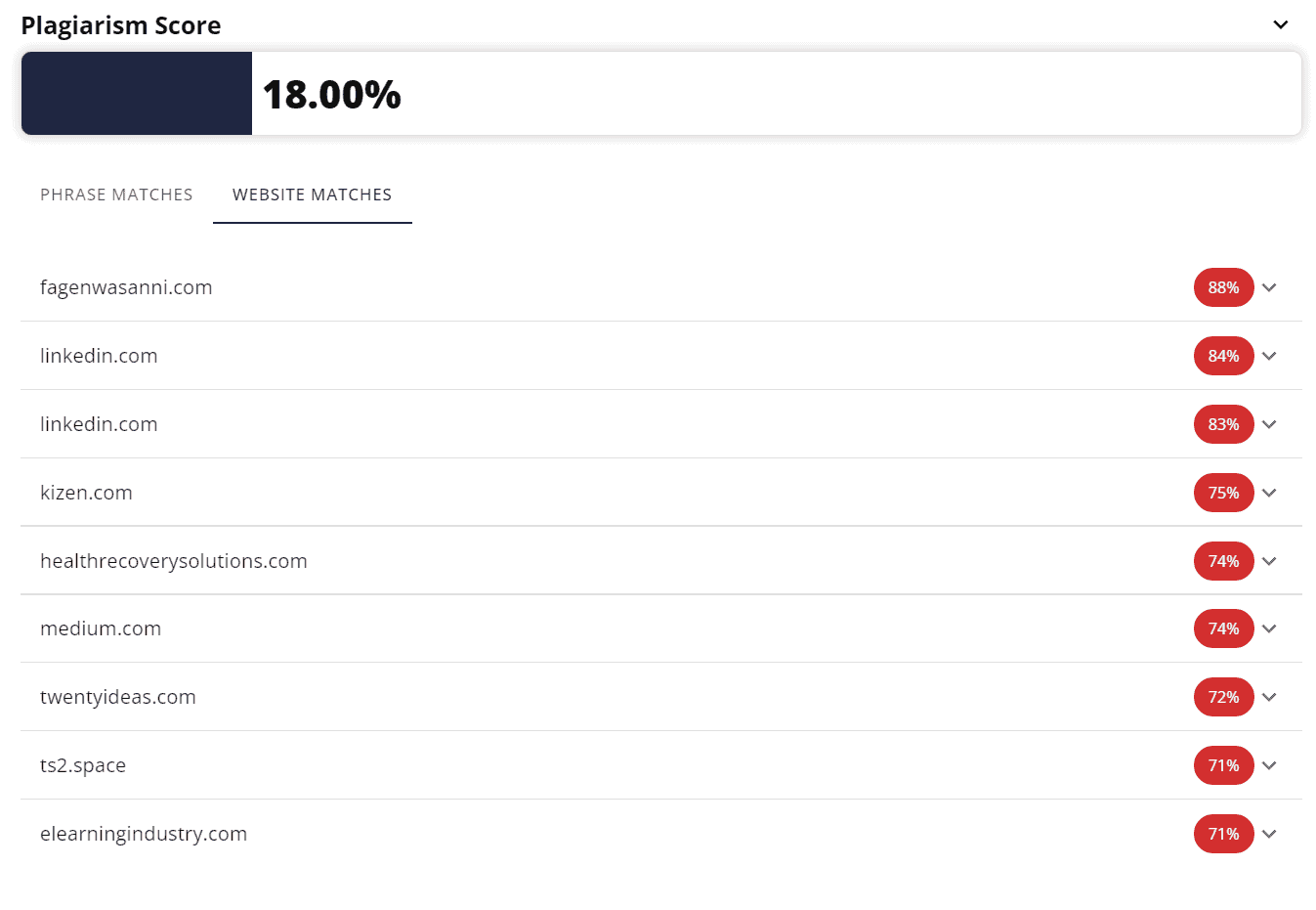

Website Match:

Originality AI:

Originality showed a slightly different 16% (vs. Passed AI’s 18%). Similar phrases, but Passed AI found more sites (9 vs. 7) for Website Match.

Now Grammarly:

Just 10% here. Passed AI and Originality AI seem stronger on plagiarism checks.
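For the plagiarism cross-checks, the Python snippet below just lines up the percentages reported above and computes two numbers per text: the gap between Passed AI and Originality AI, and the spread across all three tools. It’s plain arithmetic on my results, not anything the tools themselves output; the `compare` helper is my own.

```python
# Plagiarism percentages reported in this review (same text checked in each tool).
results = {
    "Test 5 text": {"Passed AI": 15, "Originality AI": 7, "Grammarly": 9},
    "Test 2 text": {"Passed AI": 18, "Originality AI": 16, "Grammarly": 10},
}


def compare(scores: dict[str, int]) -> tuple[int, int]:
    """Return (Passed AI minus Originality AI, max-min spread across all tools), in points."""
    gap = scores["Passed AI"] - scores["Originality AI"]
    spread = max(scores.values()) - min(scores.values())
    return gap, spread


for text, scores in results.items():
    gap, spread = compare(scores)
    print(f"{text}: Passed AI vs Originality AI {gap:+d} points, spread across tools {spread} points")
```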

To summarize: Passed AI flagged every ChatGPT essay as AI-written, with AI Scores between 96.8% and 100%. Its plagiarism checks held up too. Yes, there were small deviations from Originality AI’s plagiarism results, but they’re minor, and Passed AI sometimes even exceeds Originality AI.

Round 3: Checking my own texts for AI detection

Passed AI nailed AI text identification. Now let’s see how it fares on my personal writings.

I’ll randomly grab excerpts to demonstrate its capabilities. There shouldn’t be any issues here, but let’s verify. No more plagiarism checks: I’m convinced of Passed AI’s skills there.

Test 1:

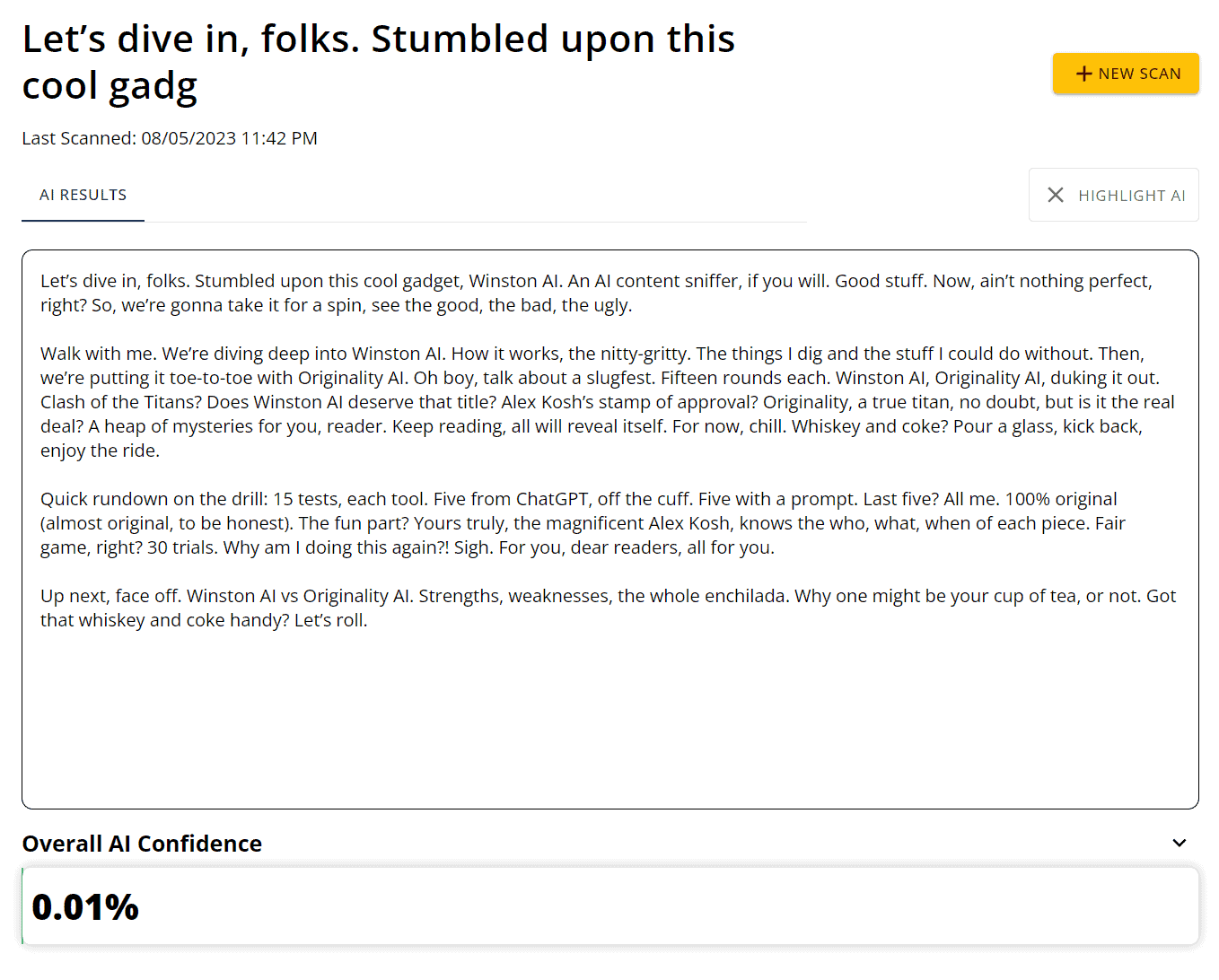

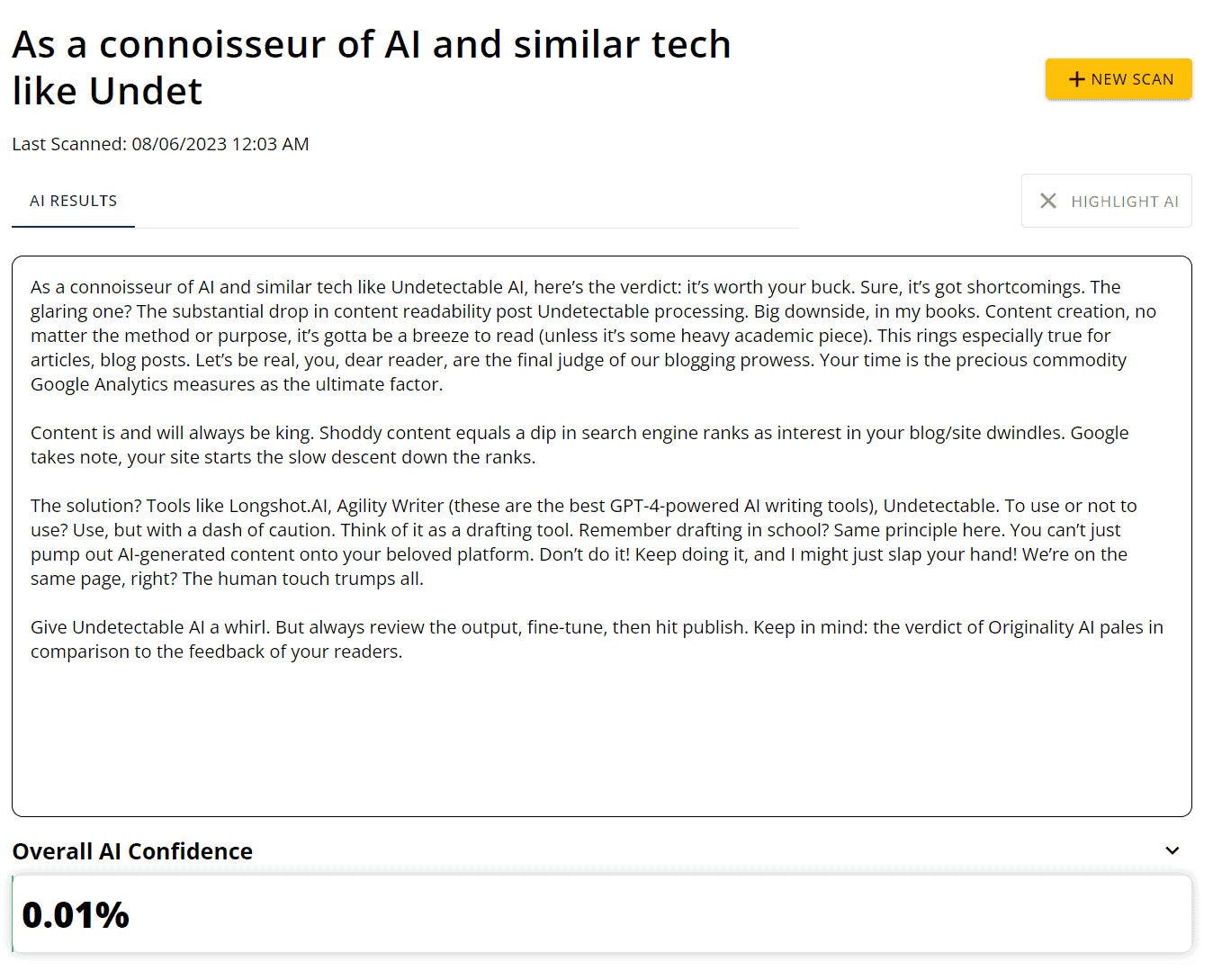

My text, from my Winston vs Originality AI review. Recommended reading btw.

Test 2:

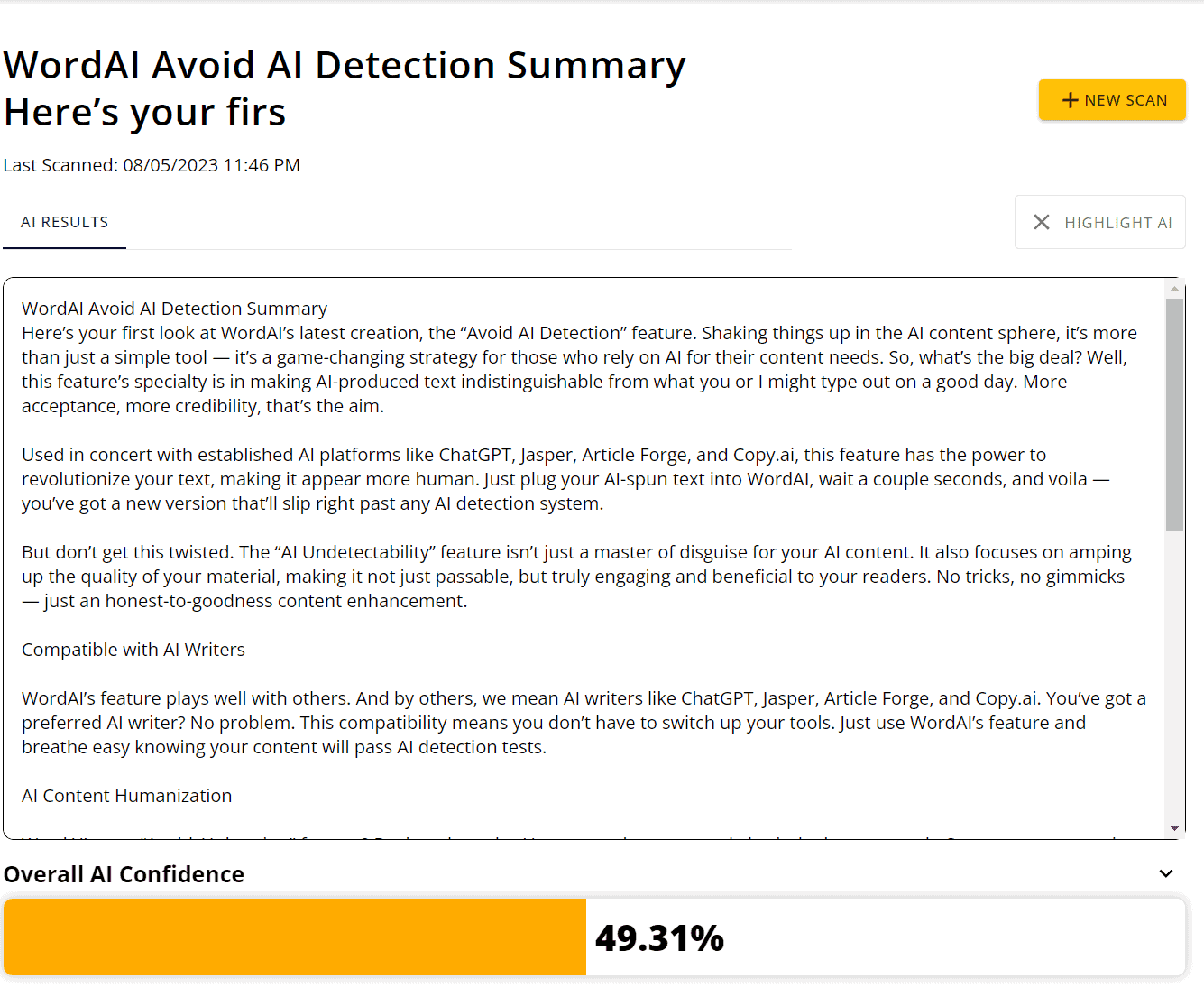

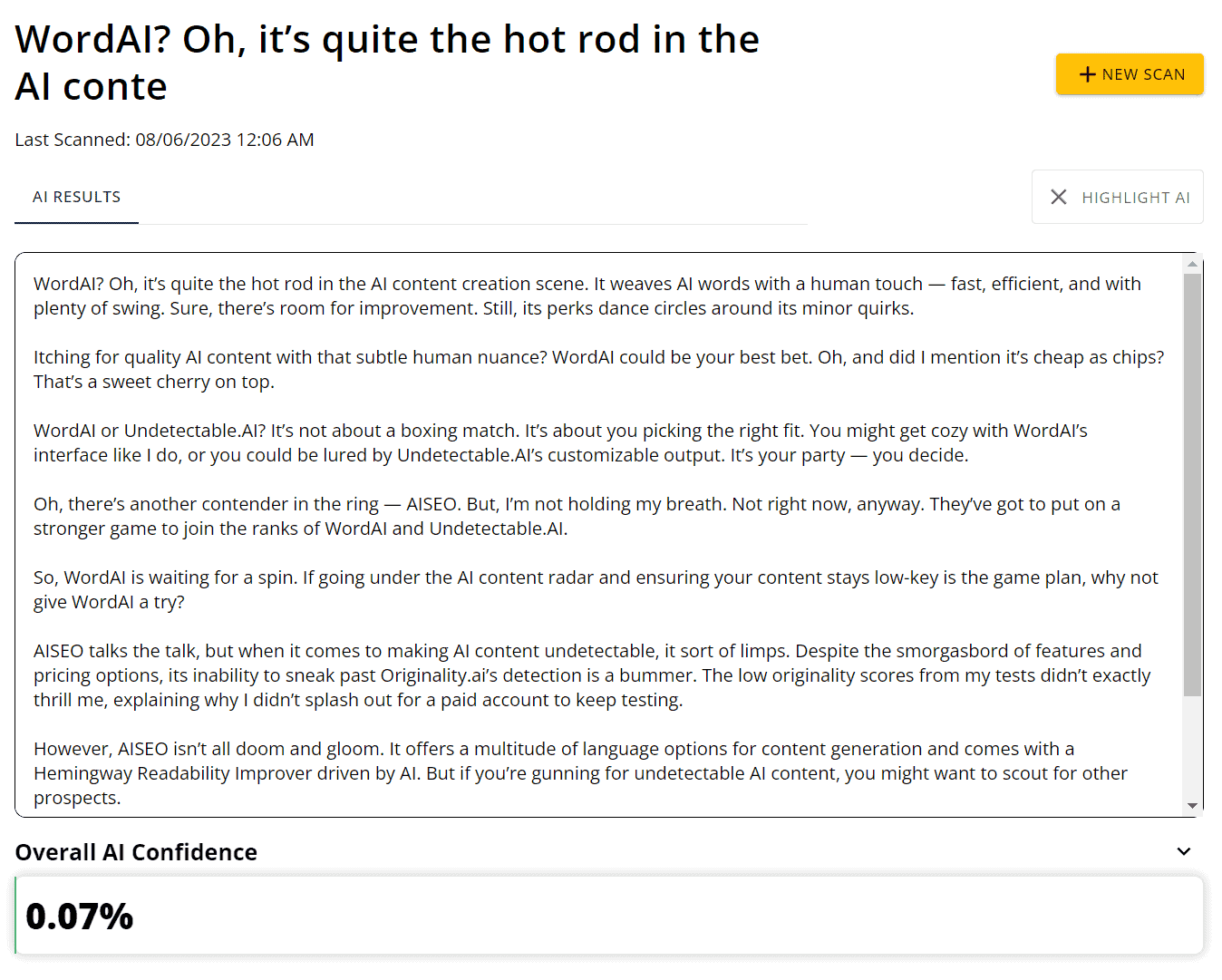

Whoops, almost got caught! Let me explain. This is from my WordAI review. WordAI spins text to beat tools like Passed AI and Originality AI. But here’s the thing: I followed the same feature description pattern. Hence Passed AI’s percentage.

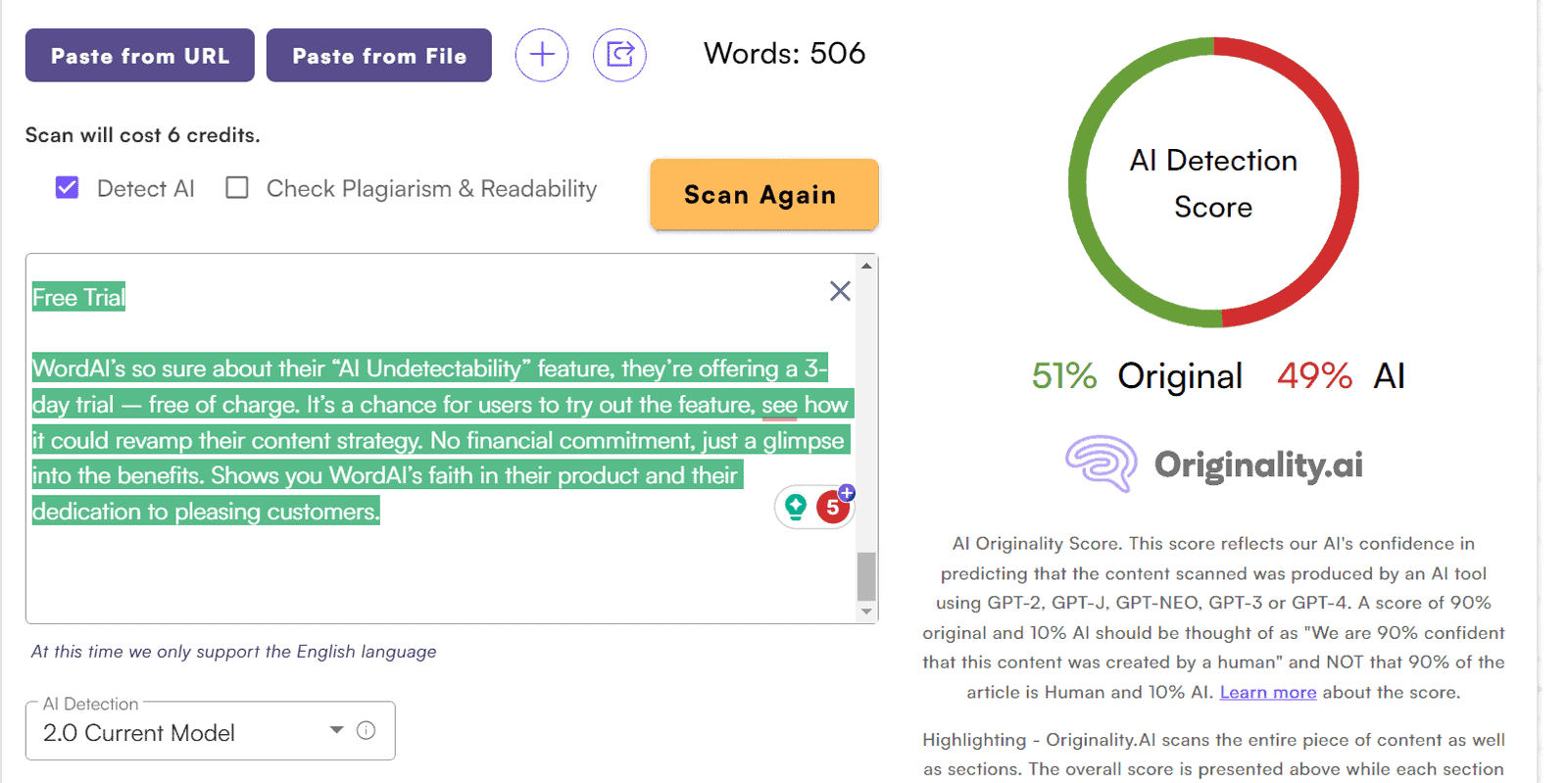

For comparison, here’s the same text in Originality AI, with the same percentage:

But the text is all green:

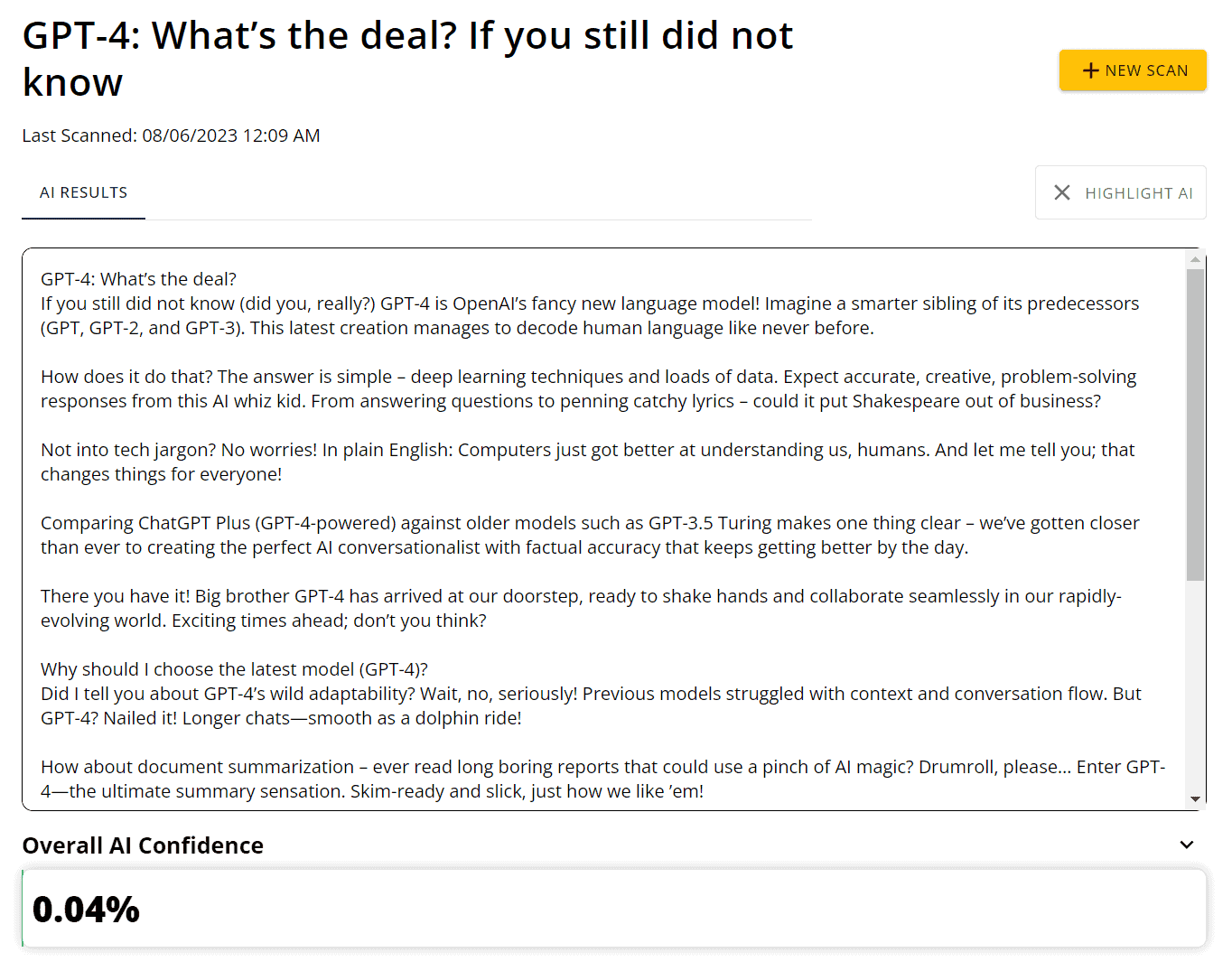

Test 3:

Accurate. 99.99% human-written content identified correctly.

Test 4:

Another excerpt ID’d properly. 99.93% human content.

Test 5:

99.96% original, human-written content. I rest my case.

To summarize: Passed AI nails identifying human-written content, just like Originality AI. Yes, one test showed only 49%, but I explained why — Originality AI looks at patterns too, and I followed a pattern in that review. Logical and clear.

I considered testing content using prompts. I study prompt engineering and can generate human-passing AI content. But for ethical reasons, no texts or prompts here. My view: students should use their own thinking, period.

Passed AI: User Experience

Now my take on using Passed AI. I won’t dive into every feature — it’s intuitive:

Log in and you’ll see the Dashboard and the left panel. Typical AI detector layout. No need to overcomplicate things.

We already know the Document Scanner. Below it is the course creation tab. Add an ID for convenience:

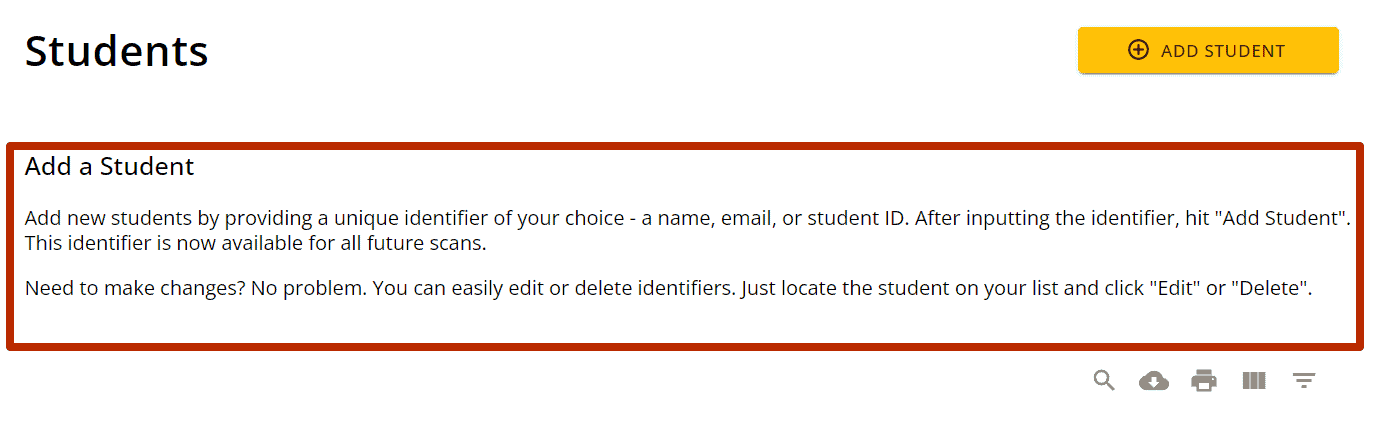

Adding students works the same:

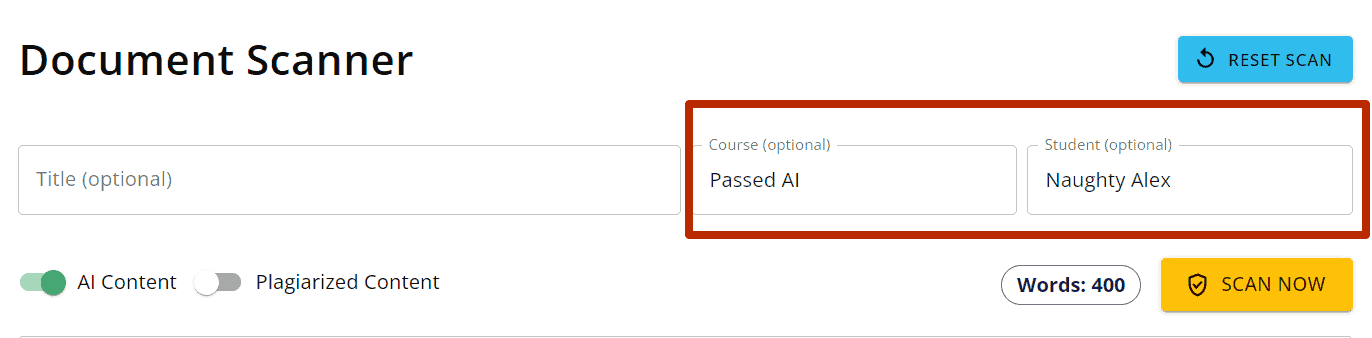

What’s this for? Let me show you. Select a course and student when scanning:

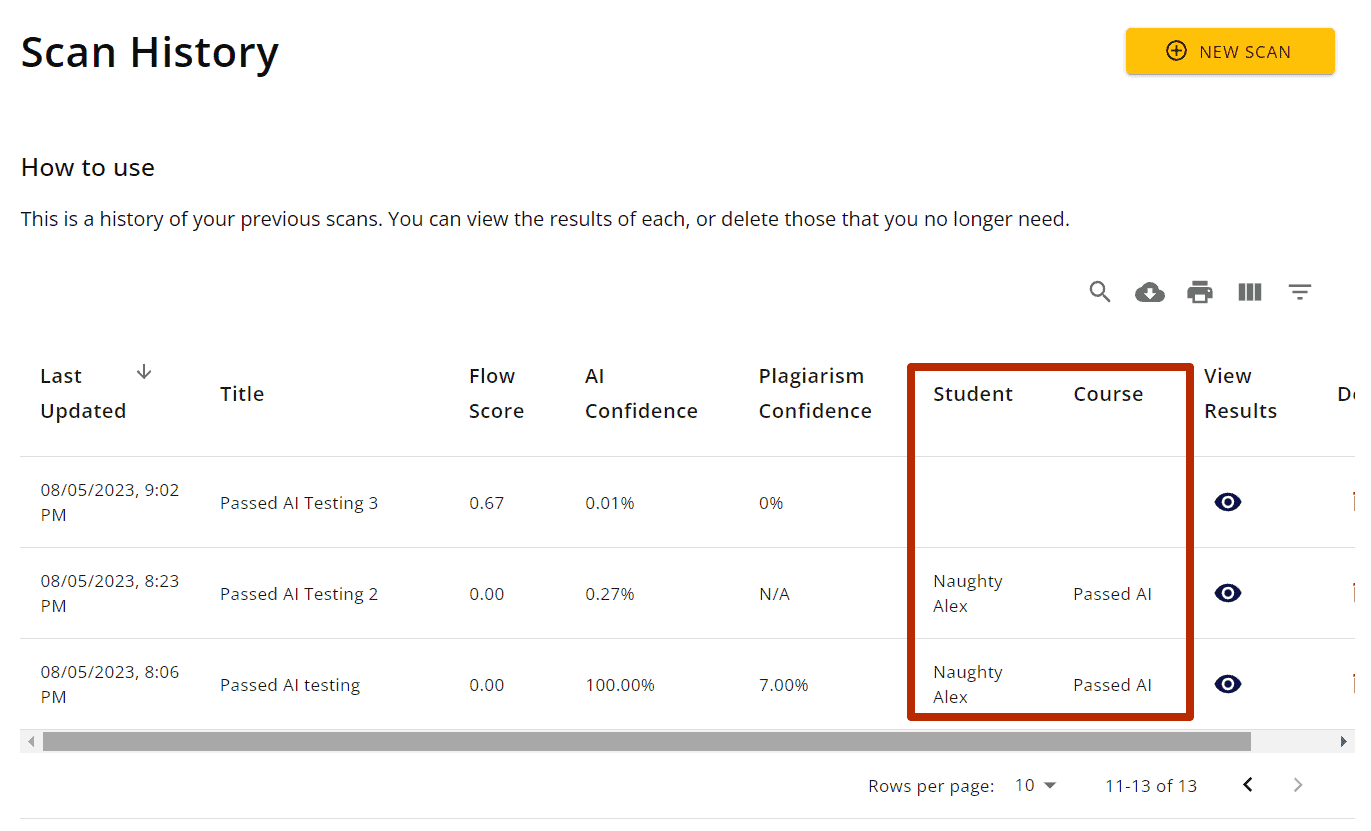

This makes browsing Scan History easier:

Navigation is simple. Plus, each tab has tips:

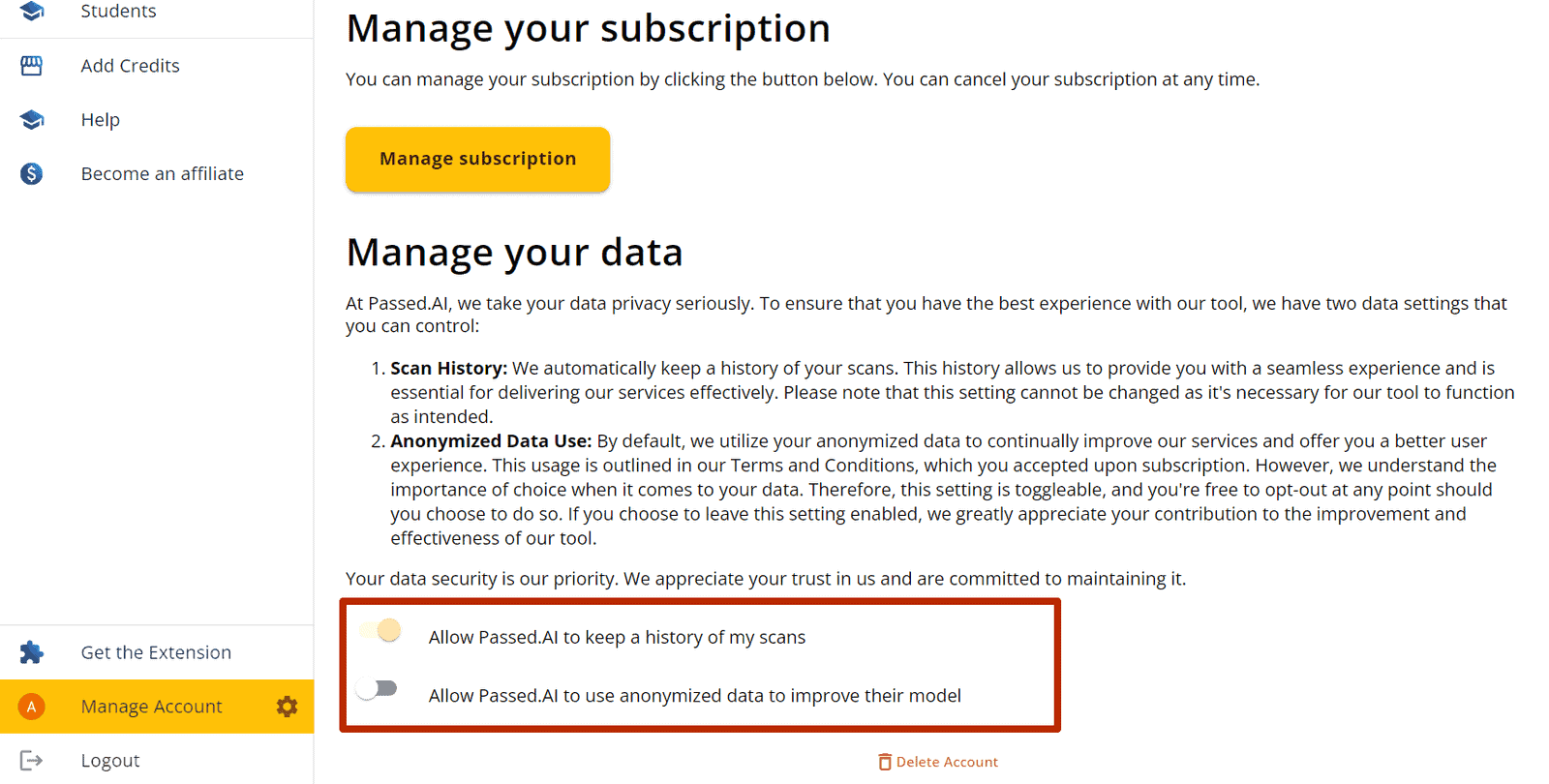

Like Originality AI, Passed AI lets you decide whether to share your data with its developers. That’s key, because only the user should control their data. Unfortunately, I didn’t see this option in Winston AI.

Manage data under Manage Account:

In summary: a pleasure to use. No serious issues. A couple of times during testing I couldn’t view scan results; just refresh or toggle between the AI/Plagiarism tabs. A minor bug, especially for a beta product:

Passed AI Pricing

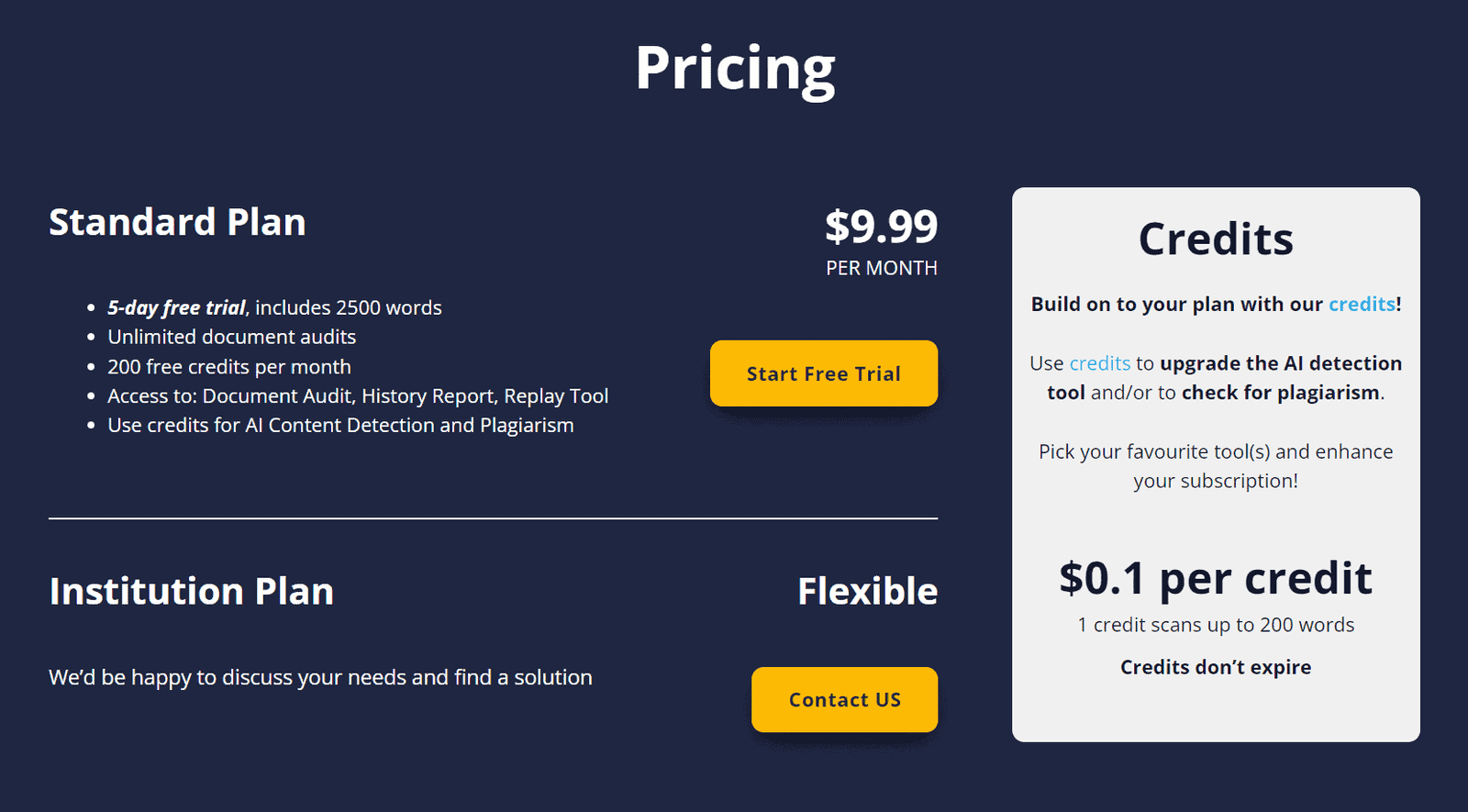

Simple: $9.99/month gets you the tool plus 200 free credits each month. 1 credit = $0.10 and scans up to 200 words (it depends on the plan; for me it was 100 words/credit). And credits don’t expire, which is a crucial point.

One clarification: don’t expect 200 words/credit on the standard $9.99 plan with 200 credits. You’ll get 100 words/credit. If you’ll scan more than 20,000 words a month, get the 1,000-credit pack, which gets you 200 words/credit.

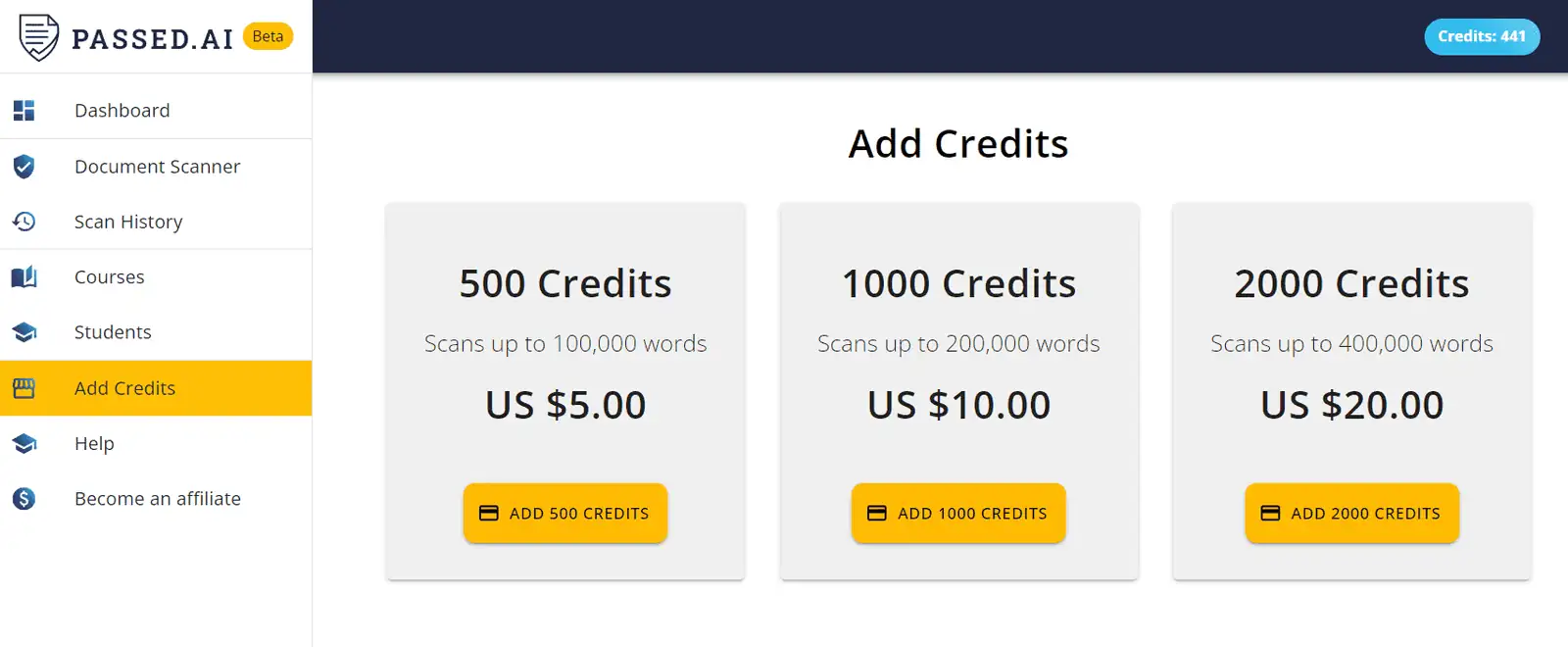

Let me break it down: $9.99, 200 credits. With those you can scan 20,000 words (40,000 at the higher 200 words/credit rate that comes with the 1,000-credit purchase). Buy more credits if needed in the Add Credits tab:

Choose credit amount here.

So around $10 for the tool itself, pay extra for credits. Does this make sense?

Key so you don’t get confused:

- Don’t expect 200 words/credit on the basic $9.99 plan. You’ll get 100 words/credit.

- Scanning over 20,000 words/month? Get the 1,000 credit pack for 200 words/credit.

- Plagiarism scans cost double credits.

Hope this provides clarity.
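Here’s the credit math as a small Python sketch. The 200 monthly credits, the 100 and 200 words/credit rates, and the double cost for plagiarism scans come from above; rounding a partial block of words up to a full credit is my assumption, and `credits_needed` is a hypothetical helper, not part of Passed AI.

```python
import math

MONTHLY_CREDITS = 200          # included with the $9.99 plan
WORDS_PER_CREDIT_BASIC = 100   # what I got on the $9.99 plan
WORDS_PER_CREDIT_PACK = 200    # rate with the 1,000-credit pack


def credits_needed(words: int, words_per_credit: int = WORDS_PER_CREDIT_BASIC,
                   plagiarism: bool = False) -> int:
    """Estimate credits for one scan. ASSUMPTION: partial blocks round up to a full credit."""
    credits = math.ceil(words / words_per_credit)
    # Plagiarism scans cost double credits.
    return credits * 2 if plagiarism else credits


print(MONTHLY_CREDITS * WORDS_PER_CREDIT_BASIC)     # 20000 words a month
print(credits_needed(1500))                         # 15
print(credits_needed(1500, plagiarism=True))        # 30
print(credits_needed(1500, WORDS_PER_CREDIT_PACK))  # 8
```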

Passed AI vs Originality AI

Here’s the big comparison. Passed AI’s main rival is Originality AI — they both have Chrome extensions. I hadn’t used Originality’s before.

Passed AI’s secret sauce is their extension. How do both extensions stack up?

Originality AI’s plugin:

Passed AI’s:

Advantage: Passed AI.

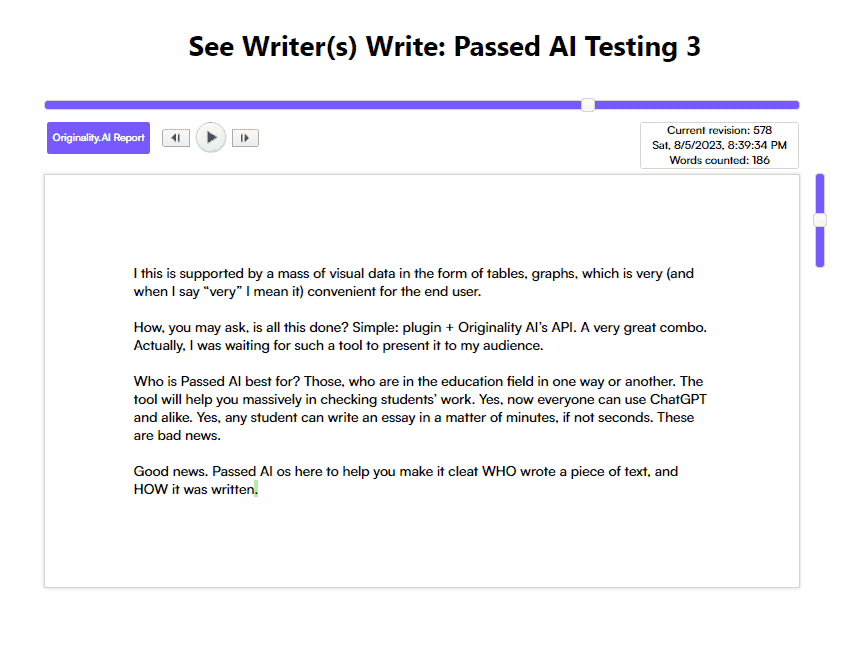

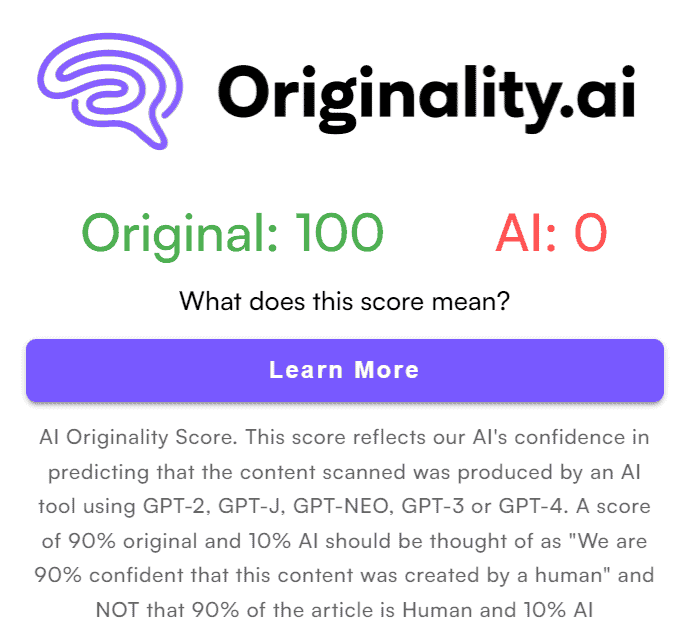

Clicking Originality Report gets you:

Clicking Passed AI’s Audit Report:

More details? I think so.

The Replay tools are nearly identical:

Except Passed AI has speed controls (I didn’t see any in Originality’s).

Originality’s Update button immediately scans for AI:

No Quick Scan like Passed AI’s.

And no tabs like Passed AI:

With Passed AI’s tabs, teachers can run an overall audit, a full scan, and a plagiarism check.

There’s a Help tab too.

Choice seems clear.

Don’t get me wrong: as a general AI detector, Originality AI is superb. But Passed AI, using it as a model, built something unique for educators.

Your call, but Passed AI has my vote.

Why Educators Need AI Detection

Teachers. Professors. You feel me. Times are changing. Technology advancing. New challenges in the classroom. Like students using AI to write essays and papers. Don’t play coy — you know it’s happening.

So why do you need an AI detection tool like Passed AI? Let me break it down:

Preserve Academic Integrity

- AI content undermines the learning process

- Enables cheating and plagiarism

- Erodes trust between student and teacher

Maintain Educational Standards

- AI can write persuasively but often lacks true comprehension

- Work should demonstrate the student’s understanding

- Assessing real knowledge requires identifying AI content

Fairness to Honest Students

- Most students do their own work legitimately

- AI content gives cheaters an unfair advantage

- Levels the playing field for those playing by the rules

Uphold Your Reputation

- Teachers judged for not catching dubious content

- AI-generated work suggests lax academic standards

- Robust detection preserves your status as an educator

Keep Up with Technology

- AI writing tools are only getting more advanced

- Existing tactics like plagiarism checks are falling behind

- AI detection is essential for the tech-enabled classroom

Look, the writing’s on the wall. Battling AI-authored work is critical for today’s teachers. Passed AI brings next-gen tools purpose-built for the task. Enter the era of AI-enhanced education — with educators empowered, not imperiled, by technology.

Final Word: Is Passed AI Worth It?

In this digital age, the boundaries are fading. Human creativity and machine intelligence? They’re merging. But here’s a standout: a tool shaking up the learning game. Meet Passed AI. It’s no mere gadget. A revolution in education. Beyond catching the cheaters, it’s about integrity. Originality. Honesty.

Pressed for time? Quick scans. Need full trust? Comprehensive scans. The Chrome extension with Google Docs integration? Handy. One click. AI-written content identified. The human touch? Alive and well.

Mistakes. Students make them. But now, a clear line between innocent mishap and intentional fraud. The audit report tells a story. Flow score, actions, duration. It’s all there. The replay tool, long inserts, timelines? Transparency at its best.

Innovation. That’s the name of the game. Passed AI doesn’t fight the future; it welcomes it. AI’s growth? Inevitable. But it’s here to enhance, not replace. To free, not imprison. A guiding light for educators. A tool, not a hindrance.

Implications? Big. Message? Unmistakable. AI’s here, but it’s no menace. Opportunity knocks. The human mind? Still the champion.

Conclusion? Passed AI’s not just a game-changer. It’s a lifesaver in a storm, a new way of thinking. Preservation, education, growth. The future’s about values, and with this tool, they’re safe. Technology advances, but academic integrity? Rock solid. The future? It’s here. Fear? Gone. The power? Yours. Always has been. Always will be.

About the Author

Meet Alex Kosch, your go-to buddy for all things AI! Join our friendly chats on discovering and mastering AI tools, while we navigate this fascinating tech world with laughter, relatable stories, and genuine insights. Welcome aboard!
