As a pro blogger, I think tools like Claude, GPT-4, and Agility Writer are super helpful. They let me research topics, make outlines, and write posts way faster than I could by hand.

But I always edit and polish everything myself in the end. These smart tools can write good stuff fast. But I don’t want my work to sound robotic. I want my posts to sound like me!

I use AI strictly for drafting. The final polish is always mine.

Six months ago, I started using Originality.AI. It gives me confidence that my writing is 100% mine, even when I use AI to write a first draft. In this post, I’ll share my experiences using Originality every day as a pro blogger. It’s helped me keep my work original without slowing me down.

The cool thing is Originality doesn’t just say if AI wrote something. It can show the exact parts that aren’t original. So I can use AI drafts to get started fast. But Originality makes sure my finished work sounds human.

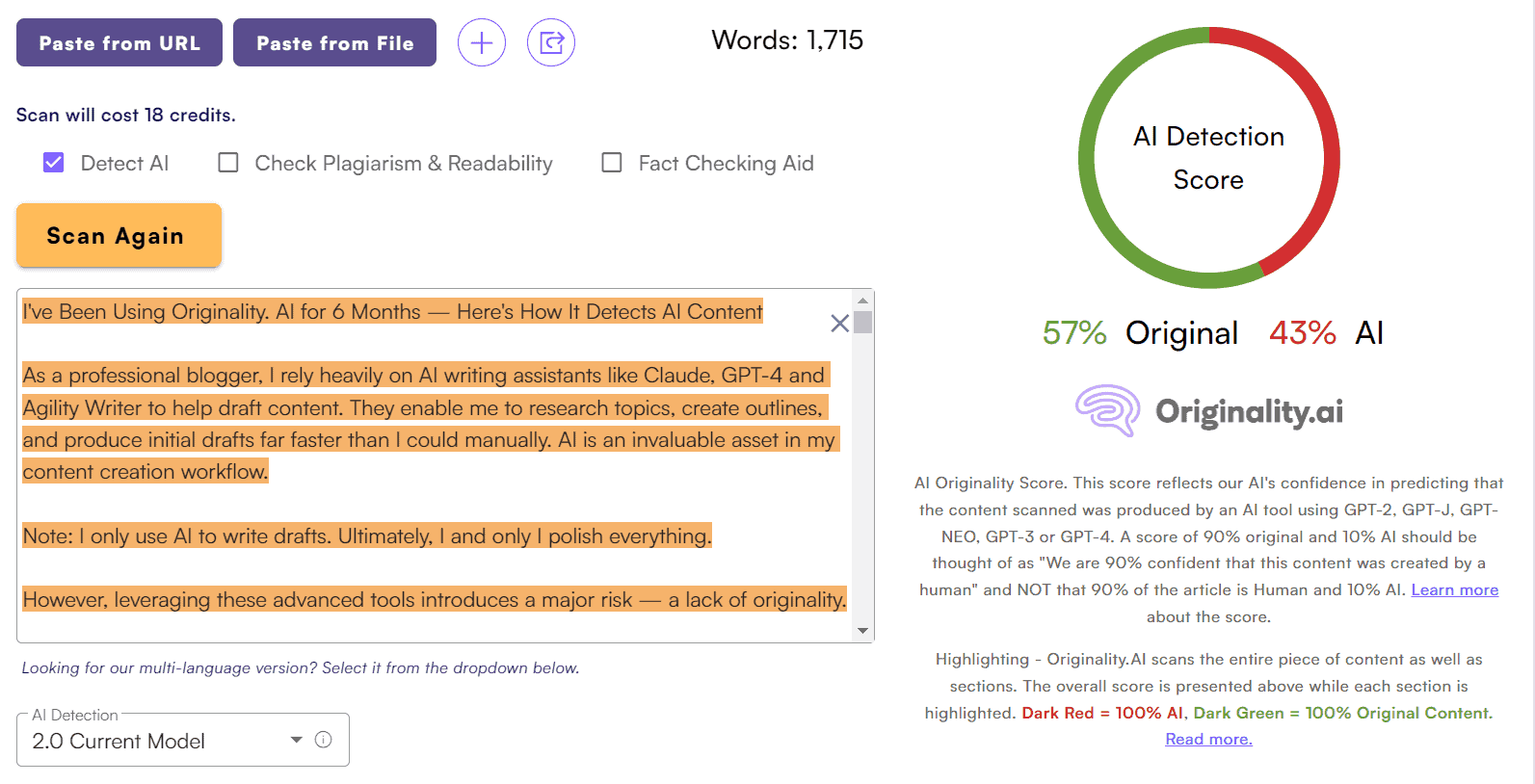

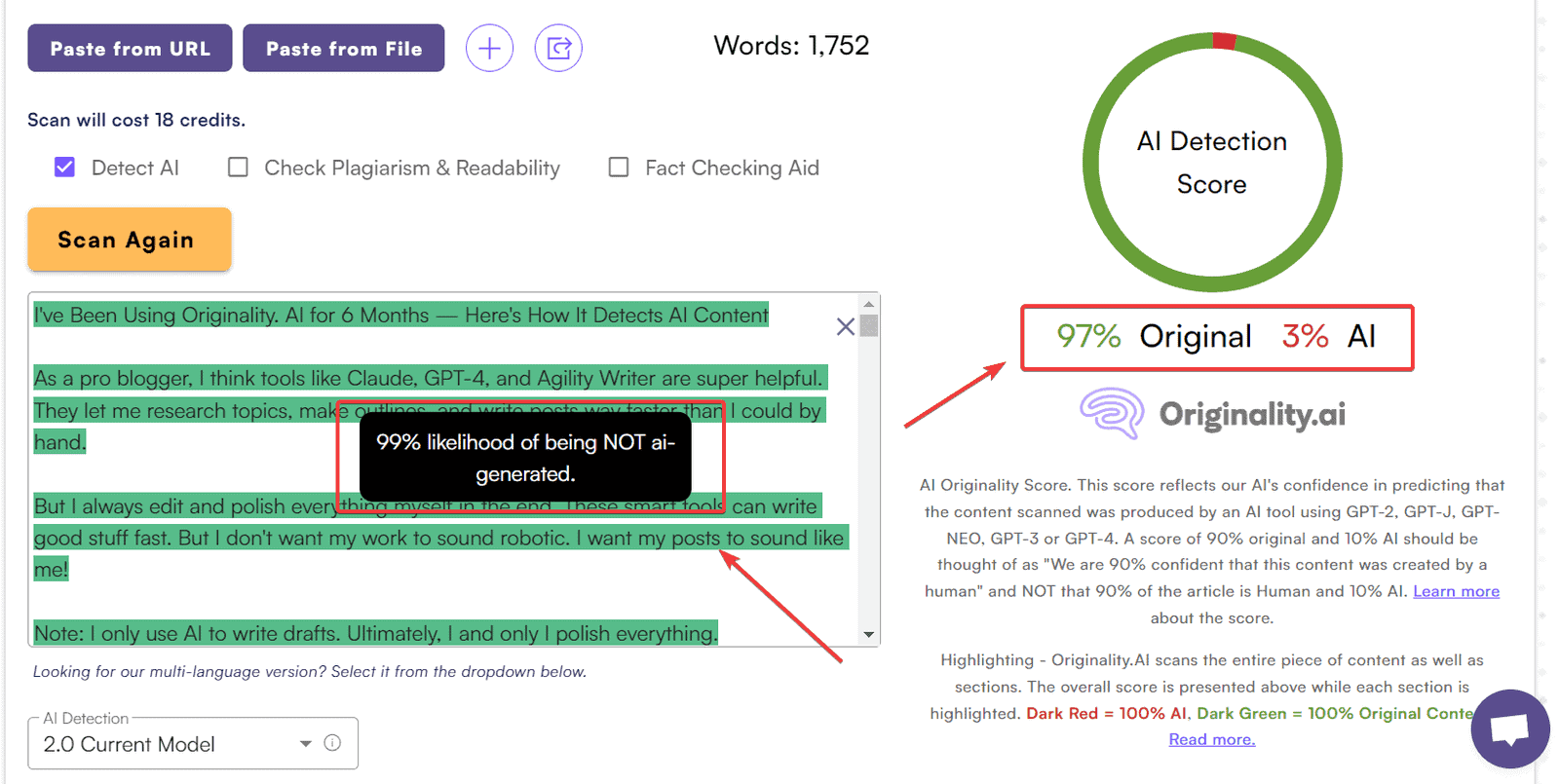

For example, I gave Claude some context and had it write a first draft of this post about how I use AI as a blogger.

I carefully edited Claude’s draft to share my thoughts in my words. But before hitting publish, I ran my final version through Originality to double check.

Originality flagged a few sentences that matched Claude’s draft too closely. Thanks to its detailed reports, I tweaked those parts to ensure this post is my original work start to finish.
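If you want a rough feel for that kind of check, here is a minimal Python sketch. To be clear, this is not how Originality works internally. It only compares each sentence of a final post against the AI draft using the standard library's `difflib.SequenceMatcher`. The function names, the 0.8 threshold, and the sample texts are all made up for illustration.

```python
import re
from difflib import SequenceMatcher


def split_sentences(text):
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def flag_close_matches(final_text, draft_text, threshold=0.8):
    """Return (sentence, similarity) for final sentences that stay close to the draft.

    The threshold is an arbitrary illustrative value, not a recommended setting.
    """
    draft_sentences = split_sentences(draft_text)
    flagged = []
    for sentence in split_sentences(final_text):
        # Best similarity between this sentence and any sentence in the draft.
        best = max(
            (SequenceMatcher(None, sentence.lower(), d.lower()).ratio()
             for d in draft_sentences),
            default=0.0,
        )
        if best >= threshold:
            flagged.append((sentence, round(best, 2)))
    return flagged


# Hypothetical sample texts, invented for this example.
draft = "AI tools help me write faster. They are useful for research and outlines."
final = ("Honestly, drafting by hand used to eat my weekends. "
         "They are useful for research and outlines.")

for sentence, similarity in flag_close_matches(final, draft):
    print(f"{similarity:.2f}  {sentence}")
```

A sentence copied straight from the draft gets flagged, while a fully rewritten one does not. It only catches near-copies, so it is no substitute for a real AI detector.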

Being able to keep my voice and stay original without slowing down has been awesome.

My Ongoing Struggle To Keep My Writing Original

As a blogger with a tech site, AI writing tools help me a ton:

- Huge time saver — AI helps me research, outline posts, and write first drafts way faster.

- More posts — With AI, I can publish a lot more often and give search engines fresh content.

- Better engagement — Using tools like Claude, I make posts readers want to read.

The benefits of AI are fantastic. But it introduces one big risk — originality.

As a pro blogger, I use tools like Claude and GPT-4 to write first drafts for my site. It saves me crazy amounts of time.

But even as the creator, sometimes I can’t tell what parts were written by AI versus me or my human team. Sometimes the AI stuff just sounds so natural. Not always, but it happens.

I’ve spent hours and hours reviewing draft posts word-by-word trying to find the human vs AI bits. It was like searching for a needle in a haystack!

The AI tools make me way more productive. But checking everything by hand wiped out those gains. I really needed a better solution.

I didn’t need something to catch plagiarism or copied content. I needed tech that could spot which parts of my writing were made by an algorithm, not a human. An advanced AI detector that could quickly tell human vs machine text apart.

Finding Originality.AI — The Solution I Needed

Searching for the best AI detector, I tested a bunch of tools. I was pumped when I found Originality.AI.

Right away, I could tell it was way better than other AI detectors. Originality had three awesome advantages:

- Advanced AI detection — Goes deep to spot language patterns created by AI.

- Super accuracy — Using machine learning, it catches AI text about 70% of the time, even advanced AI.

- Helpful insights — Shows exactly what’s AI vs original text with highlights and popups.

After using Originality almost every day for six months, I feel totally confident my content is solid.

Now, let’s look at how this tool uses AI itself to identify machine-written text so accurately.

A Look Inside Originality’s AI Content Detector

Originality isn’t built from hand-written rules. Instead, it relies on machine learning, the same kind of AI that is changing so many other areas.

Specifically, Originality uses an advanced neural network model trained with deep learning.

By studying huge amounts of data, this AI model learns subtle patterns that tell human writing apart from machine text. It uses these insights to predict if new text was written by a human or an algorithm.
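To make the "learn from labeled examples, then predict" idea concrete, here is a toy sketch in Python. It is a tiny word-count classifier (naive Bayes), which is a much simpler stand-in I picked for illustration and not the neural network Originality describes. The class name and the four training sentences are invented.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


class TinyNaiveBayes:
    """Word-count classifier with add-one smoothing (a stand-in, not a neural network)."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.word_counts = {label: Counter() for label in self.labels}
        self.doc_counts = Counter(labels)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in self.labels:
            counts = self.word_counts[label]
            total_words = sum(counts.values())
            # Start from the log prior, then add a smoothed log likelihood per word.
            score = math.log(self.doc_counts[label] / total_docs)
            for word in tokenize(text):
                score += math.log((counts[word] + 1) / (total_words + len(self.vocab)))
            scores[label] = score
        return max(scores, key=scores.get)


# Invented toy data: two "human" and two "ai" sentences.
texts = [
    "honestly my weekend was a mess lol",
    "ugh i spilled coffee on my notes again",
    "in conclusion it is important to note the benefits",
    "furthermore it is important to consider the following",
]
labels = ["human", "human", "ai", "ai"]

model = TinyNaiveBayes().fit(texts, labels)
print(model.predict("it is important to note the following"))
print(model.predict("lol my coffee was a mess"))
```

With only four training sentences this proves nothing about real-world accuracy. It just shows the shape of the workflow: labeled text goes in, and a prediction for new text comes out.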

But how does this work behind the scenes?

Diverse Training Data Is Key

Like people, AI models need lots of training before they can master skills like finding AI content.

Originality’s model is fed a huge dataset with text from many sources:

- Published books covering different genres

- Articles from big online publishers

- Blog posts on all kinds of topics

- Social media showing informal language

- Forums for hobbyists and pros

- AI-generated text from models like GPT-4

Seeing billions of words in so many styles prepares the model for any text it sees.

Importantly, Originality’s dataset has a ton of machine-generated content. This real AI output is crucial for the model to learn patterns that show text written by an algorithm vs a human.

Advanced Neural Network Architecture

While varied training data is key, having the right model architecture matters, too.

Originality uses a transformer neural network that’s optimized for language tasks.

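Transformers process text by looking at each word in the context of the words around it, loosely similar to how people read. For long passages, this tends to work better than older recurrent networks, which read text one word at a time.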

With billions of internal settings, Originality’s model design can pick up subtle patterns in text. It uses these insights to label content as human or AI with strong accuracy.
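For the curious, the "words in context" idea comes from a mechanism called self-attention. Below is a minimal pure-Python sketch of scaled dot-product self-attention. It is a simplification and says nothing about Originality's actual model. Real transformers apply learned query, key, and value projections, while this sketch uses the input vectors directly for all three, and the tiny 2-number "word vectors" are invented.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = vectors.

    Assumption for brevity: no learned projection matrices, single head.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score this word against every word in the sequence, itself included.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # The new representation is a weighted mix of all word vectors.
        mixed = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs


# Three made-up 2-dimensional "word vectors".
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Each output row blends information from every word in the sequence. That blending is what lets the model judge a word by its neighbors.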

Quick Updates As AI Advances

This is where Originality really excels — their commitment to constant improvement.

As new AI writing tools like GPT-4 and Claude 2 come out, Originality quickly gathers example text from them. They retrain their model to keep catching the newest machine text.

For example, just weeks after GPT-4 launched, Originality updated their model using tons of GPT-4 output. This rapid iteration is key to stay accurate as AI moves forward.

The end result is an AI detector that gets better as language models improve.

Seeing Big Improvements Over 6 Months Of Use

As an early user starting six months ago, I’ve seen Originality get noticeably better during my time using it. As a daily user, those steady improvements show me the team’s commitment to staying current.

Supporting 13 Languages — A Huge Milestone

One of Originality’s biggest achievements so far is adding AI detection for 12 languages beyond English.

For anyone making content in multiple languages, this is super valuable. The 12 added languages are:

- French

- Spanish

- Vietnamese

- German

- Italian

- Polish

- Russian

- Persian

- Dutch

- Greek

- Portuguese

- Turkish

They did this by training their model on diverse text samples from each language — both human and AI-generated.

Lots of testing shows excellent accuracy across the supported languages:

| Language | Accuracy |
|---|---|
| French | 100% |
| Spanish | 100% |
| Vietnamese | 100% |
| German | 100% |
| Italian | 99% |
| Polish | 100% |
| Russian | 100% |
| Persian | 99% |
| Dutch | 100% |
| Greek | 100% |
| Portuguese | 92% |
| Turkish | 100% |
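Taking the figures in the table at face value, a few lines of Python summarize them. The numbers are copied from the table, and the variable names are my own.

```python
# Accuracy percentages copied from the table above.
accuracy = {
    "French": 100, "Spanish": 100, "Vietnamese": 100, "German": 100,
    "Italian": 99, "Polish": 100, "Russian": 100, "Persian": 99,
    "Dutch": 100, "Greek": 100, "Portuguese": 92, "Turkish": 100,
}

mean_accuracy = sum(accuracy.values()) / len(accuracy)
lowest = min(accuracy.items(), key=lambda item: item[1])

print(f"Languages listed: {len(accuracy)}")
print(f"Mean accuracy: {mean_accuracy:.1f}%")
print(f"Lowest: {lowest[0]} at {lowest[1]}%")
```

The average lands just above 99%, with Portuguese as the one language worth double checking by hand.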

If you blog in multiple languages, this gives huge confidence for each project.

Knowing Originality can reliably check content in 13 languages makes it indispensable. This was a massive step forward.

How Originality Compares To Other Detectors

Looking at different AI detectors, I’ve seen most use one of three main approaches:

- Feature-based: Checks for patterns in things like word complexity, sentence structure, and repetition. Easy to build but easier for AI to fool.

- Zero-shot classification: Uses a language model like GPT-3 to predict if text looks like its own output. Can be tricked by rephrasing.

- Fine-tuned model: Uses a robust neural network trained on spotting human vs AI text. More advanced but pricier to develop.
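To show why the feature-based approach is the easiest to build, here is a minimal Python sketch of the kind of signals such a detector might compute. The three features (average word length for word complexity, average sentence length for sentence structure, and share of repeated words for repetition) are my own illustrative picks, not taken from any specific product.

```python
import re
from collections import Counter


def style_features(text):
    """Compute three simple style features for a block of text (illustrative only)."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    words = re.findall(r"[A-Za-z']+", text.lower())
    if not words or not sentences:
        return {"avg_word_len": 0.0, "avg_sentence_len": 0.0, "repetition": 0.0}
    counts = Counter(words)
    # Number of word occurrences that belong to a word used more than once.
    repeated = sum(c for c in counts.values() if c > 1)
    return {
        "avg_word_len": sum(len(w) for w in words) / len(words),
        "avg_sentence_len": len(words) / len(sentences),
        "repetition": repeated / len(words),
    }


# Made-up sample text.
sample = "The tool is fast. The tool is simple. I like it."
print(style_features(sample))
```

A real feature-based detector would feed numbers like these into a scoring rule or a small classifier. Since the signals are this shallow, light rephrasing can shift them, which matches the weakness noted above.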

Originality is a fine-tuned model. From my tests, this method gives way better accuracy when done right.

Although more expensive to make, a fine-tuned model allows:

- Exposure to millions of text examples to find subtle patterns

- Model design specialized for the task

- Quick retraining as new data appears

- Ongoing improvements over time

These advantages are clear when testing Originality against others — it simply has the best precision catching AI text.

Why Originality.AI is My Go-To for Content Integrity

After using Originality every day for months, it’s become a must-have tool I can’t live without.

Here’s why it’s way better than alternatives for my needs as a blogger:

- Reliable every day — Originality gives total confidence my posts stay original, so I can focus on creating.

- Peace of mind — I know my hard work won’t be punished or lose value due to AI detection concerns.

- Work smarter — I maximize gains from AI writing without losing originality. I get the best of both!

- Clear results — With visual labels, I can instantly see any AI content that slipped in while drafting.

- Awesome team — The minds behind Originality impress me — they constantly improve their product.

- Trusted brand — With a stellar reputation in content, I know Originality has my back.

As the volume of AI-generated content grows each year, manual checking will soon be impractical. For any business using AI, the need for accurate detection like Originality will only increase.

I’m thrilled to have this peace of mind as models like Claude, GPT-4, and more emerge. Originality helps future-proof content creation.

Final Thoughts

As a pro writer, I struggled to tell the difference between AI text and human writing. Advanced models like Claude and GPT-4 can make super nuanced, persuasive text that fools me a lot.

I felt overwhelmed trying to confirm my work was original when using AI writing help. So I was thrilled to find Originality about 6 months ago — it’s been the solution I desperately needed.

After daily use in my workflow, I confidently recommend Originality to any writer using AI generation tools. Here’s why it’s been so valuable for me:

- It helps me maximize gains from AI assistants without losing the originality and integrity of my finished work.

- Robust training data, optimized neural network design, and quick updates give it great accuracy, even on sophisticated AI models.

- For multilingual content, support for 12 more languages brings huge value.

- I appreciate that they continuously improve it to reduce false positives and detect new AI models.

As a pro who depends on publishing original valuable content, using an AI detector like Originality gives essential peace of mind. I no longer worry about content integrity when I use AI for drafting.

If you struggle to confirm originality like I did, I’d definitely suggest trying Originality. In my experience, it’s the real deal for sustainable AI content creation long-term.

Ready to give it a try?

About the Author

Meet Alex Kosch, your go-to buddy for all things AI! Join our friendly chats on discovering and mastering AI tools, while we navigate this fascinating tech world with laughter, relatable stories, and genuine insights. Welcome aboard!
