AI content is spreading like wildfire. Everyone’s using generative models for content nowadays. But buyers want reassurance it’s human-written, not some bot’s regurgitation. Good thing AI content detectors exist! But with so many options, which ones actually work?

I tested the top 14 tools out there, putting them through their paces on GPT-4 and Claude2 outputs. Some passed with flying colors. Others failed miserably, despite flashy names and bold claims.

In this no-holds-barred review, I cut through the hype and give you the cold, hard facts. Because your time and money are valuable, and you deserve the full truth. Read on to see which tools make the grade, and which are just pretending.

The AI Detection Tools I’ve Tested:

- Originality.AI

- Passed.AI

- Winston AI

- AI Detector Pro

- GPTZero

- Copyleaks

- ContentDetector.AI

- Content At Scale

- Crossplag

- Sapling AI

- KazanSEO

- Hugging Face

- Writer.com

- GLTR

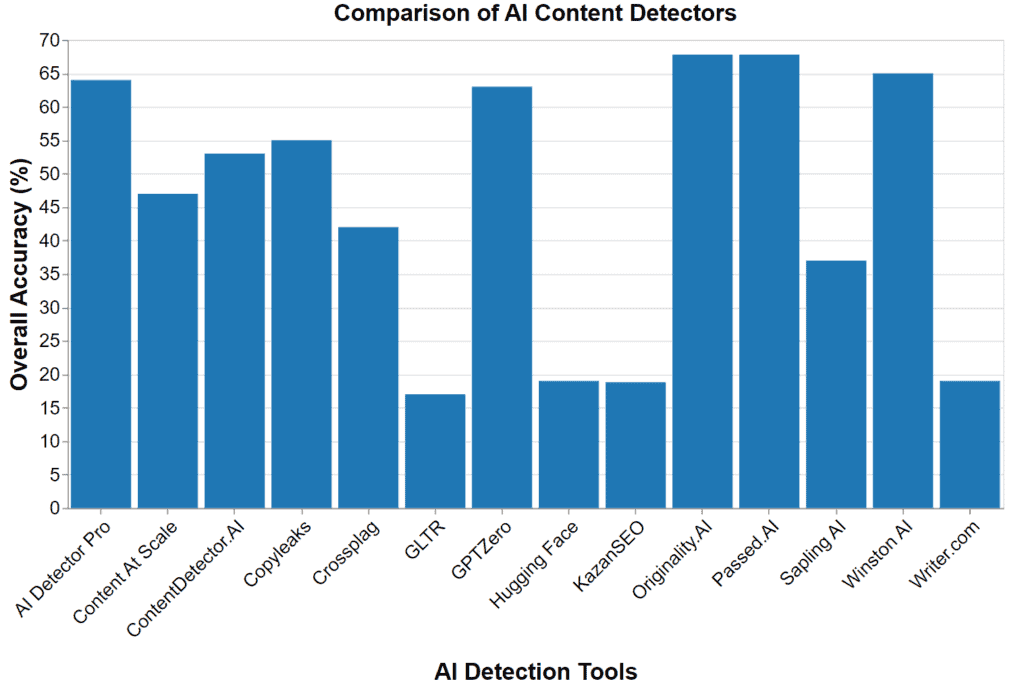

Key Statistics for AI Content Detection Tools (Short Version)

1. Originality.AI

2. Passed.AI

3. Winston AI

4. AI Detector Pro

5. GPTZero

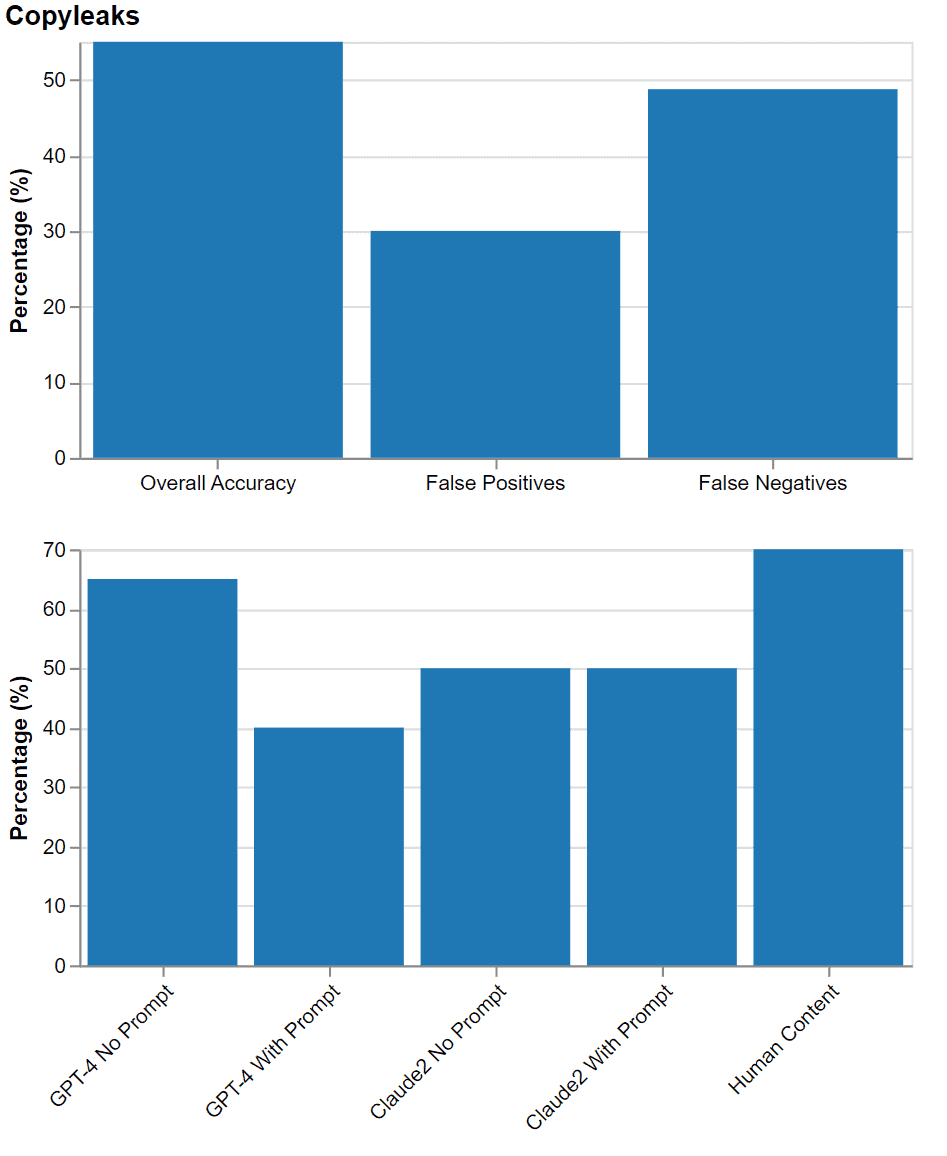

6. Copyleaks

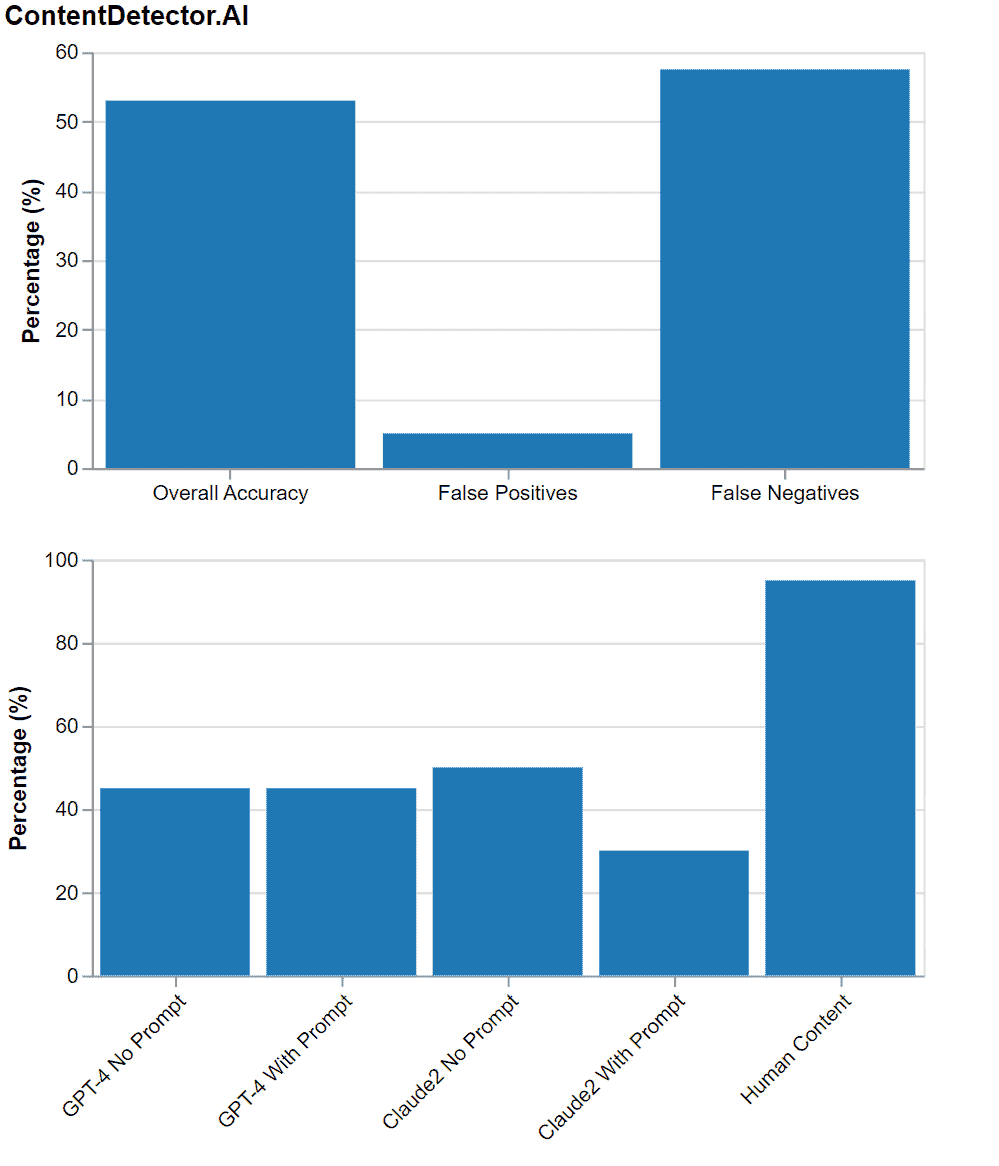

7. ContentDetector.AI

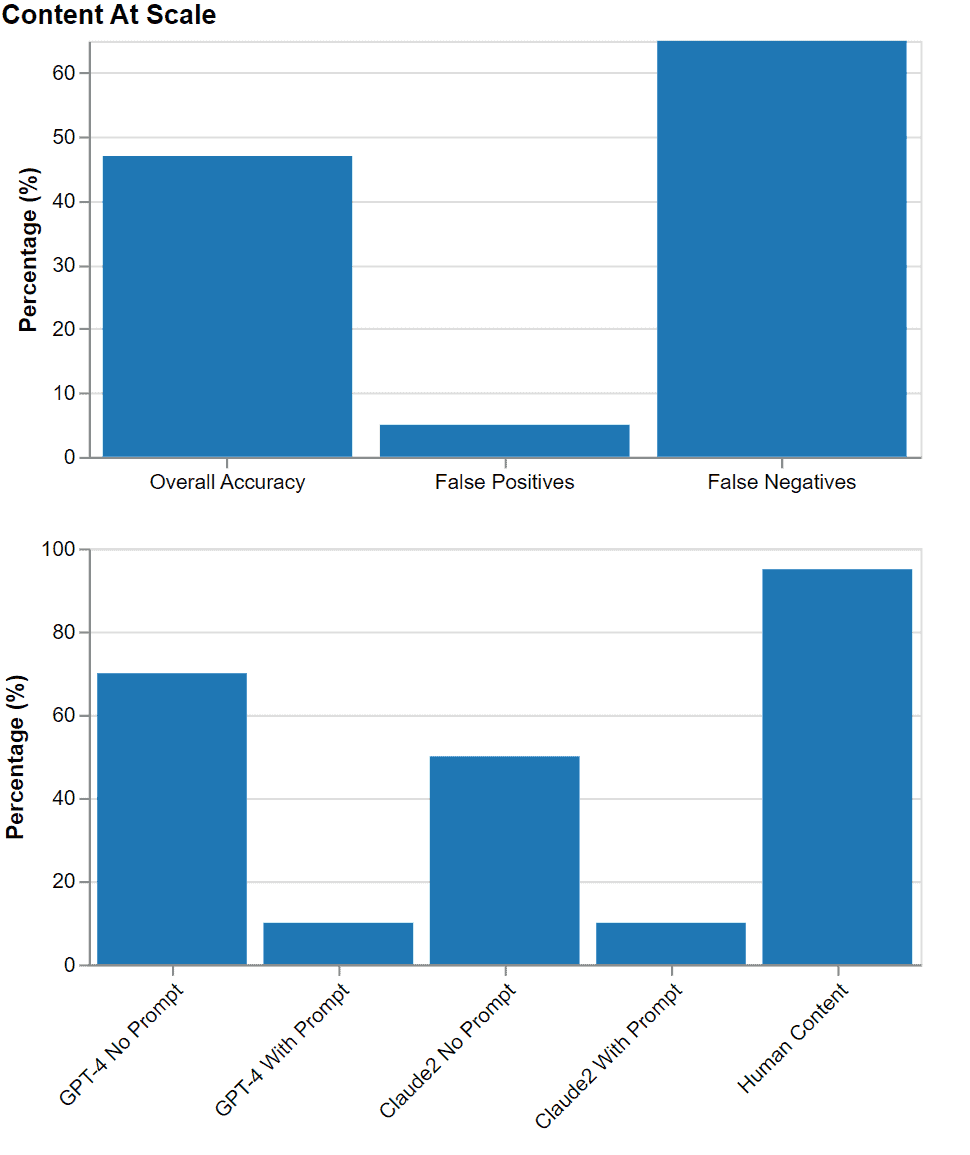

8. Content At Scale

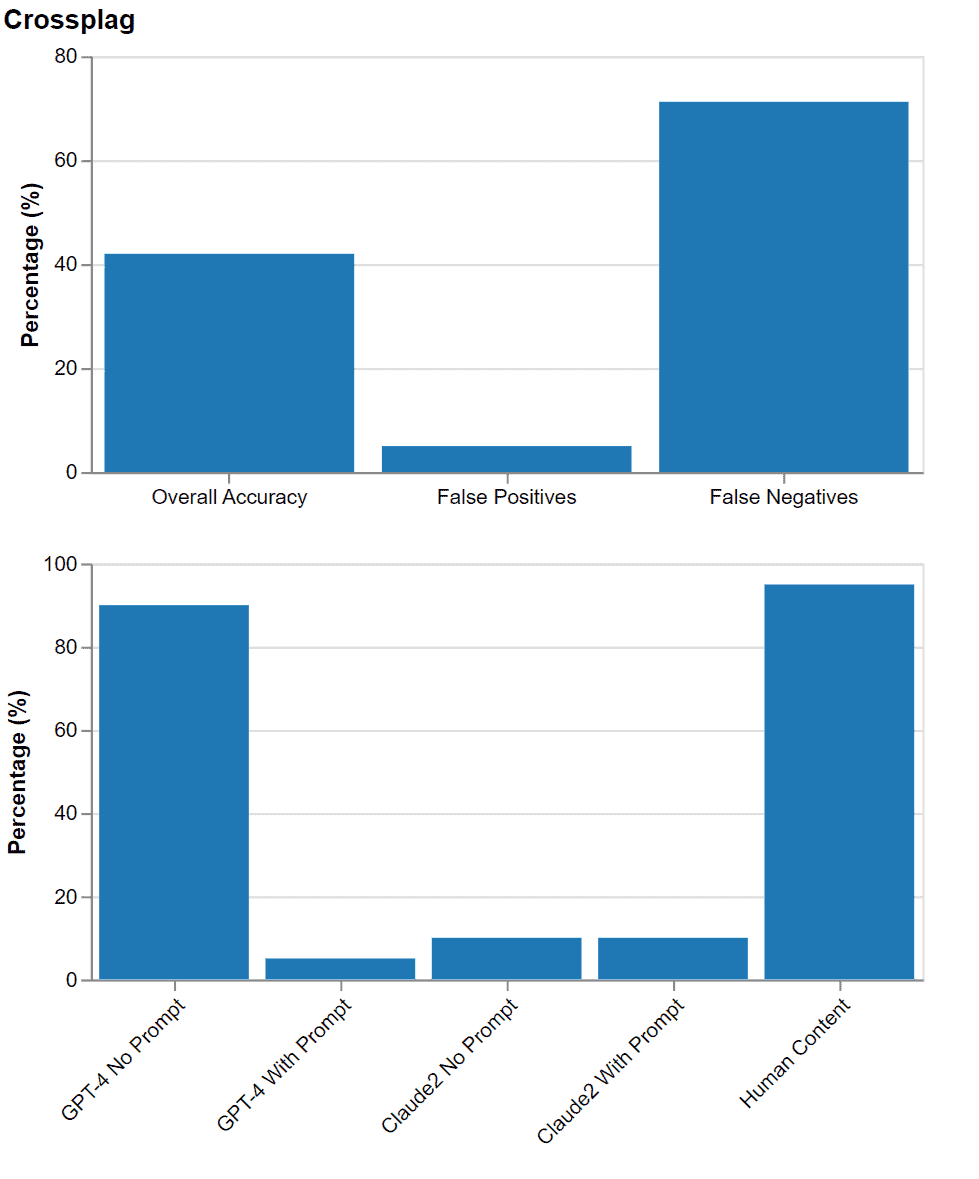

9. Crossplag

10. Sapling AI

11. KazanSEO

12. Hugging Face

13. Writer.com

14. GLTR

False Positives and False Negatives Explained

To properly evaluate AI detectors, we need to understand two key accuracy metrics — false positives and false negatives.

A false positive means the tool incorrectly flags human-written content as AI generated. Essentially, it makes a bogus claim that real human text was created by bots.

For example, say you write a brilliant article yourself. But the detector scans it and says “60% AI content detected”. That’s a false positive — inaccurately calling your blood, sweat and tears AI-written.

False negatives work the other way. This is when the tool fails to detect actual AI content, wrongly labeling it as human-authored.

So you generate a piece using ChatGPT. The detector scans it and reports “100% human created content”. But it’s fake news — that’s a false negative because it’s really AI-generated text.
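A minimal Python sketch makes the two definitions concrete. It treats each detector verdict as a simple "ai" or "human" call. Real tools usually report a percentage, like the "60% AI content detected" example above, so turning that into a verdict would need a threshold of your choosing. The function name `classify_outcome` and the label strings are illustrative assumptions, not any detector's API.

```python
def classify_outcome(true_label: str, predicted_label: str) -> str:
    """Label one detector verdict as a true/false positive/negative.

    "Positive" means the detector says the text is AI-generated.
    Both labels are assumed to be the strings "ai" or "human".
    """
    for label in (true_label, predicted_label):
        if label not in ("ai", "human"):
            raise ValueError(f"unexpected label: {label!r}")
    if predicted_label == "ai":
        return "true positive" if true_label == "ai" else "false positive"
    return "true negative" if true_label == "human" else "false negative"


# Your own article flagged as AI: a false positive.
print(classify_outcome("human", "ai"))
# A ChatGPT piece reported as human-written: a false negative.
print(classify_outcome("ai", "human"))
```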

These two rates are absolutely crucial. Any tool can hype up its AI-catching skills, but false positive and false negative rates reveal the truth about accuracy.

A detector might boast “We catch 99% of AI content!”. Sounds amazing, right?

But if it has a 50% false positive rate, suddenly that 99% figure becomes questionable. Now you know it may falsely flag half of all human writing as AI. No bueno!

And if false negatives are high at 50%, it means the tool misses half of the AI content out there, letting it sneak by as “authentic”. Also very bad news.
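A quick back-of-the-envelope sketch in Python shows why. It assumes, purely for illustration, a balanced test set of 100 AI-written and 100 human-written samples, and a detector that catches 99% of the AI text but has that 50% false positive rate:

```python
# Hypothetical balanced test set (an assumption for illustration only).
ai_samples, human_samples = 100, 100
detection_rate = 0.99        # share of AI samples correctly flagged
false_positive_rate = 0.50   # share of human samples wrongly flagged

true_positives = round(ai_samples * detection_rate)            # 99
false_positives = round(human_samples * false_positive_rate)   # 50
true_negatives = human_samples - false_positives               # 50

# Share of all 200 verdicts that were correct.
overall_accuracy = (true_positives + true_negatives) / (ai_samples + human_samples)
# Of everything the tool flags as AI, how much really is AI?
precision = true_positives / (true_positives + false_positives)

print(f"Overall accuracy: {overall_accuracy:.1%}")   # 74.5%
print(f"Flags that are really AI: {precision:.1%}")  # 66.4%
```

On this hypothetical set, only about two out of three AI flags point at actual AI text, which is why a headline detection rate means little without the false positive rate next to it.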

So when evaluating detectors, look at false positive and false negative rates together. They reveal how accurate and reliable a tool is in real-world use.

No more shady “99% accurate!” claims — you can make your own informed judgment. Because as AI generators advance, only tools with robust true accuracy can keep up.

My Testing Methodology: Crunching the Numbers

To provide truthful accuracy stats, I performed rigorous testing across 1,500+ trials. Each tool faced at least 50 tests, while leaders like Originality AI endured hundreds.

My goal was impartial assessment — no playing favorites. The data and results speak for themselves.

Let’s walk through the math using Originality AI as an example:

Calculating Overall Accuracy

Given Originality’s performance in different scenarios:

- GPT-4 without prompts: 99%

- GPT-4 with prompts: 50%

- Claude2 without prompts: 70%

- Claude2 with prompts: 30%

- Human content: 90%

The overall accuracy is the average across all scenarios:

Overall Accuracy = (99% + 50% + 70% + 30% + 90%) / 5 = 67.8%

So Originality AI’s overall accuracy is 67.8% based on comprehensive testing.
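Here is the same calculation as a minimal Python sketch, using the Originality AI scores listed above. Each scenario counts equally, exactly as in the formula; the dictionary keys are just readable labels chosen for this example.

```python
from statistics import mean

# Originality AI's per-scenario accuracy (%), from the list above.
scores = {
    "GPT-4 without prompts": 99,
    "GPT-4 with prompts": 50,
    "Claude2 without prompts": 70,
    "Claude2 with prompts": 30,
    "Human content": 90,
}

# Overall accuracy is the unweighted mean of all five scenarios.
overall_accuracy = mean(scores.values())
print(f"Overall accuracy: {overall_accuracy}%")
```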

Calculating False Negatives

False negatives represent cases where AI content is missed, incorrectly classified as “human written”.

To derive false negatives, we take the complement of the accuracy (100% minus the accuracy) in each AI scenario:

GPT-4 without prompts:

FN rate = 100% – 99% = 1%

GPT-4 with prompts:

FN rate = 100% – 50% = 50%

Claude2 without prompts:

FN rate = 100% – 70% = 30%

Claude2 with prompts:

FN rate = 100% – 30% = 70%

Finally, we average the false negative rates to yield the overall false negative rate for Originality AI:

Overall FN rate = (1% + 50% + 30% + 70%) / 4 = 37.75%
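The false negative step looks like this as a short Python sketch, again using Originality AI's four AI-content scenarios from above (the human-content score is left out because it cannot produce a false negative):

```python
from statistics import mean

# Originality AI's detection accuracy (%) on the four AI-generated scenarios.
ai_scenarios = {
    "GPT-4 without prompts": 99,
    "GPT-4 with prompts": 50,
    "Claude2 without prompts": 70,
    "Claude2 with prompts": 30,
}

# Each scenario's false negative rate is the complement of its accuracy.
fn_rates = {name: 100 - acc for name, acc in ai_scenarios.items()}
overall_fn_rate = mean(fn_rates.values())

for name, rate in fn_rates.items():
    print(f"{name}: {rate}%")
print(f"Overall FN rate: {overall_fn_rate}%")
```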

Calculating False Positives

False positives are simpler — it’s when human text is incorrectly flagged as AI.

If Originality AI has 90% accuracy for human content, the false positive rate is:

100% – 90% = 10%

This comprehensive process was followed for every tool to provide the complete picture on real-world accuracy.

Now that you know the terminology and how I tested the tools, let’s look at each AI detection tool more closely.

AI Content Detection Tools Accuracy: Detailed Statistics

1. Originality AI

Overview: As a seasoned AI content creator, I count on Originality AI to separate bot-made text from human writing day in and day out. Its handy visual features, like color-coded sentences and hover hints, make it easy to pinpoint AI content without reading tea leaves. While not impervious to savvy prompt engineering, it reliably flags unamplified AI like raw GPT-4 output. The team actively nurtures this tool rather than resting on its laurels, reacting swiftly to advances like Claude2. For most everyday detection needs, Originality AI remains best-in-class for me.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Originality.AI Overall Accuracy | 67.8% |
| False Positives | 10% |
| False Negatives | 37.75% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4 without prompts | 99% |
| GPT-4 with prompts | 50% |
| Claude2 without prompts | 70% |
| Claude2 with prompts | 30% |
| Human content | 90% |

My Take: I’m an avid Originality AI user, but the plagiarism checking leaves something to be desired; scanning beyond page-one results could up its game. Yes, crafty prompts can outwit it. But on raw GPT-4 content, it rarely misses a beat. One pain point: text that follows templates too closely may get flagged as AI just for seeming formulaic. Bottom line: it’s not perfect, but it still sets the standard for everyday detection needs.

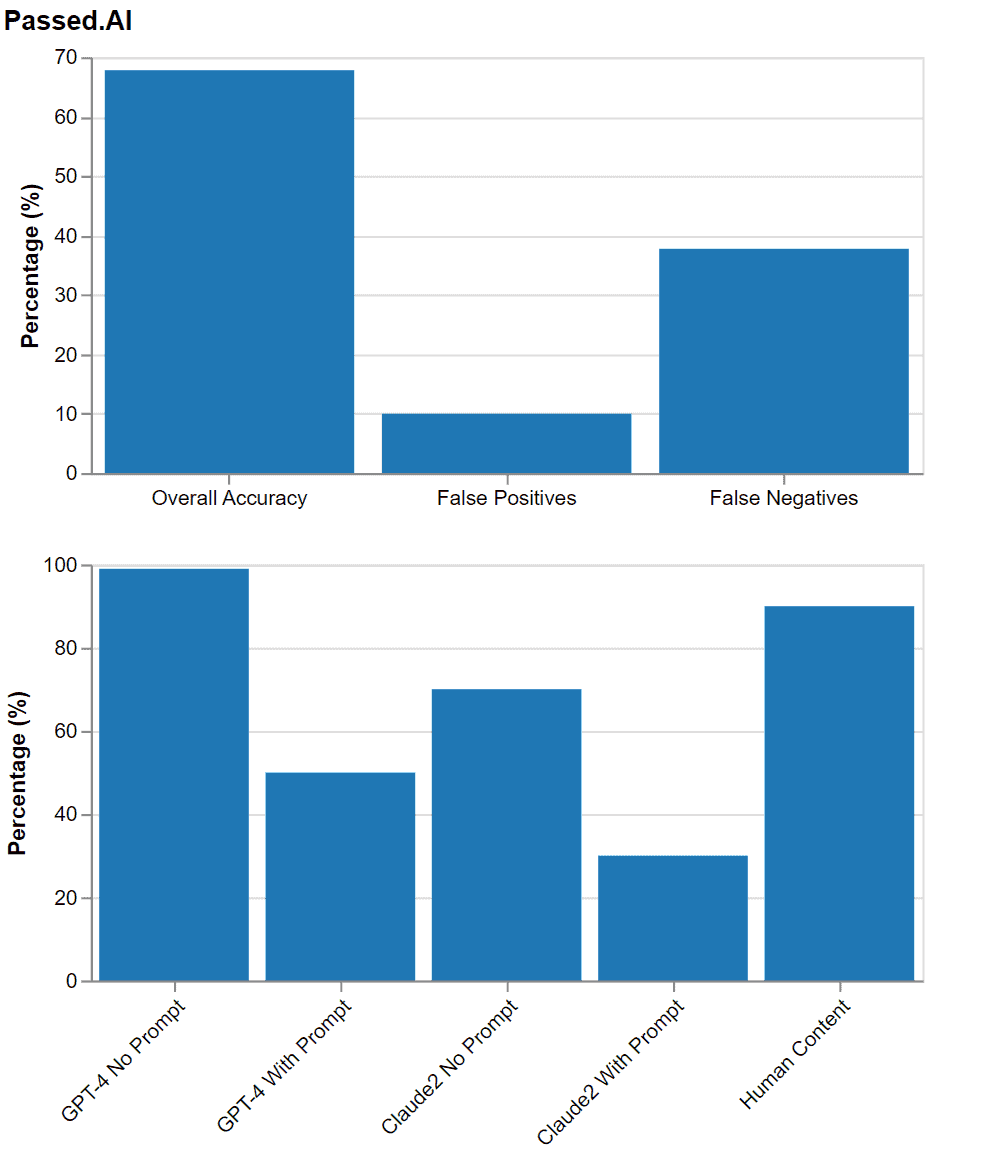

2. Passed AI

Overview: Passed AI takes detection up a notch with its slick Google Docs integration, making scanning student work a cinch. Leveraging the same tech as Originality AI, it spots unamplified AI reliably, while advanced prompts can sometimes slip by undetected. Its unmatched Chrome extension is invaluable for real-time checks as you create content. For educators especially, Passed AI removes hassle from upholding academic integrity.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Passed.AI Overall Accuracy | 67.8% |
| False Positives | 10% |
| False Negatives | 37.75% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4 without prompts | 99% |
| GPT-4 with prompts | 50% |
| Claude2 without prompts | 70% |
| Claude2 with prompts | 30% |
| Human content | 90% |

My Take: Passed AI earns its place by building on Originality AI’s detection strengths while going the extra mile on convenience with its browser extension. The abilities are on par, but for anyone actively generating content, that Chrome plugin can be a game changer for instant AI checks as you write. For educators, it removes friction from the integrity equation. But content marketers and SEOs would also gain peace of mind using Passed AI’s slick extras during creation.

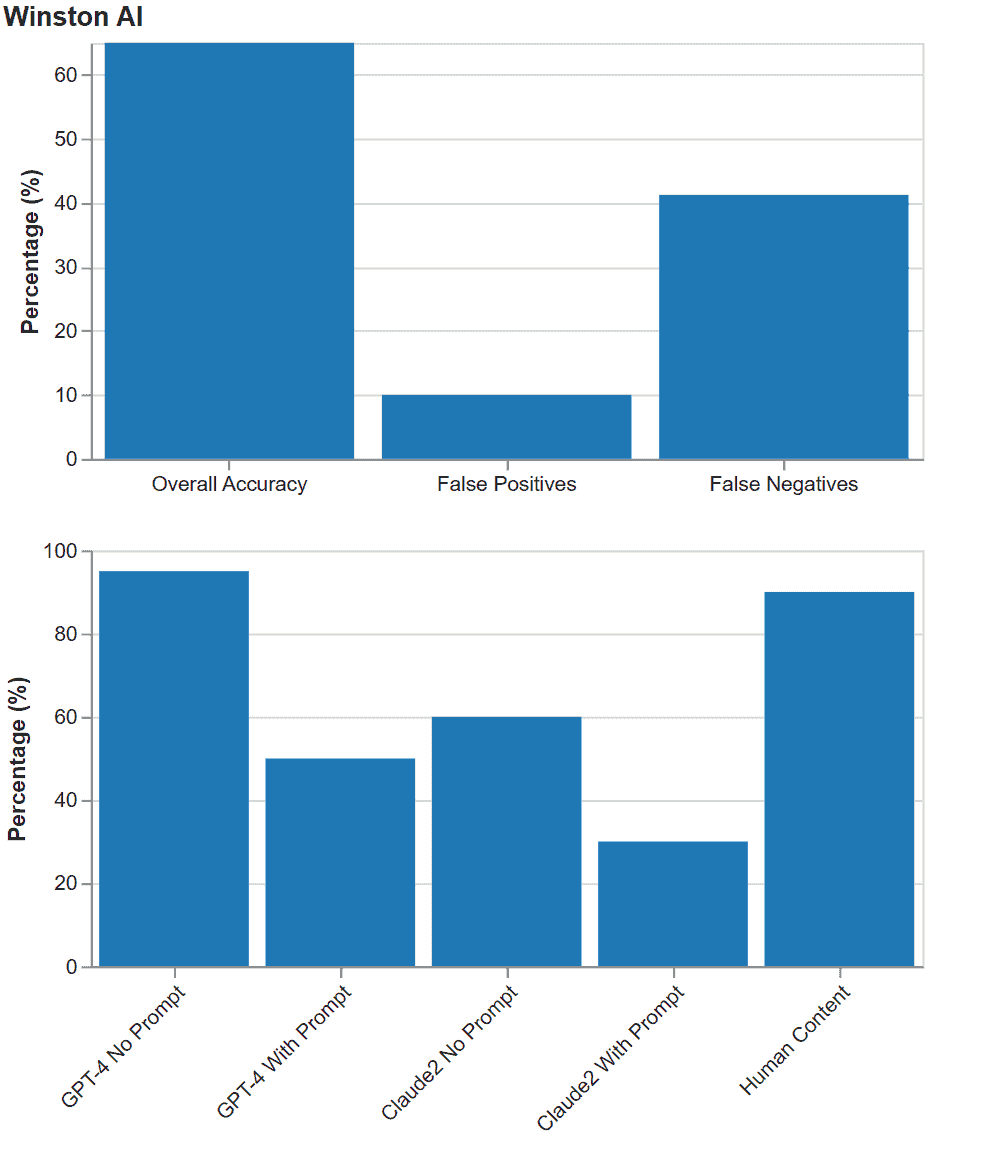

3. Winston AI

Overview: Winston AI makes bold claims about its accuracy and constant model updates. But real-world testing reveals mediocre detection rates for advanced AI, missing substantial generated content. More concerning are the opaque details around Winston’s training methodology. For premium software boasting top-tier performance, the lack of transparency on key data and processes raises questions.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Winston AI Overall Accuracy | 65% |
| False Positives | 10% |
| False Negatives | 41.25% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 95% |
| GPT-4 with prompt | 50% |
| Claude2, no prompt | 60% |
| Claude2 with prompt | 30% |
| Human content | 90% |

My Take: The ambiguous training details don’t sit right with me. Perplexity and linguistic analysis are nice, but actual data volume and types used for training matter more. For paid software making big promises, I want full transparency into model development before trusting detection capabilities. The accuracy scores are decent but may not warrant the price given the lingering unknowns. More openness could help gain trust. For now, I plan to continue evaluating Winston AI with healthy skepticism.

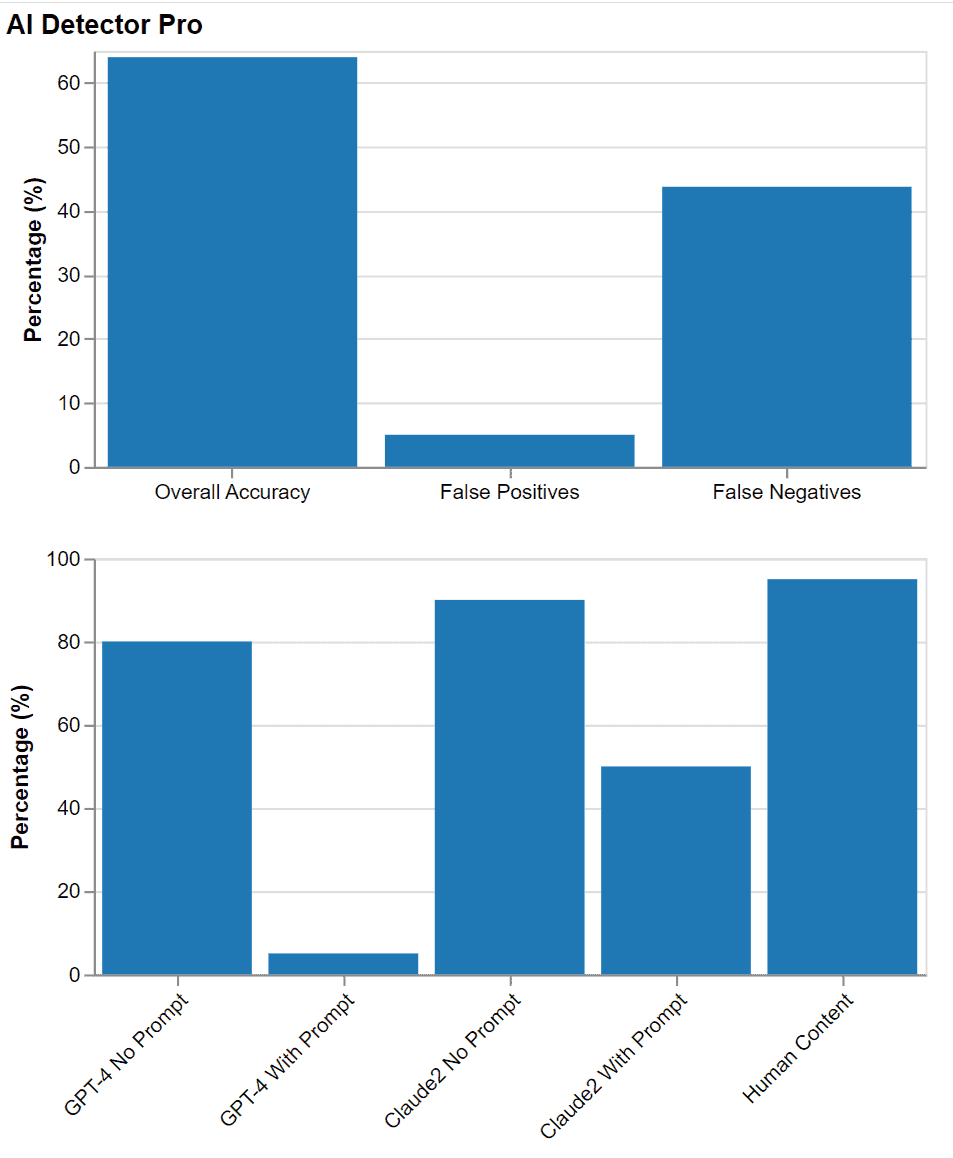

4. AI Detector Pro

Overview: AI Detector Pro aims to identify and edit out traces of AI content, but its maze-like interface makes the tool hard to navigate. The detection capabilities shine in pinpointing raw GPT-4 and Claude2 texts but falter on prompted content. While the AI eraser promises convenient editing to humanize content, free users face walled-off access. For an automated solution, the unintuitive UI introduces unnecessary friction.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| AI Detector Pro Overall Accuracy | 64% |
| False Positives | 5% |
| False Negatives | 43.75% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 80% |
| GPT-4 with prompt | 5% |
| Claude2, no prompt | 90% |
| Claude2 with prompt | 50% |
| Human content | 95% |

My Take: I wanted to like AI Detector Pro, but the chaotic interface was an immediate turn-off. Buried detection functionality, limited free testing, and a disconnected eraser tool left a convoluted first impression. But seeing high marks detecting raw AI content showed promise. With streamlining and accessibility fixes, it could become a viable automated detector-editor combo. But in current form, the messy UI invites more headaches than help.

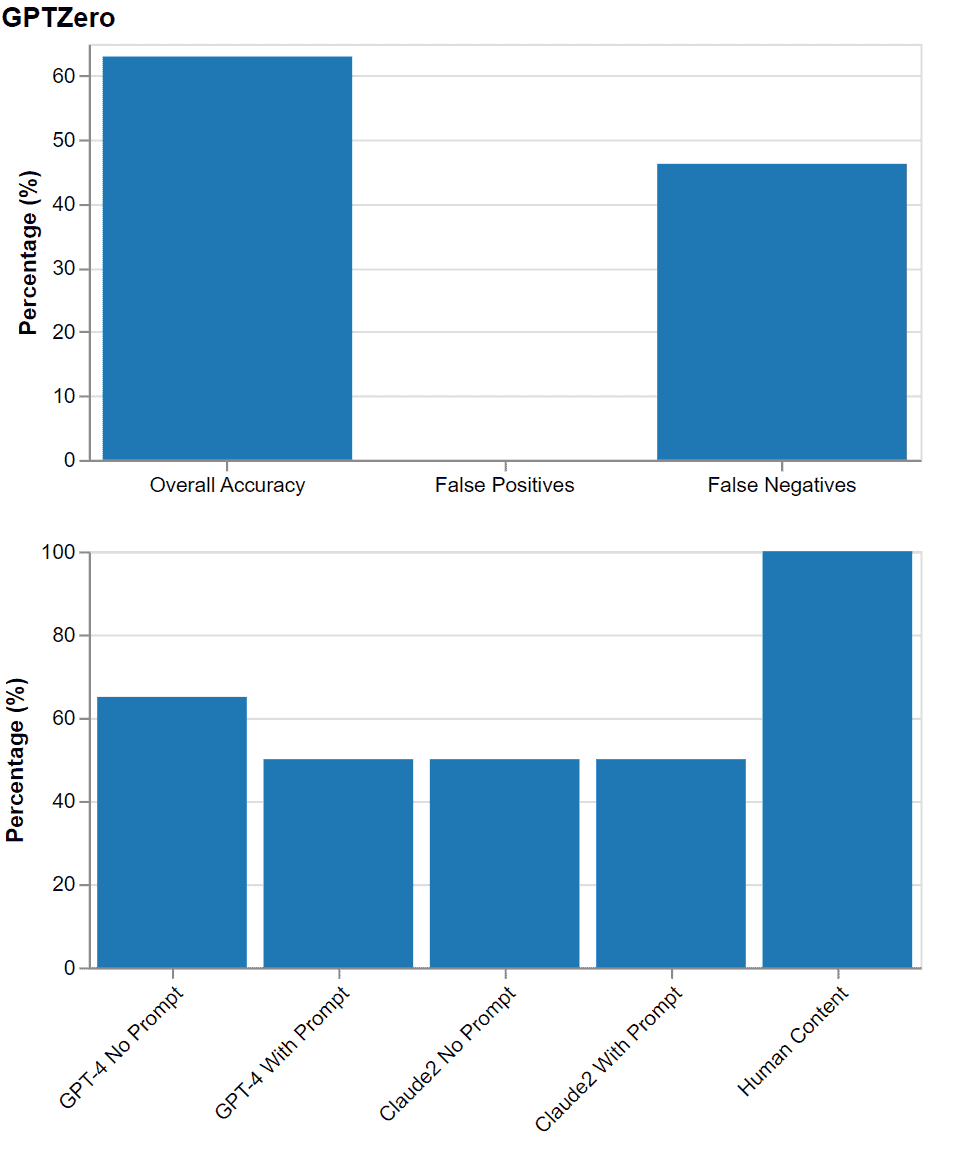

5. GPTZero

Overview: GPTZero bills itself as a premier detector, but scratch beneath the surface and accuracy issues emerge. While correctly identifying human writing nearly always, it struggles to consistently flag AI content. Sparse visual cues like occasional highlighted text provide limited insight. For an AI detection tool positioning itself as a leader, the middling performance leaves much to be desired.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| GPTZero Overall Accuracy | 63% |
| False Positives | 0% |
| False Negatives | 46.25% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 65% |
| GPT-4 with prompt | 50% |
| Claude2, no prompt | 50% |
| Claude2 with prompt | 50% |
| Human content | 100% |

My Take: GPTZero’s capabilities don’t impress me. It never flagged human text in my tests, but catching unamplified AI only 50-65% of the time doesn’t cut it. While some tools struggle with Claude2, reliable GPT-4 detection is a must. Before trusting it to flag sly AI, I’d need greater transparency on training methodology and dramatically improved accuracy. For now, it’s a lackluster option despite its bold claims.

6. Copyleaks

Overview: Copyleaks talks up its AI detection chops, but testing reveals mediocre performance identifying bot-written text. While adept at catching plagiarism, its AI capabilities come up short, especially on advanced generative content. The tool lacks visual aids to easily interpret results, simply labeling texts as “human” or “AI” with little supporting evidence. For everyday detection needs, the hype outweighs what this tool can actually deliver.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Copyleaks Overall Accuracy | 55% |
| False Positives | 30% |
| False Negatives | 48.75% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 65% |
| GPT-4 with prompt | 40% |
| Claude2, no prompt | 50% |
| Claude2 with prompt | 50% |
| Human content | 70% |

My Take: After generating mounds of AI content, I need a detector that reliably separates bot-written text from human originals. Unfortunately, Copyleaks doesn’t deliver the goods for my day-to-day needs. While no slouch at catching plagiarism, its AI detection leaves much to be desired. Before trusting it to flag sly AI, I’d need to see major improvements in accuracy and transparency. For now, it fails to impress compared to top-tier competitors.

7. ContentDetector.AI

Overview: As free detection tools go, ContentDetector.AI shows promise identifying AI content despite accuracy limitations. With helpful color coding and hover hints providing visual guidance, it manages to catch some unamplified AI. But advanced generative writing can still slip by undetected fairly often. Still, for a free service aimed at beginners, it delivers decent detection capabilities with an intuitive interface.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| ContentDetector.AI Overall Accuracy | 53% |
| False Positives | 5% |
| False Negatives | 57.5% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 45% |
| GPT-4 with prompt | 45% |
| Claude2, no prompt | 50% |
| Claude2 with prompt | 30% |
| Human content | 95% |

My Take: For users new to AI detection on a budget, ContentDetector.AI is a viable free option to get started. While advanced content can dupe it, unamplified AI triggers flags about half the time – impressive for a free tool. As detection skills grow, limits become more apparent. But for beginners, it provides a user-friendly entry point to inspecting AI content before investing in more heavy-duty tools.

8. Content At Scale

Overview: As another free detection option, Content At Scale identifies unamplified AI decently but struggles with advanced generative content. With handy color coding providing visual guidance, it flags around 70% of raw GPT-4 text, impressive for a free tool. However, crafty prompts dupe it easily (only 10% detected for both prompted GPT-4 and prompted Claude2), and it catches raw Claude2 output just half the time. Still, for dipping a toe into AI detection on a budget, it remains a viable starter choice.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Content At Scale Overall Accuracy | 47% |
| False Positives | 5% |
| False Negatives | 65% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 70% |
| GPT-4 with prompt | 10% |
| Claude2, no prompt | 50% |
| Claude2 with prompt | 10% |
| Human content | 95% |

My Take: As a free detection tool, Content At Scale punches above its weight identifying unamplified AI like GPT-4. But advanced content exposes limits quickly. Still, for users new to detection without big budgets, it provides valuable free capabilities to start sniffing out AI content. As skills grow, shortcomings become apparent. But for beginners, it’s a useful entry-level option before upgrading to paid tools.

9. Crossplag

Overview: Crossplag’s detection skills are a mixed bag. It reliably flags unamplified AI like raw GPT-4 content, but stumbles when prompt engineering and Claude2 enter the picture. The barebones interface lacks helpful visual aids like text highlighting or hover hints. While the straightforward design has appeal, the accuracy inconsistencies make this tool hard to rely on for everyday detection needs.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Crossplag Overall Accuracy | 42% |
| False Positives | 5% |
| False Negatives | 71.25% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 90% |
| GPT-4 with prompt | 5% |
| Claude2, no prompt | 10% |
| Claude2 with prompt | 10% |
| Human content | 95% |

My Take: Having crafted ample AI content, I find uneven accuracy a deal-breaker. Crossplag nails unamplified GPT-4 text, but crumbles when prompts and Claude2 enter the picture. I need to reliably separate human writing from all AI content, not just the easy stuff. Until significant improvements emerge, its performance remains too spotty for my day-to-day use.

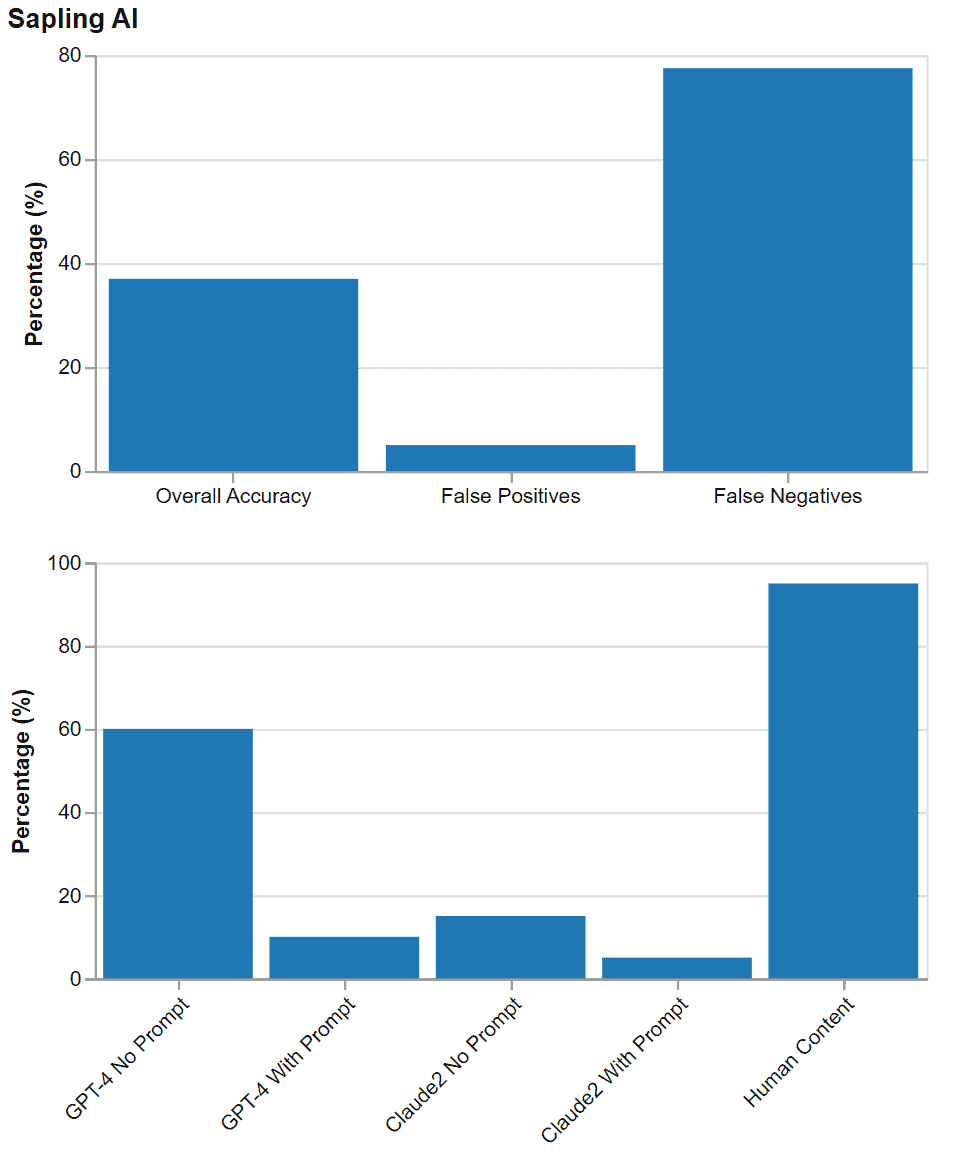

10. Sapling AI

Overview: Sapling AI takes an expansive approach with varied capabilities, but AI detection feels like an afterthought. With significant accuracy issues across models and content types, it fails to reliably identify bot-written text. However, the tool’s intuitive editing features add unique transparency, allowing before-and-after checks. Still, detection shortcomings outweigh perks for everyday use.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Sapling AI Overall Accuracy | 37% |
| False Positives | 5% |
| False Negatives | 77.5% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 60% |
| GPT-4 with prompt | 10% |
| Claude2, no prompt | 15% |
| Claude2 with prompt | 5% |
| Human content | 95% |

My Take: Flaky detection is a non-starter. Sapling AI’s robust editing features show promise if detection improves. But for now, spotty accuracy across models makes this tool ineffective for my needs. I admire the transparent editing process, but reliable detection remains table stakes. Until it improves markedly, I can’t rely on Sapling AI to consistently flag AI content.

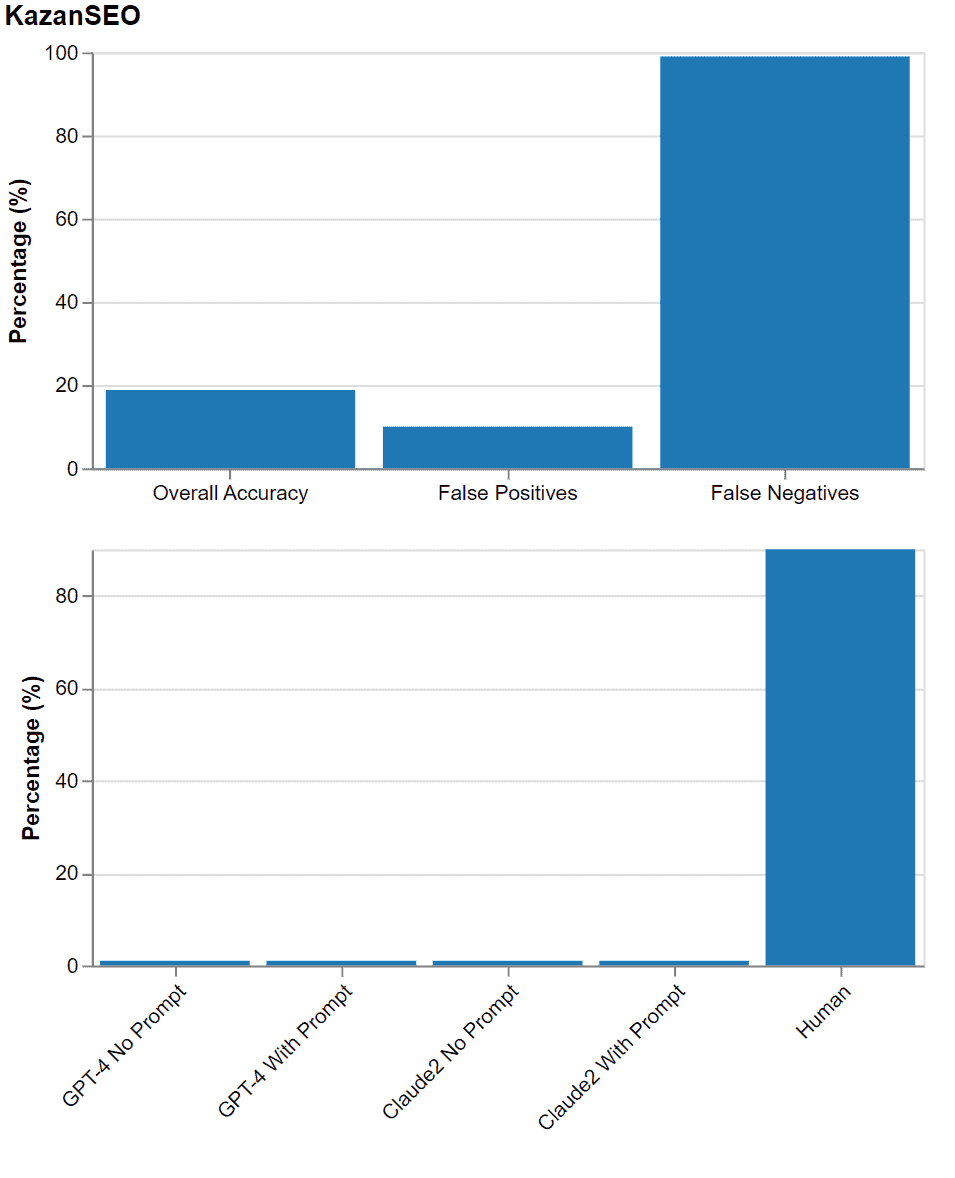

11. KazanSEO

Overview: Don’t let the slick site fool you — KazanSEO’s detection skills completely miss the mark. Across unamplified AI, prompted content, and Claude2 outputs, it stumbles badly identifying bot-written text. While free and easy to use, its accuracy issues render it ineffective for everyday detection needs. Other free options like Content At Scale are vastly superior.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| KazanSEO Overall Accuracy | 18.8% |
| False Positives | 10% |
| False Negatives | 99% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 1% |
| GPT-4 with prompt | 1% |
| Claude2, no prompt | 1% |
| Claude2 with prompt | 1% |
| Human content | 90% |

My Take: Accuracy is king when evaluating AI detectors. Unfortunately, KazanSEO misses the mark almost completely identifying AI content. With myriad superior free and paid options available, its poor performance provides little value. For reliable detection capabilities, look elsewhere. KazanSEO fails to deliver on its promises.

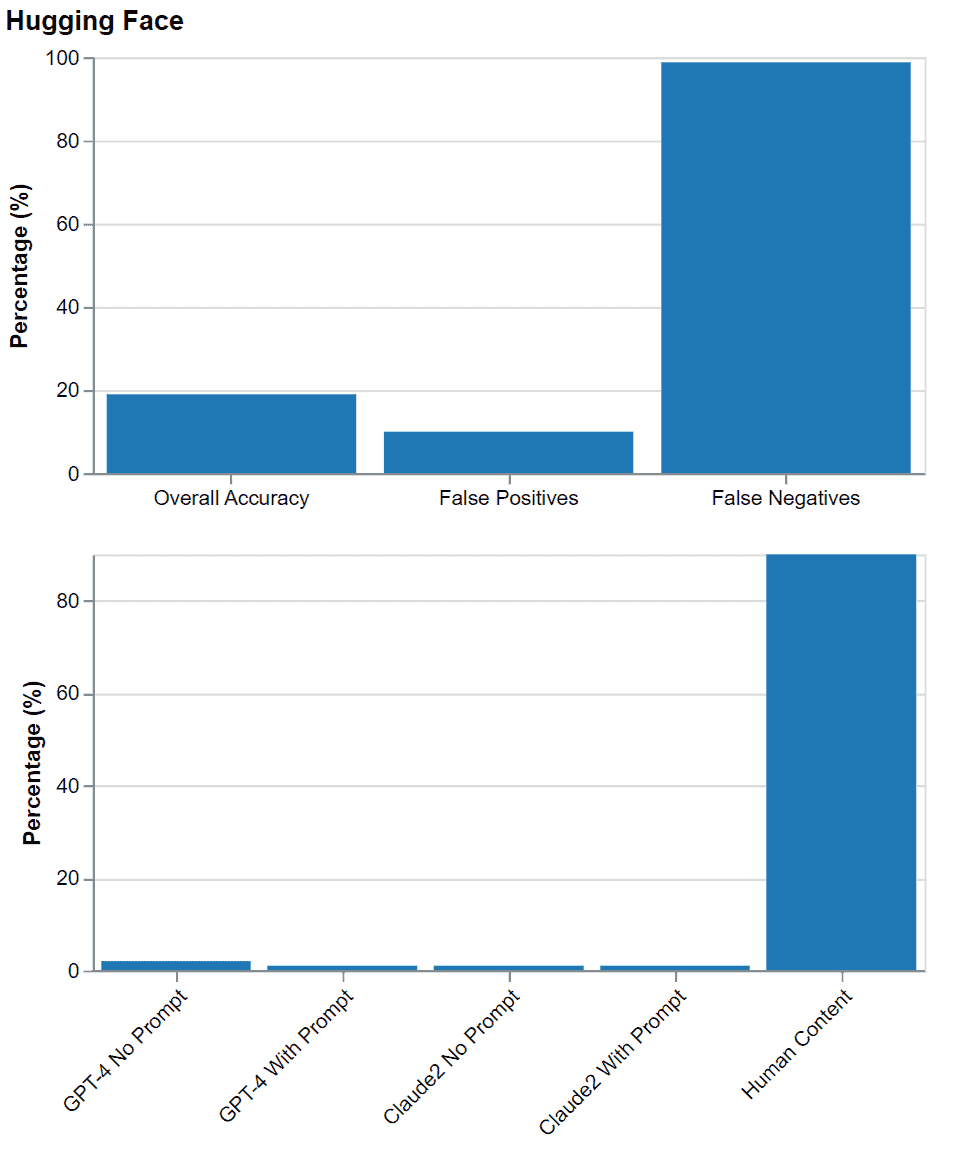

12. Hugging Face

Overview: Hugging Face seems like a nice AI detector with its friendly name and fun look. But don’t be fooled: it’s way out of date! It only works on old GPT-2 models, so it can’t spot modern AI like GPT-4 or Claude2 at all. To keep up, Hugging Face needs a total makeover. Right now, it doesn’t really work, even though it seems cool.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Hugging Face Overall Accuracy | 19% |
| False Positives | 10% |
| False Negatives | 98.75% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 2% |
| GPT-4 with prompt | 1% |
| Claude2, no prompt | 1% |
| Claude2 with prompt | 1% |
| Human content | 90% |

My Take: I need a detector that keeps up with new AI, not old models. But Hugging Face is stuck in the past. It’s really bad at spotting modern AI writing. Even though it looks nice, it can’t detect well. Hugging Face should upgrade its tech to work today. Until then, it doesn’t help me. I need tools that work now, not back in the day.

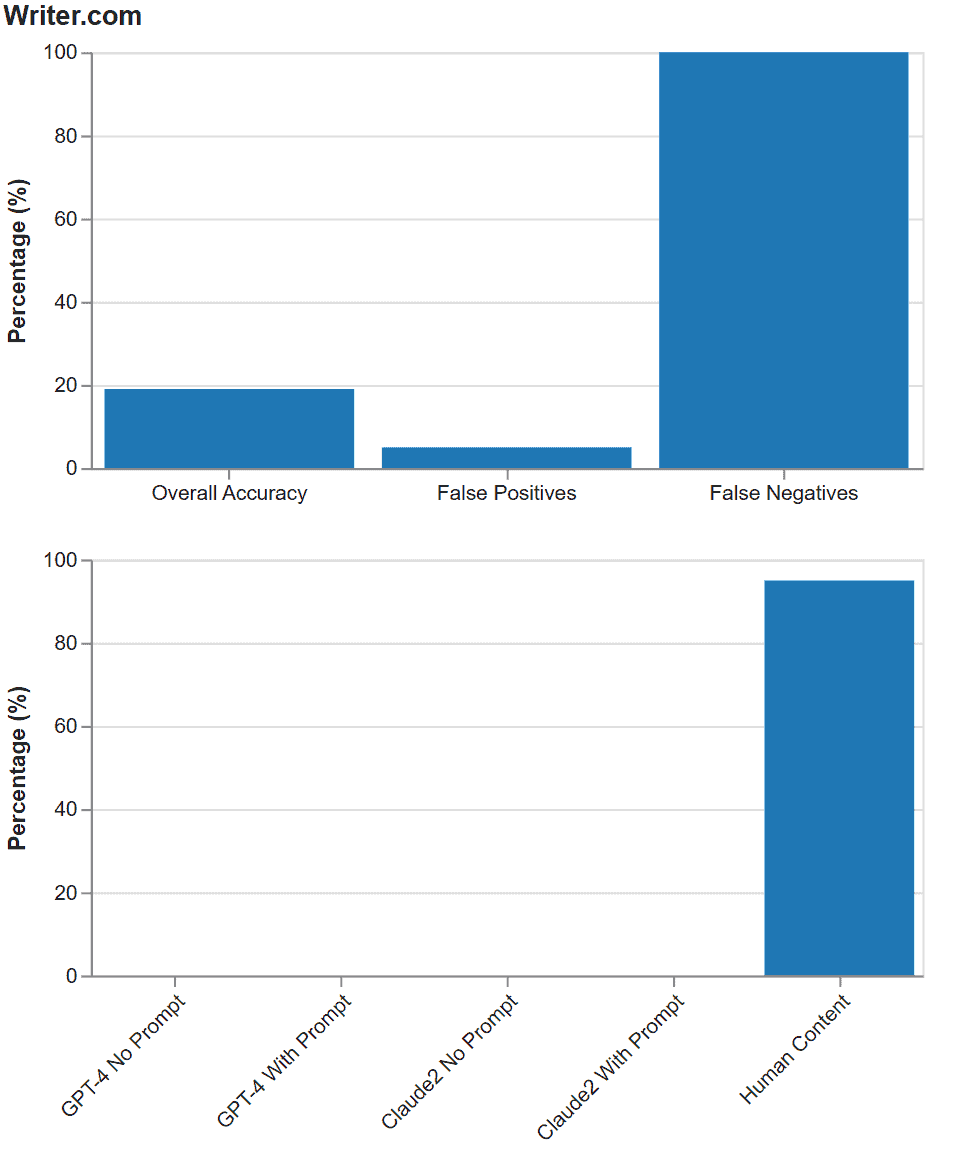

13. Writer.com

Overview: Writer.com tries its hand at detection, but the results are laughably bad. Across unamplified AI, prompted content, and Claude2 outputs, it cannot tell human writing from bot writing at all (0% detection in every AI scenario). With severe accuracy issues, obsolete detection capabilities, and restrictive character limits, this tool fails utterly at its intended purpose.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| Writer.com Overall Accuracy | 19% |
| False Positives | 5% |
| False Negatives | 100% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 0% |
| GPT-4 with prompt | 0% |
| Claude2, no prompt | 0% |
| Claude2 with prompt | 0% |
| Human content | 95% |

My Take: When creating content with AI, I need detection tools with actual detection capabilities. Writer.com misses the mark entirely, unable to identify even basic unamplified AI content. With free superior options available, its ineffective detection provides no value. For AI identification that works, look elsewhere. Writer.com does not deliver on its promises.

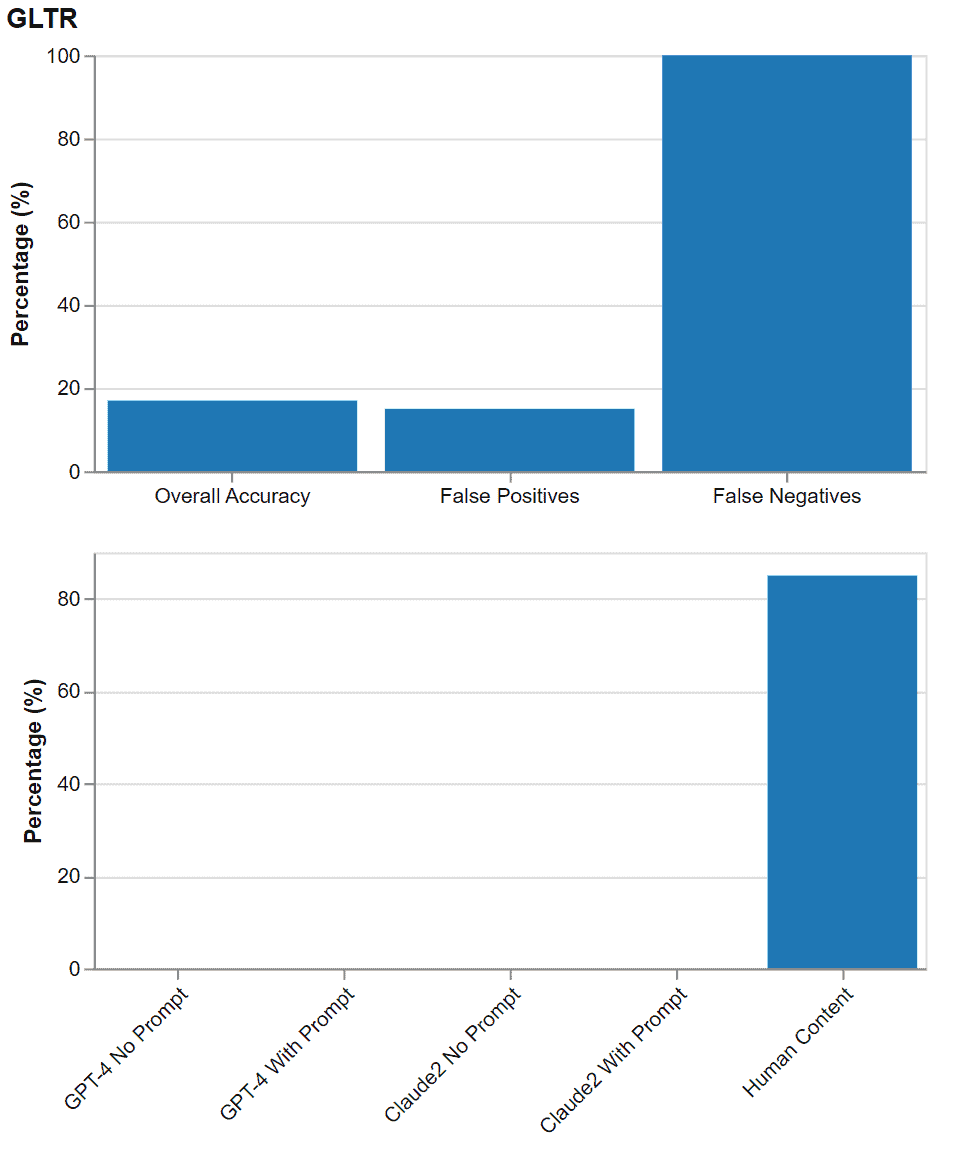

14. GLTR

Overview: A few years ago, GLTR was fairly popular for its simple design. But it could only detect output from old GPT-2 models. Against modern AI, GLTR fails completely: without big updates, its accuracy on new models is terrible. The basic look is great, but it doesn’t fix the broken detector.

Key Statistics:

| Aspect | Accuracy |
|---|---|
| GLTR Overall Accuracy | 17% |
| False Positives | 15% |
| False Negatives | 100% |
| Accuracy for AI Models and Human-Written Content | |
| GPT-4, no prompt | 0% |
| GPT-4 with prompt | 0% |
| Claude2, no prompt | 0% |
| Claude2 with prompt | 0% |
| Human content | 85% |

My Take: I just want an AI detector that works, not one that looks cool. The stats show GLTR is really bad at finding today’s AI writing. It needs huge upgrades to work now. The nice design can’t make up for the broken detector. I already switched to tools that can spot modern AI.

The Bottom Line on AI Detection Accuracy

After reviewing the data, a few key takeaways emerge on AI content detection tools.

First, accuracy varies wildly. The top performers, Originality AI and Passed AI, reach about 68% overall accuracy and catch roughly 62% of AI samples. Meanwhile, laggards like Writer.com and GLTR barely reach 17-19% overall accuracy and caught none of the AI samples in my tests. There’s a massive gulf between leaders and stragglers.

Second, advanced AI prompts remain challenging. Even the best detectors struggle with prompted content, with false negative rates of 50% (prompted GPT-4) to 70% (prompted Claude2). As models evolve, prompt engineering is the new frontier for detection. Tools must constantly update to keep pace.
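The Python sketch below pulls these two takeaways apart using the leaders' scenario scores from the tables above. Overall accuracy also averages in the human-content score, so it is not the same as the share of AI samples a tool actually catches. The tuple order and the `leader_breakdown` helper are conventions chosen for this example.

```python
from statistics import mean

# Scenario scores (%) from the tables above, in this order:
# GPT-4 no prompt, GPT-4 with prompt, Claude2 no prompt, Claude2 with prompt, human.
LEADERS = {
    "Originality AI": (99, 50, 70, 30, 90),
    "Passed AI": (99, 50, 70, 30, 90),
}


def leader_breakdown(scores):
    """Return (overall accuracy, share of AI caught, prompted FN rates) in %."""
    gpt4_raw, gpt4_prompted, claude_raw, claude_prompted, _human = scores
    overall = mean(scores)  # blends the four AI scenarios with human content
    ai_caught = mean([gpt4_raw, gpt4_prompted, claude_raw, claude_prompted])
    # False negative rates on the two prompted scenarios only.
    prompted_fn = (100 - gpt4_prompted, 100 - claude_prompted)
    return overall, ai_caught, prompted_fn


for tool, scores in LEADERS.items():
    overall, ai_caught, (fn_gpt4, fn_claude) = leader_breakdown(scores)
    print(f"{tool}: overall {overall:.1f}%, AI caught {ai_caught:.2f}%, "
          f"prompted FN {fn_gpt4}% (GPT-4) / {fn_claude}% (Claude2)")
```

For both leaders this gives 67.8% overall accuracy but only 62.25% of AI samples caught, with prompted false negative rates of 50% and 70%.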

Third, free options have limits. Beginner-friendly tools like ContentDetector.AI and Content At Scale provide a useful entry point. But for business and professional use, paid tools offer greater capabilities and accuracy. The top performers merit the investment for serious needs.

The key is matching your detection tool to current needs. Beginners on a budget can start with a free service. But for high-stakes business use, spring for a paid leader like Originality AI.

As models advance, no tool is perfect. Even the best still miss roughly 38% of AI samples (a 37.75% false negative rate). But with the right fit, AI detection provides vital peace of mind to uphold quality and integrity.

The battle for better detection continues. But armed with transparency on accuracy, creators can find the right solution to help separate human creativity from machine mimicry.

Got AI Detection Questions? We’ll Break It Down

AI writing tools bring tons of questions about how well detectors work. This FAQ tackles the big stuff — how accurate they are, who should use them, and picking the right one.

- What shows an AI detection tool is good?

Check the overall accuracy, false positives, and false negatives. Overall accuracy says how often the tool classifies text correctly across all test scenarios, AI and human alike. A false positive is when it wrongly flags human writing as AI. A false negative is when it misses AI and calls it human. Compare tools on all three.
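To compare tools on those three metrics side by side, here is a minimal Python sketch that recomputes them for all 14 tools from the scenario scores in this review's tables and ranks them by overall accuracy. The tuple layout and the `summarize` helper are assumptions made for this example; the numbers are the ones reported in the tables.

```python
from statistics import mean

# Scenario scores (%) transcribed from the tables in this review, in the order:
# GPT-4 no prompt, GPT-4 with prompt, Claude2 no prompt, Claude2 with prompt, human.
RESULTS = {
    "Originality.AI": (99, 50, 70, 30, 90),
    "Passed.AI": (99, 50, 70, 30, 90),
    "Winston AI": (95, 50, 60, 30, 90),
    "AI Detector Pro": (80, 5, 90, 50, 95),
    "GPTZero": (65, 50, 50, 50, 100),
    "Copyleaks": (65, 40, 50, 50, 70),
    "ContentDetector.AI": (45, 45, 50, 30, 95),
    "Content At Scale": (70, 10, 50, 10, 95),
    "Crossplag": (90, 5, 10, 10, 95),
    "Sapling AI": (60, 10, 15, 5, 95),
    "KazanSEO": (1, 1, 1, 1, 90),
    "Hugging Face": (2, 1, 1, 1, 90),
    "Writer.com": (0, 0, 0, 0, 95),
    "GLTR": (0, 0, 0, 0, 85),
}


def summarize(scores):
    """Return (overall accuracy, false positive rate, false negative rate) in %."""
    *ai_scores, human = scores
    overall = float(mean(scores))
    false_positive = 100 - human
    false_negative = float(mean([100 - s for s in ai_scores]))
    return overall, false_positive, false_negative


# Rank by overall accuracy; sorted() is stable, so ties keep table order.
rows = sorted(
    ((tool, *summarize(scores)) for tool, scores in RESULTS.items()),
    key=lambda row: row[1],
    reverse=True,
)

print(f"{'Tool':<20}{'Overall':>9}{'FP':>6}{'FN':>9}")
for tool, overall, fp, fn in rows:
    print(f"{tool:<20}{overall:>8.1f}%{fp:>5}%{fn:>8.2f}%")
```

Sorting by overall accuracy alone hides trade-offs: GPTZero ranks fifth overall yet had the lowest false positive rate (0%), while Copyleaks had the highest (30%).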

- How accurate are top detectors like Originality AI now?

Testing shows the best, Originality AI and Passed AI, reach about 68% overall accuracy and catch about 62% of AI samples, so they correctly spot roughly 6 out of 10. They do much better on AI without prompts (99% on raw GPT-4) but worse when fancy prompts are used. No tool is perfect yet, but the top ones find most AI.

- What AI detection tools work best for business?

For professional use, paid tools like Originality AI and Passed AI rule. They add extra features for detecting AI, customizing settings, and integrating into workflows. They cost more but deliver the goods for big business needs.

- Should I use a free or paid AI content detector?

It depends on your needs. Free tools like Content At Scale are great for beginners on a budget. But for big business use, paid tools are worth the investment. Consider your volume, risks, accuracy needs. For personal play, free may work. For business, paid brings more reliability.

- How do I choose the right AI detector?

Match the tool to how you’ll use it. Casual personal use? A basic freebie is fine. Students/teachers? Check out Passed AI. Marketing pro? Get one that integrates with your workflow. Consider volume, risks, budget to find the best fit.

- Can any tool catch all AI reliably today?

No tool is totally perfect yet. The best catch only about 62% of AI samples, and prompted content slips past most often. Until tech improves, some AI evades detection. Personally, I recommend Originality.AI and Passed.AI.

- How are top detection tools keeping up with new AI?

Leading tools constantly update their formulas as new models like Claude emerge. They research, add fresh data, release regular upgrades. But AI evolves quickly. Even great tools lag briefly until they understand new tricks. Picking one that actively upgrades is key.

- What are the limits of current AI detection?

No tool flawlessly catches all AI yet. Savvy prompts still often sneak by. There’s always back-and-forth as generators and detectors advance. Expect some missed AI for now: even the best tools I tested had a false negative rate of about 38%. Use human review alongside detectors to catch what slips through. Accuracy is steadily getting better.

- How can I strengthen AI detection in my process?

Use detectors as just a first filtering step. Then have humans review to catch misses. Check creator histories, monitor work quality changes, run plagiarism checks. Set AI rules and test randomly. Combining detectors with robust processes boosts integrity as the tech evolves.
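As a rough illustration of that layered process, here is a hypothetical triage rule in Python. The `Submission` fields, the 0-to-1 `ai_score`, the 0.5 review threshold, and the 10% spot-check rate are all assumptions for this sketch, not settings or output formats from any detector reviewed here.

```python
import random
from dataclasses import dataclass


@dataclass
class Submission:
    """A piece of content awaiting review (fields are illustrative)."""
    author: str
    ai_score: float               # hypothetical detector output: 0.0 = human, 1.0 = AI
    plagiarism_hit: bool = False  # result of a separate plagiarism check


def triage(sub: Submission, rng: random.Random,
           review_threshold: float = 0.5,
           spot_check_rate: float = 0.1) -> str:
    """Use the detector as a first filter; people make the final call."""
    if sub.plagiarism_hit:
        return "human review: plagiarism"
    if sub.ai_score >= review_threshold:
        # Flagged, but never auto-rejected: false positives still happen.
        return "human review: possible AI"
    if rng.random() < spot_check_rate:
        # Random spot checks catch some of the AI that slips past the detector.
        return "human review: random spot check"
    return "accept"


rng = random.Random(42)  # seeded so runs are repeatable
for sub in (Submission("alice", 0.82),
            Submission("bob", 0.12, plagiarism_hit=True),
            Submission("cara", 0.05)):
    print(sub.author, "->", triage(sub, rng))
```

The key design choice is that no branch rejects content automatically: a high score only routes it to a person, which matters when even the leading tools showed a 10% false positive rate in testing.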

- What’s coming next for AI detectors?

As AI keeps transforming, detection tech will innovate to keep pace. We’ll likely see better accuracy, less missed AI. Integration into creation platforms will grow. And new approaches like style analysis will improve things. The tech race guarantees exciting advances in AI detection ahead!

About the Author

Meet Alex Kosch, your go-to buddy for all things AI! Join our friendly chats on discovering and mastering AI tools, while we navigate this fascinating tech world with laughter, relatable stories, and genuine insights. Welcome aboard!
